November 23, 2019

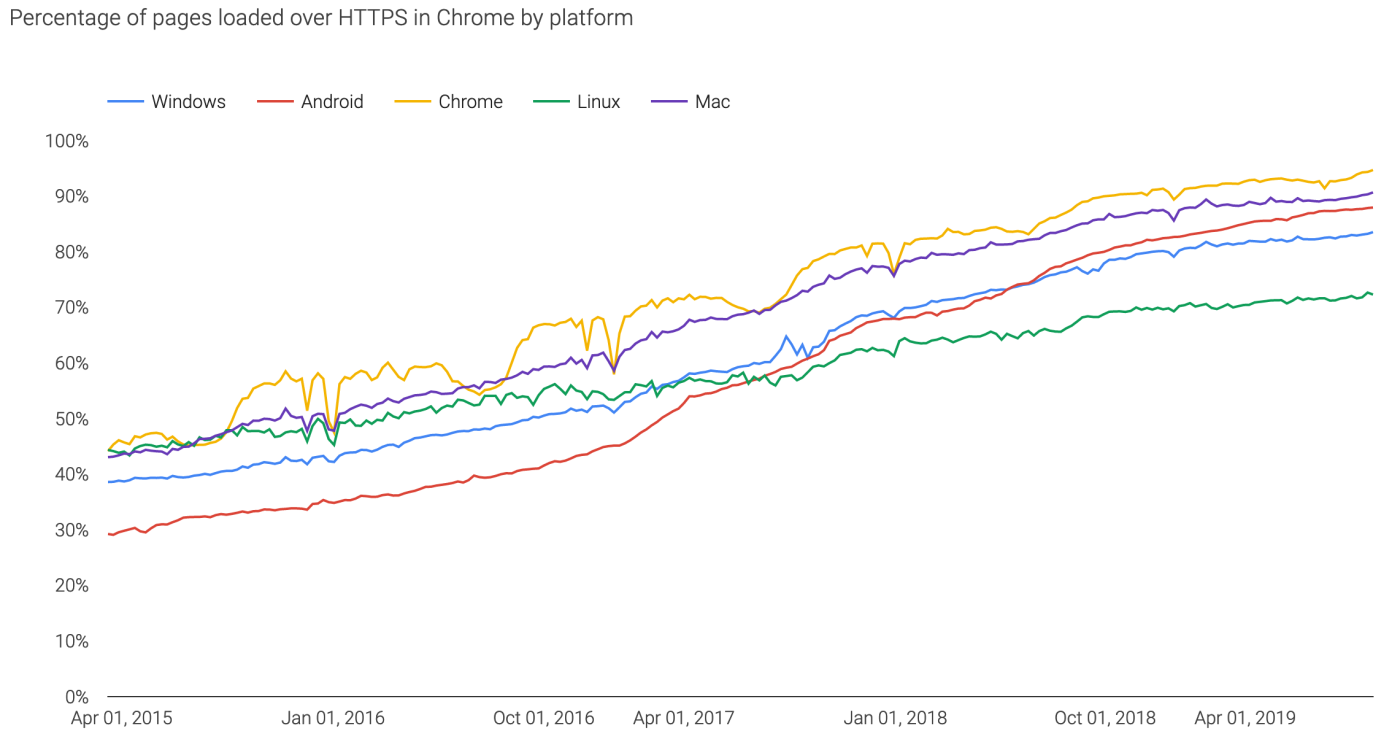

HTTPS reaches 80% - mission accomplished after 14 years

A post on Matthew Green's blog highlights that Snowden revelations helped the push for HTTPS everywhere.

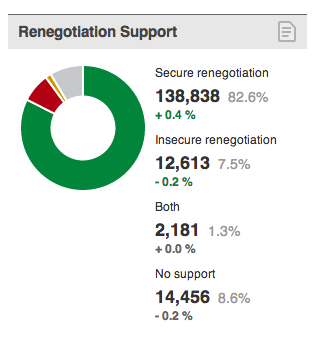

Firefox reports a similar figure, which points to a web-wide result of about 80%.

(It should be noted that Google's decision to reward HTTPS users by prioritising it in search results probably helped more than anything, but before you jump for joy at this new-found love for security socialism, note that it isn't working too well in the fake news department.)

The significance of this is that back in around 2005 some of us first worked out that we had to move the entire web to HTTPS. Logic at the time was:

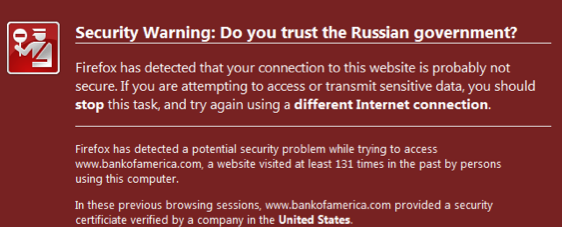

Why is this important? Why do we care that a small group of sites are still running SSL v2? Here's why - it feeds into phishing:

1. In order for browsers to talk to these sites, they still perform the SSL v2 Hello.
2. Which means they cannot talk the TLS hello.
3. Which means that servers like Apache cannot implement TLS features to operate multiple web sites securely through multiple certificates.
4. Which further means that the spread of TLS (a.k.a. SSL) is slowed down dramatically (only one protected site per IP number - schlock!), and
5. this finally means that anti-phishing efforts at the browser level haven't a leg to stand on when it comes to protecting 99% of the web.

Until *all* sites stop talking SSL v2, browsers will continue to talk SSL v2. Which means the anti-phishing features we have been building and promoting are somewhat held back because they don't so easily protect everything.

For the tl;dr: we can't protect the web when HTTP is possible. Having both HTTP and HTTPS as alternatives broke the rule that there is only one mode, and it is secure. It allowed attackers like phishers to just use HTTP and *pretend* it was secure.

The significance of this for me is that, from that point of time until now, we can show that a typical turn around the OODA loop (observe, orient, decide, act) of Information Security Combat took about 14 years. Albeit in the Internet protocol world, but that happens to be a big part of it.

During that time, a new bogeyman turned up - the NSA listening to everything - but that's ok. Decent security models should cover multiple threats, and we don't so much care which threat gets us to a comfortable position.

October 19, 2018

AES was worth $250 billion

So says NIST...

10 years ago I annoyed the entire crypto-supply industry:

Hypothesis #1 -- The One True Cipher Suite In cryptoplumbing, the gravest choices are apparently on the nature of the cipher suite. To include latest fad algo or not? Instead, I offer you a simple solution. Don't.

There is one cipher suite, and it is numbered Number 1.

Cipher Suite #1 is always negotiated as Number 1 in the very first message. It is your choice, your ultimate choice, and your destiny. Pick well.

The One True Cipher Suite was born of watching projects and groups wallow in the mire of complexity, as doubt caused teams to add multiple algorithms- a complexity that easily doubled the cost of the protocol with consequent knock-on effects & costs & divorces & breaches & wars.

It - The One True Cipher Suite as an aphorism - was widely ridiculed in crypto and standards circles. Developers and standards groups like the IETF just could not let go of crypto agility, the term that was born to champion the alternate. This sacred cow led the TLS group to field something like 200 standard suites in SSL and radically reduce them to 30 or 40 over time.

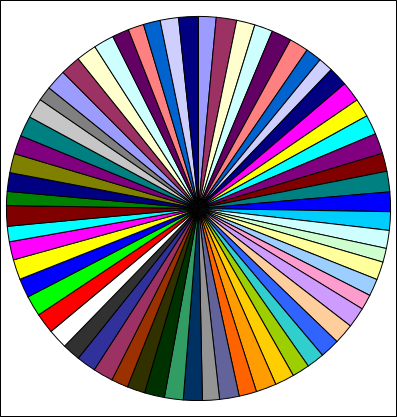

Now, NIST has announced that AES as a single standard algorithm is worth $250 billion in economic benefit over the 20 years of its project lifetime - from 1998 to now.

h/t to Bruce Schneier, who also said:

"I have no idea how to even begin to assess the quality of the study and its conclusions -- it's all in the 150-page report, though -- but I do like the pretty block diagram of AES on the report's cover."

One good suite based on AES allows agility within the protocol to be dropped. Entirely. Instead, upgrade the entire protocol to an entirely new suite, every 7 years. I said, if anyone was asking. No good algorithm lasts less than 7 years.
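As a rough illustration of what dropping agility looks like on the wire, here is a minimal sketch in which the first byte of the first message names the one suite and anything else is refused. The function names, the one-byte framing and the exception type are all assumptions made for this example, not part of any real protocol.

```python
SUITE_NUMBER = 1  # hypothetical: the single suite this protocol version speaks


class UnsupportedSuite(Exception):
    """Raised when the peer names any suite other than ours."""


def build_hello(payload: bytes) -> bytes:
    """First message: one byte naming the suite, followed by the payload."""
    return bytes([SUITE_NUMBER]) + payload


def parse_hello(message: bytes) -> bytes:
    """Accept the message only if it names our single suite; nothing is negotiated."""
    if not message:
        raise UnsupportedSuite("empty hello")
    if message[0] != SUITE_NUMBER:
        raise UnsupportedSuite(
            f"peer speaks suite {message[0]}, we speak {SUITE_NUMBER}"
        )
    return message[1:]
```

Upgrading, in this picture, means shipping a new protocol whose constant is 2, rather than teaching the old one to choose.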

Crypto-agility was a sacred cow that should have been slaughtered years ago, but maybe it took this report from NIST to lay it down: $250 billion of benefit.

In another footnote, we of the Cryptix team supported the AES project because we knew it was the way forward. Raif built the Java test suite and others in our team wrote and deployed contender algorithms.

June 29, 2017

SegWit and the dispersal of the transaction

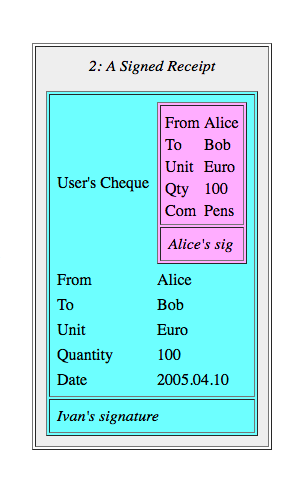

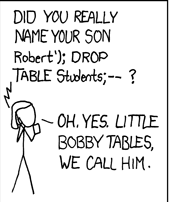

Jimmy Nguyen criticises SegWit on the basis that it breaks the signature of a contract according to US law. This is a reasonable argument to make but it is also not a particularly relevant one. In practice, this only matters in the context of a particularly vicious and time-wasting case. You could argue that all of them are, and lawyers will argue on your dime that you have to get this right. But actually, for the most part, in court, lawyers don’t like to argue things that they know they are going to lose. The contract is signed, it’s just not signed in a particularly helpful fashion. For the most part, real courts and real judges know how to distinguish intent from technical signatures, so it would only be relevant where the law states that a particular contract must be signed in a particular way, and then we’ve got other problems. Yes, I know, UCC and all that, but let’s get back to the real world.

But there is another problem, and Nguyen’s post has triggered my thinking on it. Let’s examine this from the perspective of triple entry. When we (by this I mean to include Todd and Gary) were thinking of the problem, we isolated each transaction as being essentially one atomic element. Think of an entry in accounting terms. Or think of a record in database terms. However you think about it, it’s a list of horizontal elements that are standalone.

When we sign it using a private key, we take the signature and append it to the entry. By this means, the entry becomes stronger - it carries its authorisation - but it still retains its standalone property.

So, with the triple entry design in that old paper, we don’t actually cut anything out of the entry, we just make it stronger with an appended signature. You can think of it as a strict superset of the old double entry, and even the older single entry if you wanted to go that far. That makes it compatible, which is a nice property: we can extract double entry from triple entry and still use all the old software we’ve built over the last 500 years.

And, standalone means that Alice can talk to Bob about her transactions, and Bob can talk to Carol about his transaction without sharing any irrelevant or private information.
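A minimal sketch of the appended-signature idea follows. Python's standard library has no public-key signatures, so HMAC-SHA256 stands in for the EC signature here; that is a loud assumption, since a real entry would be verifiable with a public key alone. The field names and helper functions are hypothetical.

```python
import hashlib
import hmac
import json


def canonical(entry: dict) -> bytes:
    """Serialise the entry deterministically so signer and verifier agree on the bytes."""
    return json.dumps(entry, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_entry(entry: dict, key: bytes) -> dict:
    """Return a copy of the entry with its authorisation appended (HMAC as a stand-in)."""
    sig = hmac.new(key, canonical(entry), hashlib.sha256).hexdigest()
    return {**entry, "sig": sig}


def double_entry_view(signed: dict) -> dict:
    """The old-style entry is the signed one minus the signature: a strict subset."""
    return {k: v for k, v in signed.items() if k != "sig"}


def verify_entry(signed: dict, key: bytes) -> bool:
    """Check the entry on its own, with no reference to any other record."""
    expected = hmac.new(key, canonical(double_entry_view(signed)), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signed.get("sig", ""))
```

An entry with the signature stripped still reads as a plain accounting entry, but `verify_entry` can no longer say anything about it, which is the standalone property being lost.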

Now, Satoshi’s design for triple entry broke the atomicity of transactions for consensus purposes. But it is still possible to extract the entries out of the UTXO, and they remain standalone because they carry their signature. This is especially important for say an SPV client, but it’s also important for any external application.

Like this: I’m flying to Shanghai next week on Blockchain Airlines, and I’ve got to submit expenses. I hand the expenses department my Bitcoin entries, sans signatures, and the clerk looks at them and realises they are not signed. See where this is going? Because, compliance, etc, the expenses department must now be a full node. Not SPV. It must now hold the entire blockchain and go searching for that transaction to make sure it’s in there - it’s real, it was expended. Because, compliance, because audit, because tax, because that’s what they do - check things.

If Bitcoin is triple entry, this is making it a more expensive form of triple entry. We don’t need those costs, bearing in mind that these costs are replicated across the world - every user, every transaction, every expenses report, every accountant. For the cost of including a signature, an EC signature at that, the extra bytes gain us a LOT of strength, flexibility and cost savings.

(You could argue that we have to provide external data in the form of the public key. So whoever’s got the public key could also keep the sigs. This is theoretically true but is starting to get messy and I don’t want to analyse right now what that means for resource, privacy, efficiency.)

Some might argue that this causes more spread of Bitcoin, more fullnodes and more good - but that’s the broken window fallacy. We don’t go around breaking things to cause the economy to boom. A broken window is always a dead loss to society, although we need to constantly remind the government to stop breaking things to fix them. Likewise, we do not improve things by loading up the accounting departments of the world with additional costs. We’re trying to remove those costs, not load them up, honestly!

Then, but malleability! Yeah, that’s a nuisance. But the goal isn’t to fix malleability. The goal is to make the transactions more certain. Segwit hasn’t made transactions more certain if it has replaced one uncertainty with another uncertainty.

Today, I’m not going to compare one against the other - perhaps I don’t know enough, and perhaps others can do it better. Perhaps it is relatively better if all things are considered, but it’s not absolutely better, and for accounting, it looks worse.

Which does rather put the point on one’s worldview. SegWit seems to retain the certainty but only as outlined above: when one’s worldview is full nodes, Bitcoin is your hammer and your horizon. E.g., if you’re only thinking about validation, then signatures are only needed for validation. Nailed it.

But for everyone else? Everyone else, everyone outside the Bitcoin world is just as likely to simply decline as they are to add a full node capability. “We do not accept Bitcoin receipts, thanks very much.”

Or, if you insist on Bitcoin, you have to go over to this authority and get a signed attestation by them that the receipt data is indeed valid. They’ve got a full node. Authenticity as a service. Some will think “business opportunity!” whereas others will think “huh? Wasn’t a central authority the sort of thing we were trying to avoid?”

I don’t know what the size of the market for interop is, although I do know quite a few people who obsess about it and write long unpublished papers (daily reminder - come on guys, publish the damn things!). Personally I would not make that tradeoff. I’m probably biased tho, in the same way that Bitcoiners are biased: I like the idea of triple entries, in the same way that Bitcoiners like UTXO. I like the idea that we can rely on data, in the same way that Bitcoiners like the idea that they can rely on a bunch of miners.

Now, one last caveat. I know that SegWit in all its forms is a political food fight. Or a war, depending on your use of the language. I’m not into that - I keep away from it because to my mind war and food fights are a dead loss to society. I have no position one way or the other. The above is an accounting and contractual argument, albeit with political consequences. I’m interested to hear arguments that address the accounting issues here, and not at all interested in arguments based on “omg you’re a bad person and you’re taking money from my portfolio.”

I’ve little hope of that, but I thought I’d ask :-)

February 27, 2017

Today I’m trying to solve my messaging problem...

Financial cryptography is that space between crypto and finance which by nature of its inclusiveness of all economic activities, is pretty close to most of life as we know it. We bring human needs together with the net marketplace in a secure fashion. It’s all interconnected, and I’m not talking about IP.

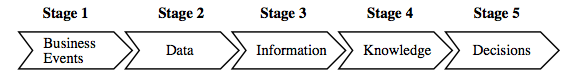

Today I’m trying to solve my messaging problem. In short, tweak my messaging design to better support the use case or community I have in mind, from the old client-server days into a p2p world. But to solve this I need to solve the institutional concept of persons, i.e. those who send messages. To solve that I need an identity framework. To solve the identity question, I need to understand how to hold assets, as an asset not held by an identity is not an asset, and an identity without an asset is not an identity. To resolve that, I need an authorising mechanism by which one identity accepts another for asset holding, that which banks would call "onboarding" but it needs to work for people not numbers, and to solve that I need a voting solution. To create a voting solution I need a resolution to the smart contracts problem, which needs consensus over data into facts, and to handle that I need to solve the messaging problem.

Bugger.

A solution cannot therefore be described in objective terms - it is circular, like life, recursive, dependent on itself. Which then leads me to thinking of an evolutionary argument, which, assuming an argument based on a higher power is not really on the table, makes the whole thing rather probabilistic. Hopefully, the solution is more probabilistically likely than human evolution, because I need a solution faster than 100,000 years.

This could take a while. Bugger.

February 23, 2017

SHA1 collision attack - FINALLY after TWELVE years

Timeline on a hash collision attack:

| Year | Event |
|------|-------|
| 1993 | SHA0 published |
| 1995 | SHA1 published due to weaknesses found |
| 2001 | SHA2 published due to expectations of weakness in SHA1 |
| 2005 | Shandong team MD5 attacked, SHA1 worried |
| 2009? | RocketSSL breached for using MD5 |
| 2014 | Chrome responds and starts phasing out SHA1 |
| 2017 | CWI & Google announce collision attack on SHA1 |

The point I wish to make here is that SHA1 was effectively deprecated in 2001 with the publication of SHA2. If you are vulnerable to a collision attack, then you had your moment of warning sixteen years ago.

On the other hand, think about this for a moment - in 2005 the Shandong shot was heard around the cryptographic world. Everyone knew! But we now see that SHA1 lasted an additional 12 years before it crumbled to a collision attack. That shows outstanding strength, an incredible run.

On the third hand, let's consider your protocol. If your protocol is /not/ vulnerable to a collision attack then SHA1 is still good. As is SHA0 and MD5. And, as an aside, no protocol should be vulnerable to a collision attack - such weakness is probably a bug.

So SHA1 is technically only a problem if you have another weakness in your protocol. And if you have that weakness, well, it's a pretty big one, and you should be worried for everything, not just SHA1.

On the fourth hand, however, institutions are too scared to understand the difference, and too bureaucratic to suggest better practices like eliminating collision vulnerabilities. Hence, all software suppliers have been working to deprecate SHA1 from consideration. To show you how asinine this gets, some software suppliers are removing older hash functions so, presumably you can't use them - to either make new ones or check old ones. (Doh!)

Security moves as a herd not as a science. Staying within the herd provides sociability in numbers, but social happiness shouldn't be mistaken for security, as the turkey well knows.

Finally, on the fifth hand, I still use SHA1 in Ricardo for naming Ricardian Contracts. Try for the life of me, and I still can't see how to attack it with collisions. As, after all, the issuer signs his own contract, and if he collides, he's up for both contracts, and there are copies of both distributed...

There is no cause for panic, if you've done your homework.

November 30, 2016

Corda Day - a new force

Today is the day that Corda goes open source. Which will be commented far and wide, so not a lot of point in duplicating that effort. But perhaps a few comments on where this will lead us, as a distributed ledger sector.

For a long time, since 2009, Bitcoin dominated the scene. Ethereum successfully broke that monopoly on attention, not without a lot of pain, but it is safe to say that for a while now, there have been two broad churches in town.

As Corda comes out, it will create a third force. From today, the world moves to three centers of gravity. As with the fabled three-body-gravity problem of astrophysics, it's a little difficult to predict how this will pan out but some things can be said.

This post is to predict that third force. First, a recap of features, and shortfalls. Then, direction, and finally interest.

Featurism. It has to be said again and again (and over and over) that Corda is a system that was built for what the finance world wanted. It wasn't ever a better blockchain, indeed, it's not even a blockchain - that was considered optional and in the event, discarded. It also wasn't ever a smarter contract, as seen against say Ethereum.

Corda was what made sense to corporates wanting to trade financial instruments - a focus which remains noticeably lacking in 'the incumbent chains' and the loud startups.

Sharing. In particular, as is well hashed in the introductory paper: Corda does not share the data except with those who are participants to the contract. This is not just a good idea, it's the law - there are lots and lots of regulations in place that make sharing data a non-starter if you are in the regulated world. Selling a public, publishing blockchain to a bank is like selling a prime beef steak to a vegetarian - the feedgrain isn't going to improve your chances of a sale.

Toasting. Corda also dispenses with the planet-warming proof of work thing. While an extraordinary innovation, it just will not fly in a regulated world. Sorry about that, guys. But, luckily, it turns out we don't need it in the so-called private chain business - because we are dealing with a semi-trusted world in financial institutions, they can agree on a notary to sign off on some critical transactions. And -- innovation alert here -- as it happens, the notary is an interface or API. It can be a single server, or if you feel like going maximal, you can hook up a blockchain at that point. In theory at least, Corda can happily use Bitcoin to do its coordination, if you write the appropriate notary interface. If that's your thing. And for a few use cases, a blockchain works for the consensus part.
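To make "the notary is an interface" concrete, here is a small sketch. It is not Corda's actual API: the `Notary` class, the `notarise` method and the idea that signing off means refusing to see the same input consumed twice are all assumptions made for illustration.

```python
from abc import ABC, abstractmethod
from typing import Dict, Iterable


class Notary(ABC):
    """Hypothetical interface: anything that can sign off on a transaction."""

    @abstractmethod
    def notarise(self, tx_id: str, inputs: Iterable[str]) -> bool:
        """Return True if the transaction is accepted."""


class SingleServerNotary(Notary):
    """Simplest backing: one server remembering which inputs are already consumed."""

    def __init__(self) -> None:
        self._consumed: Dict[str, str] = {}  # input state -> transaction that consumed it

    def notarise(self, tx_id: str, inputs: Iterable[str]) -> bool:
        inputs = list(inputs)
        # Refuse if any input was already consumed by a different transaction.
        for state in inputs:
            if self._consumed.get(state, tx_id) != tx_id:
                return False
        for state in inputs:
            self._consumed[state] = tx_id
        return True
```

A blockchain-backed notary would be another subclass with the same method, which is the point of hiding the choice behind an interface.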

These are deviations. Then there are similarities.

Full language capability. Corda took one lead from Ethereum which was the full Turing-complete VM - although we use Java's JVM as it's got 20 years of history, and Java is the #1 language in finance. Which we can do without the DAO syndrome because our contracts will be user-driven, not on an unstoppable computer - if there's a problem, we just stop and resolve it. No problem.

UTXO. Corda also took the UTXO transaction model from Bitcoin - for gains in scalability and flexibility.

There's a lot more, but in brash summary - Corda is a lot closer to what the FIs might want to use.

Minuses. I'm not saying it's perfect, so let me say some bad things: Corda is not ready for production, has zero users, zero value on-ledger. It has not been reviewed for security, nor does that make sense until it's built out. It's missing some key things (which you can see in the docs or the new technical paper). It hasn't been tested at scale, neither with a regulator nor with a real user base.

Direction. Corda has a long long way to go, but the way it is going is ever closer to that same direction - what financial institutions want. The Ethereum people and the Bitcoin people have not really cottoned on to user-driven engineering, and remain bemused as to who the users of their system are.

Which brings us to the next point - interest. Notwithstanding all the above, or perhaps because of it - Corda already has the attention of the financial world:

- Regulators are increasingly calling R3 for expertise in the field.

- 75 or so members, each of which is probably larger than the entire blockchain field put together, have signed up. OK, so there is some expected give and take as R3 goes through its round process (which I don't really follow so don't ask) but even with a few pulling out, members are still adding and growth is still firmly positive.

- Here's a finger in the air guess: I could be wrong, but I think that as of today we already have about the same order of magnitude of programming talent working on Corda as Bitcoin or Ethereum, provided to us by various banks working a score or more projects. Today, we'll start the process of adding a zero. OK, adding that zero might take a month or two. But thereafter we're going to be looking at the next zero.

- Internally, members have been clamouring to get into it for 6 months now - but capacity has been too tight because of the dev team bottleneck. That changes today.

All of which is to say: I predict that Corda will shoot to pole position. That's because it is powered by its members, and it is focussed on their needs. A clear feedback loop which is totally absent in the blockchain world.

The Game. Today, Corda becomes the third force in distributed ledger technologies. But I also predict it's not only the game changer, it's the entire game.

The reason I say that is because it is the only game that has asked the users what they want. In contrast, Bitcoin told the users it wanted an unstoppable currency - sure, works for a small group but not for the mass market. Ethereum told their users they need an unstoppable machine - which worked how spectacularly with the DAO? Not. What. We. Wanted.

Corda is the only game in town because it's the only one that asked the users. It's that simple.

June 12, 2016

Where is the Contract? - a short history of the contract in Financial Cryptography systems

(Editor's note: Dates are approximate. Written in May of 2014 as an educational presentation to lawyers, notes forgotten until now. Freshened 2016.09.11.)

Where is the contract? This is a question that has bemused the legal fraternity, bewitched the regulator, and sent the tech community down the proverbial garden path. Let's track it down.

Within the financial cryptography community, we have seen the discussion of contracts in approximately these ways:

- Smart Contracts, as performance machines with money,

- Ricardian Contract which captures the writings of an agreement,

- Compositions: of elements such as the "offer and acceptance" agreement into a Russian Doll Contracts pattern, or of clause-code pairs, or of split contract constructions.

Let's look at each in turn.

a. Performance

a(i). Nick Szabo theorised the notion of smart contracts as far back as 1994. His design postulated the ability of our emerging financial cryptography technology to automate the performance of human agreements within computer programs that also handled money. That is, they are computer programs that manage performance of a contract with little or no human intervention.

At an analogous level at least, smart contracts are all around. So much of the performance of contracts is now built into the online services of corporations that we can't even count them anymore. Yet these corporate engines of performance were written once then left running forever, whereas Szabo's notion went a step further: he suggested smart contracts as more of a general service to everyone: your contractual-programmer wrote the smart contract and then plugged it into the stack, or the service or the cloud. Users would then come along and interact with this machine, to get services.

a(ii). Bitcoin. In 2009 Bitcoin deployed a limited form of Smart Contracts in an open service or cloud setting called the blockchain. This capability was almost a side-effect of a versatile payments transaction of smart contracts. After author Satoshi Nakamoto left, the power of smart contracts was reduced in scope somewhat due to security concerns.

To date, success has been limited to simple uses such as Multisig which provides a separation of concerns governance pattern by allowing multiple signers to release funds.

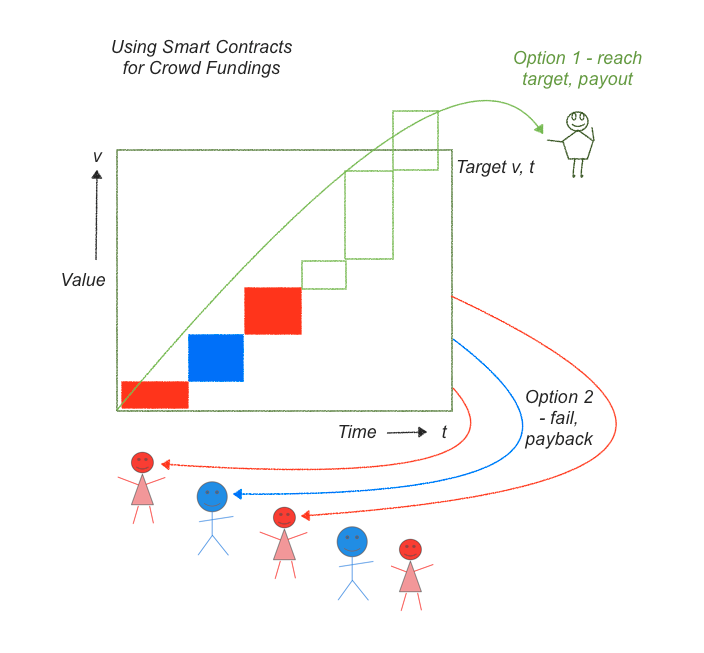

If we look at the above graphic we can see a fairly complicated story that we can now reduce into one smart contract. In a crowd funding, a person will propose a project. Many people will contribute to a pot of money for that project until a particular date. At that date, we have to decide whether the pot of money is enough to properly fund the project and if so, send over the funds. If not, return the funds.

To code this up, the smart contract has to do these steps:

- describe the project, including a target value v and a strike date t.

- collect and protect contributions (red, blue, green boxes)

- on the strike date /t/, count the total, and decide on option 1 or 2:

  1. if the contributions reach the target v, pay all over to owner (green arc), else

  2. if the contributions fall short of the target v, pay them all back to funders (red and blue arcs).
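The steps above can be sketched as a small state machine. This is plain Python rather than any blockchain's scripting language, and the class name, the use of integer day numbers for dates and the dictionary of payouts are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Crowdfund:
    owner: str
    target: int        # the target value v
    strike_date: int   # the strike date t, as a day number
    pot: Dict[str, int] = field(default_factory=dict)
    settled: bool = False

    def contribute(self, funder: str, amount: int, today: int) -> None:
        """Collect and protect a contribution made before the strike date."""
        if self.settled or today >= self.strike_date:
            raise ValueError("funding period is over")
        if amount <= 0:
            raise ValueError("contribution must be positive")
        self.pot[funder] = self.pot.get(funder, 0) + amount

    def settle(self, today: int) -> Dict[str, int]:
        """On or after the strike date, return the payouts as {recipient: amount}."""
        if today < self.strike_date:
            raise ValueError("strike date not reached")
        if self.settled:
            raise ValueError("already settled")
        self.settled = True
        total = sum(self.pot.values())
        if total >= self.target:
            return {self.owner: total}   # option 1: pay all over to the owner
        return dict(self.pot)            # option 2: pay everything back to funders
```

Treating a total exactly equal to the target as success is a design choice here; the prose leaves that boundary open.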

A new service called Lighthouse now offers crowdfunding but keep your eyes open for crowdfunding in Ethereum as their smart contracts are more powerful.

b. Writings of the Contract

Back in 1996, as part of a startup doing bond trading on the net, I created a method to bring a classical 'paper' contract into touch with a digital accounting system such as cryptocurrencies. The form, which became known as the Ricardian Contract, was readily usable for anything that you could put into a written contract, beyond its original notion of bonds.

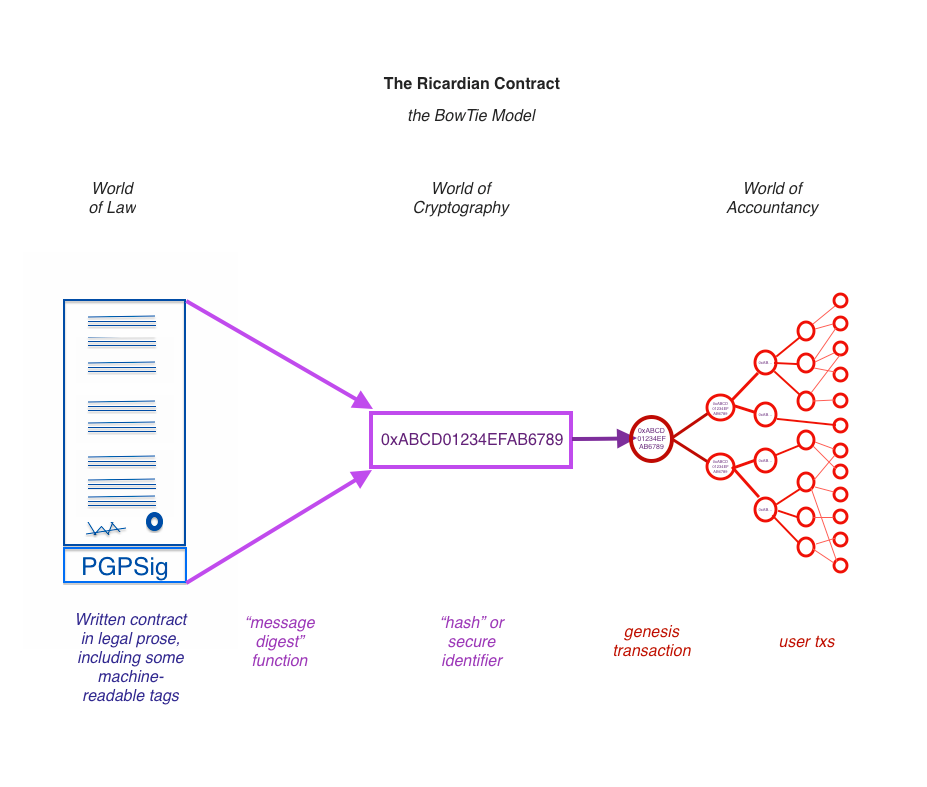

In short: write a standard contract such as a bond. Insert some machine-readable tags that would include parties, amounts, dates, etc that the program also needed to display. Then sign the document using a cleartext digital signature, one that preserves the essence as a human-readable contract. OpenPGP works well for that. This document can be seen on the left of this bow-tie diagram.

Then - hash the document using a cryptographic message digest function that creates a one-for-one identifier for the contract, as seen in the middle. Put this identifier into every transaction to lock in which instrument we're paying back and forth. As the transactions start from one genesis transaction and then fan out to many transactions, all of them including the Ricardian hash, with many users, this is shown in the right hand part of the bow-tie.
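A minimal sketch of the middle of the bow-tie follows: the signed contract text is hashed, and the digest travels in every transaction. SHA-256 and the transaction field names are assumptions for the example; any cryptographic message digest fills the same role.

```python
import hashlib


def contract_id(signed_contract_text: str) -> str:
    """Hash the full signed document to get its identifier."""
    return hashlib.sha256(signed_contract_text.encode("utf-8")).hexdigest()


def make_transaction(contract_text: str, payer: str, payee: str, amount: int) -> dict:
    """Every transaction names the instrument it moves by the contract's hash."""
    return {
        "contract": contract_id(contract_text),
        "payer": payer,
        "payee": payee,
        "amount": amount,
    }


def refers_to(transaction: dict, contract_text: str) -> bool:
    """Anyone holding the document can check that a transaction is bound to it."""
    return transaction["contract"] == contract_id(contract_text)
```

Because the identifier is computed over the whole document, changing a single character of the contract yields a different identifier, so a transaction cannot quietly be re-pointed at altered terms.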

See 2004 paper and wikipedia page on the Ricardian contract. We have then a contract form that is readable by person and machine, and can be locked into every transaction - from the genesis transaction, value trickles out to all others.

The Ricardian Contract is now emerging in the Bitcoin world. Enough businesses are looking at the possibilities of doing settlement and are discovering what I found in 1996 - we need a secure way to lock tangible writings of a contract on to the blockchain. A highlight might be NASDAQ's recent announcements, and Coinprism's recent work with OpenAssets project [1, 2, 3], and some of the 2nd generation projects have incorporated it without much fuss.

c. Composition

c(i). Around 2006 Chris Odom built OpenTransactions, a cryptocurrency system that extended Ricardian Contract beyond issuance. The author found:

"While these contracts are simply signed-XML files, they can be nested like russian dolls, and they have turned out to become the critical building block of the entire Open Transactions library. Most objects in the library are derived, somehow, from OTContract. The messages are contracts. The datafiles are contracts. The ledgers are contracts. The payment plans are contracts. The markets and trades are all contracts. Etc. I originally implemented contracts solely for the issuing, but they have really turned out to have become central to everything else in the library."

In effect Chris Odom built an agent-based system using the Ricardian Contract to communicate all its parameters and messages within and between its agents. He also experimented with Smart Contracts, but I think they were a server-upload model.

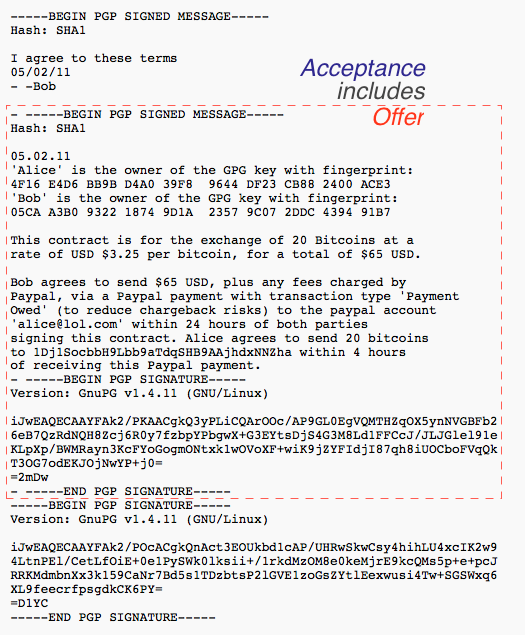

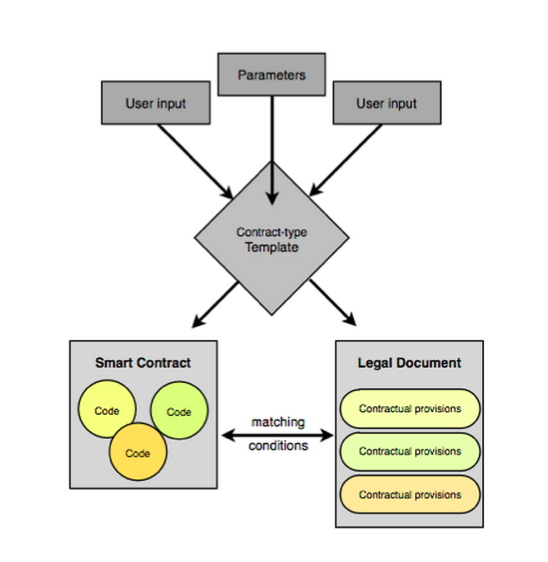

c(ii). CommonAccord constructs small units containing matching smart code and prose clauses, and then composes these into full contracts using the browser. Once composed, the result can be read, verified and hashed a la Ricardian Contracts, and performed a la smart contracts.

c(iii). Let's consider person to person trading. With face-to-face trades, the contract is easy. With mail order it is harder, as we have to identify each component, follow a journey, and keep the paperwork. With the Internet it is even worse because there is no paperwork, it's all pieces of digital data that might be displayed, might be changed, might be lost.

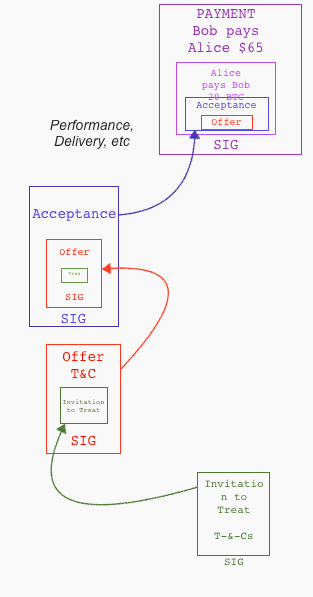

Shifting forward to 2014 and OpenBazaar decided to create a version of eBay or Amazon and put it onto the Bitcoin blockchain. To handle the formation of the contract between people distant and anonymous, they make each component into a Ricardian Contract, and place each one inside the succeeding component until we get to the end.

Let's review the elements of a contract in a cycle:

✓ Invitation to treat is found on blockchain similar to web page.

✓ offer by buyer

✓ acceptance by merchant

✓ (performance...)

✓ payment (multisig partner controls the money)

The Ricardian Contract finds itself as individual elements in the formation of the wider contract formation around a purchase. In each step, the prior step is included within the current contractual document. Like the lego blocks above, we can create a bigger contract by building on top of smaller components, thus implementing the trade cycle into Chris Odom's vision of Russian Dolls.
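The nesting can be sketched as follows, with each step carrying the whole prior step inside it. This is not OpenBazaar's or Open Transactions' format: the field names, the JSON encoding and the use of SHA-256 are assumptions, and signatures are left out to keep the sketch short.

```python
import hashlib
import json
from typing import List, Optional


def wrap(kind: str, terms: dict, prior: Optional[dict] = None) -> dict:
    """Build one step of the trade cycle with the prior step placed inside it."""
    return {"kind": kind, "terms": terms, "prior": prior}


def doc_hash(doc: dict) -> str:
    """Hash the whole document, which covers every nested inner document too."""
    data = json.dumps(doc, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(data).hexdigest()


def layers(doc: Optional[dict]) -> List[str]:
    """List the kinds of document from the outermost doll to the innermost."""
    kinds = []
    while doc is not None:
        kinds.append(doc["kind"])
        doc = doc["prior"]
    return kinds


invitation = wrap("invitation", {"item": "widget", "price": 5})
offer = wrap("offer", {"buyer": "bob"}, invitation)
acceptance = wrap("acceptance", {"merchant": "alice"}, offer)
payment = wrap("payment", {"multisig": "2-of-3"}, acceptance)
```

Hashing the outermost document commits to the whole history: change the price in the invitation and the hash of the payment changes with it.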

Conclusion

In conclusion, the question of the moment was:

Where is the contract?

So far, as far as the technology field sees it, in three areas:

- as performance - the Smart Contract

- as writing - the Ricardian Contract

- as composition - elements packaged into Russian Dolls, clause-code pairs and convergence as split contracts.

I see the future as convergence of these primary ideas: the parts or views we call smart & legal contracts will complement each other and grow together, being combined as elements into fuller agreements between people.

For those who think nothing much has changed in the world of contracts for a century or more, I say this: We live in interesting times!

(Editor's reminder: Written in May of 2014, and the convergence notion fed straight into "The Sum of all Chains".)

June 28, 2015

The Nakamoto Signature

The Nakamoto Signature might be a thing. In 2014, the Sidechains whitepaper by Back et al introduced the term Dynamic Membership Multiple-party Signature or DMMS -- because we love complicated terms and long impassable acronyms.

Or maybe we don't. I can never recall DMMS nor even get it right without thinking through the words; in response to my cognitive poverty, Adam Back suggested we call it a Nakamoto signature.

That's actually about right in cryptology terms. When a new form of cryptography turns up and it lacks an easy name, it's very often called after its inventor. Famous examples of this tradition include RSA for Rivest, Shamir, Adleman; Schnorr for the name of the signature that Bitcoin wants to move to. Rijndael is our most popular secret key algorithm, from the inventors' names, although you might know it these days as AES. In the old days of blinded formulas to do untraceable cash, the frontrunners were signatures named after Chaum, Brands and Wagner.

On to the Nakamoto signature. Why is it useful to label it so?

Because, with this literary device, it is now much easier to talk about the blockchain. Watch this:

The blockchain is a shared ledger where each new block of transactions - the 10 minutes thing - is signed with a Nakamoto signature.

Less than 25 words! Outstanding! We can now separate this discussion into two things to understand: firstly: what's a shared ledger, and second: what's the Nakamoto signature?

Each can be covered as a separate topic. For example:

the shared ledger can be seen as a series of blocks, each of which is a single document presented for signature. Each block consists of a set of transactions built on the previous set. Each succeeding block changes the state of the accounts by moving money around; so given any particular state we can create the next block by filling it with transactions that do those money moves, and signing it with a Nakamoto signature.
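As a rough sketch of that description, each block can be treated as a list of money moves applied to the previous state of the accounts. The function name `apply_block`, the tuple layout and the account names are all assumptions made for illustration; the signing step is deliberately left out here.

```python
def apply_block(state, transactions):
    """Return the next account state after applying one block's transactions."""
    next_state = dict(state)  # leave the previous state untouched
    for sender, receiver, amount in transactions:
        if amount <= 0 or next_state.get(sender, 0) < amount:
            raise ValueError(f"invalid transfer from {sender}")
        next_state[sender] -= amount
        next_state[receiver] = next_state.get(receiver, 0) + amount
    return next_state

# Hypothetical starting state and two succeeding blocks.
genesis = {"alice": 10, "bob": 0}
block_1 = [("alice", "bob", 4)]
block_2 = [("bob", "carol", 1)]

state = apply_block(apply_block(genesis, block_1), block_2)
print(state)
```

Given any particular state, the next block is just another list of transfers fed through the same function.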

Having described the shared ledger, we can now attack the Nakamoto signature:

A Nakamoto signature is a device to allow a group to agree on a shared document. To eliminate the potential for inconsistencies aka disagreement, the group engages in a lottery to pick one person's version as the one true document. That lottery is effected by all members of the group racing to create the longest hash over their copy of the document. The longest hash wins the prize and also becomes a verifiable 'token' of the one true document for members of the group: the Nakamoto signature.

That's it, in a nutshell. That's good enough for most people. Others however will want to open that nutshell up and go deeper into the hows, whys and whethers of it all. You'll note I left plenty of room for argument above; Economists will look at the incentive structure in the lottery, and ask if a prize in exchange for proof-of-work is enough to encourage an efficient agreement, even in the presence of attackers? Computer scientists will ask 'what happens if...' and search for ways to make it not so. Entrepreneurs might be more interested in what other documents can be signed this way. Cryptographers will pounce on that longest hash thing.
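For those who do want to open the nutshell a little, here is a toy version of the lottery. It rests on one loud assumption: the "longest hash" above is read the way Bitcoin's proof-of-work does it, as a hash that falls below a difficulty target. The names `block_hash`, `mine` and `verify`, the 8-byte nonce and the difficulty of 12 bits are illustrative choices, not Bitcoin's actual block format.

```python
import hashlib

def block_hash(document: bytes, nonce: int) -> int:
    """SHA-256 over the document plus a nonce, read as a big integer."""
    digest = hashlib.sha256(document + nonce.to_bytes(8, "big")).digest()
    return int.from_bytes(digest, "big")

def mine(document: bytes, difficulty_bits: int, max_tries: int = 1_000_000):
    """Try nonces in order until the hash falls below the target (the winning ticket)."""
    target = 1 << (256 - difficulty_bits)
    for nonce in range(max_tries):
        if block_hash(document, nonce) < target:
            return nonce
    return None  # no winning ticket found within max_tries

def verify(document: bytes, nonce: int, difficulty_bits: int) -> bool:
    """Anyone in the group can check the 'signature' with a single hash."""
    return block_hash(document, nonce) < (1 << (256 - difficulty_bits))

document = b"block of transactions"
nonce = mine(document, difficulty_bits=12)
print(nonce, verify(document, nonce, 12))
```

The asymmetry is the interesting part: finding the nonce takes thousands of hashes on average at this toy difficulty, while checking it takes one.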

But for most of us we can now move on to the real work. We haven't got time for minutiae. The real joy of the Nakamoto signature is that it breaks what was one monolithic incomprehensible problem into two more understandable ones. Divide and conquer!

The Nakamoto signature needs to be a thing. Let it be so!

NB: This article was kindly commented on by Ada Lovelace and Adam Back.

February 16, 2015

Google's BebaPay to close down, Safaricom shows them how to do it

In news today, BebaPay, the Google transit payment system in Nairobi, is shutting down. As predicted in this blog, the payment system was a disaster from the start, primarily because it did not understand the governance (aka corruption) flow of funds in the industry. This resulted in the erstwhile operators of the system conspiring to make sure it would not work.

How do I know this? I was in Nairobi when it first started up, and we were analysing a lot of market sectors for payments technology at the time. It was obvious to anyone who had actually taken a ride on a Matatu (the little buses that move millions of Kenyans to work) that automating their fares was a really tough sell. And, once we figured out how the flow of funds for the Matatu business worked, from inside sources, we knew a digital payments scheme was dead on arrival.

As an aside there is a play that could have been done there, in a nearby sector, which is the tuk-tuks or motorbike operators that are clustered at every corner. But that's a case-study for another day. The real point to take away here is that you have to understand the real flows of money, and when in Africa, understand that what we westerners call corruption means that our models are basically worthless.

Or in shorter terms, take a ride on the bus before you decide to improve it.

Meanwhile, in other news, Safaricom are now making a big push into the retail POS world. This was also in the wings at the time, and when I was there, we got the inside look into this field due to a friend who was running a plucky little mPesa facilitation business for retailers. He was doing great stuff, but the elephant in the room was always Safaricom, and it was no polite toilet-trained beast. Its reputation for stealing other companies' business ideas was a legend; in the payment systems world, you're better off modelling Safaricom as a bank.

Ah, that makes more sense... You'll note that Safaricom didn't press over-hard to enter the transit world.

The other great takeaway here is that westerners should not enter into the business of Africa lightly if at all. Westerners' biggest problem is that they don't understand the conditions there, and consequently they will be trapped in a self-fulfilling cycle of western pseudo-economic drivel. Perhaps even more surprising, they also can't turn to their reliable local NGOs or government partners or consultancies because these people are trained & paid by the westerners to feed back the same academic models.

How to break out of that trap economically is a problem I've yet to figure out. I've now spent a year outside the place, and I can report that I have met maybe 4 or 5 people amongst say 100 who actually understand the difference? Not a one of these is employed by an NGO, aid department, consultant, etc. And, these impressive organisations around the world that specialise in Africa are in this situation -- totally misinformed and often dangerously wrong.

I feel very badly for the poor of the world, they are being given the worst possible help, with the biggest smile and a wad of cash to help it along its way to failure.

Which leads me to a pretty big economic problem - solving this requires teaching what I learnt in a few years over a single coffee - can't be done. I suspect you have to go there, but even that isn't saying what's what.

Luckily however the developing world -- at least the parts I saw in Nairobi -- is now emerging with its own digital skills to address their own issues. Startup labs abound! And, from what I've seen, they are doing a much better job at it than the outsiders.

So, maybe this is a problem that will solve itself? Growth doesn't happen at more than 10% pa, so patience is perhaps the answer, not anger. We can live and hope, and if an NGO does want to take a shot at the title, I'm in for the 101st coffee.

November 19, 2014

Bitcoin and the Byzantine Generals Problem -- a Crusade is needed? A Revolution?

It is commonly touted that Bitcoin solves the Byzantine Generals Problem because it deals with coordination between geographically separated points, and there is an element of attack on the communications. But I am somewhat suspicious that this is not quite the right statement.

To review "the Byzantine Generals Problem," let's take some snippets from Lamport, Shostak and Pease's seminal paper of that name:

We imagine that several divisions of the Byzantine army are camped outside an enemy city, each division commanded by its own general. The generals can communicate with one another only by messenger. After observing the enemy, they must decide upon a common plan of action. However, some of the generals may be traitors, trying to prevent the loyal generals from reaching agreement. The generals must have an algorithm to guarantee that

A. All loyal generals decide upon the same plan of action.

The loyal generals will all do what the algorithm says they should, but the traitors may do anything they wish. The algorithm must guarantee condition A regardless of what the traitors do.

The loyal generals should not only reach agreement, but should agree upon a reasonable plan. We therefore also want to insure that

B. A small number of traitors cannot cause the loyal generals to adopt a bad plan.

Lamport, Shostak and Pease, "The Byzantine Generals Problem", ACM Transactions on Programming Languages and Systems, Vol. 4, No. 3, July 1982, Pages 382-401.

My criticism is one of strict weakening. Lamport et al addressed the problem of Generals communicating, but there are no Generals in the Bitcoin design. If we read Lamport, although it doesn't say it explicitly, there are N Generals, exactly, and they are all identified, being loyal or disloyal as it is stated. Which means that the Generals Problem only describes a fixed set in which everyone can authenticate each other.

While still a relevant problem, the Internet world of p2p solutions has another issue -- the sybil attack. Consider "Exposing Computationally-Challenged Byzantine Impostors" from 2005 by Aspnes, Jackson and Krishnamurthy:

Peer-to-peer systems that allow arbitrary machines to connect to them are known to be vulnerable to pseudospoofing or Sybil attacks, first described in a paper by Douceur [7], in which Byzantine nodes adopt multiple identities to break fault-tolerant distributed algorithms that require that the adversary control no more than a fixed fraction of the nodes. Douceur argues in particular that no practical system can prevent such attacks, even using techniques such as pricing via processing [9], without either using external validation (e.g., by relying on the scarceness of DNS domain names or Social Security numbers), or by making assumptions about the system that are unlikely to hold in practice. While he describes the possibility of using a system similar to Hashcash [3] for validating identities under certain very strong cryptographic assumptions, he suggests that this approach can only work if (a) all the nodes in the system have nearly identical resource constraints; (b) all identities are validated simultaneously by all participants; and (c) for "indirect validations," in which an identity is validated by being vouched for by some number of other validated identities, the number of such witnesses must exceed the maximum number of bad identities. This result has been abbreviated by many subsequent researchers [8, 11, 19-21] as a blanket statement that preventing Sybil attacks without external validation is impossible.

J. Aspnes, C. Jackson, and A. Krishnamurthy, "Exposing computationally-challenged byzantine impostors," Tech. Report YALEU/DCS/TR-1332, Yale University, 2005, http://www.cs.yale.edu/homes/aspnes/papers/tr1332.pdf

Prescient, or what? The paper then goes on to argue that the solution to the sybil attack is precisely in weakening the restriction over identity: *The good guys can also duplicate*.

We argue that this impossibility result is much more narrow than it appears, because it gives the attacking nodes a significant advantage in that it restricts legitimate nodes to one identity each. By removing this restriction...

This is clearly not what Lamport et al's Generals were puzzling over in 1982, but it is as clearly an important problem, related, and one that is perhaps more relevant to Internet times.

It's also the one solved according to the Bitcoin model. If Bitcoin solved the Byzantine Generals Problem, it did it by shifting the goal posts. Where then did Satoshi move the problem to? What is his problem?

With p2p in general and Bitcoin in particular, we're talking more formally about a dynamic membership set, where the set comes together once to demand strong consensus and that set is then migrated to a distinct set for the next round, albeit with approximately the same participants.

What's that? It's more like a herd, or a school of fish. As it moves forward, sudden changes in direction cause some to fall off, others to join.

The challenge then might be to come up with a name. Scratching my head to come up with an analogue in human military affairs, it occurs that the Crusades were something like this: A large group of powerful knights, accompanied by their individual retainers, with a forward goal in mind. The group was not formed on state lines typical of warfare but religious lines, and it would for circumstances change as it moved. Some joined, while some crusaders never made it to the front; others made it and died in the fighting, and indeed some entire crusades never made it out of Europe.

Crusaders were typically volunteers, often motivated by greed, force or threat of reputation damage. There were plenty of non-aligned interests in a crusade, and for added historical bonus, they typically travelled through Byzantium or Constantinople as it was then known. And, as often bogged down there, literally falling to the Byzantine attacks of the day.

Perhaps p2p faces the Byzantine Crusaders Problem, and perhaps this is what Bitcoin has solved?

In the alternate, I've seen elsewhere that the problem is referred to as the Revolutionaries' Problem. This term also works in that it speaks to the democracy of the moment. As a group of very interested parties come together they strike out at the old ways and form a new consensus over financial and other affairs.

History will be the judge of this, but it does seem that for the sake of pedagogy and accuracy, we need a new title. Byzantine Crusaders' problem? Democratic Revolutionaries' problem? Consensus needed!

September 03, 2014

Proof of Work made useful -- auctioning off the calculation capacity is just another smart contract

Just got tipped to Andrew Poelstra's faq on ASICs, where he says of Adam Back's Proof of Work system in Bitcoin:

In places where the waste heat is directly useful, the cost of mining is merely the difference between electric heat production and ordinary heat production (here in BC, this would be natural gas). Then electricity is effectively cheap even if not actually cheap.

Which is an interesting remark. If true -- assume we're in Iceland where there is a need for lots of heat -- then Bitcoin mining can be free at the margin. Capital costs remain, but we shouldn't look a gift horse in the mouth?

My view remains, and was from the beginning of BTC when Satoshi proposed his design, that mining is a dead-weight loss to the economy because it turns good electricity into bad waste, heat. And, the capital race adds to that, in that SHA2 mining gear is solely useful for ... Bitcoin mining. Such a design cannot survive in the long run, which is a reflection of Gresham's law, sometimes expressed as the simplistic aphorism of "bad money drives out good."

Now, the good thing about predicting collapse in the long run is that we are never proven wrong, we just have to wait another day ... but as Ben Laurie pointed out somewhere or other, the current incentives encourage the blockchain mining to consume the planet, and that's not another day we want to wait for.

Not a good thing. But if we switch production to some more socially aligned pattern /such as heating/, then likely we could at least shift some of the mining to a cost-neutrality.

Why can't we go further? Why can't we make the information calculated socially useful, and benefit twice? E.g., we can search for SETI, fold some DNA, crack some RSA keys. Andrew has commented on that too, so this is no new idea:

7. What about "useful" proofs-of-work?

These are typically bad ideas for all the same reasons that Primecoin is, and also bad for a new reason: from the network's perspective, the purpose of mining is to secure the currency, but from the miner's perspective, the purpose of mining is to gain the block reward. These two motivations complement each other, since a block reward is worth more in a secure currency than in a sham one, so the miner is incentivized to secure the network rather than attacking it.

However, if the miner is motivated not by the block reward, but by some social or scientific purpose related to the proof-of-work evaluation, then these incentives are no longer aligned (and may in fact be opposed, if the miner wants to discourage others from encroaching on his work), weakening the security of the network.

I buy the general gist of the alignments of incentives, but I'm not sure that we've necessarily unaligned things just by specifying some other purpose than calculating a SHA2 to get an answer close to what we already know.

Let's postulate a program that calculates some desirable property. Because that property is of individual benefit only, then some individual can pay for it. Then, the missing link would be to create a program that takes in a certain amount of money, and distributes that to nodes that run it according to some fair algorithm.

What's a program that takes in and holds money, gets calculated by many nodes, and distributes it according to an algorithm? It's Nick Szabo's smart contract distributed over the blockchain. We already know how to do that, in principle, and in practice there are many efforts out there to improve the art. Especially, see Ethereum.

So let's assume a smart contract. Then, the question arises how to get your smart contract accepted as the block calculation for 17:20 on this coming Friday evening? That's a consensus problem. Again, we already know how to do consensus problems. But let's postulate one method: hold a donation auction and simply order these things according to the amount donated. Close the block a day in advance and leave that entire day to work out which is the consensus pick on what happens at 17:20.

Didn't get a hit? If your smart contract doesn't participate, then at 17:30 it expires and sends back the money. Try again, put in more money? Or we can imagine a variation where it has a climbing ramp of value. It starts at 10,000 at 17:20 and then adds 100 for each of the next 100 blocks then expires. This then allows an auction crossing, which can be efficient.
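The donation auction and the climbing ramp can be sketched roughly as follows. Everything here is an assumption layered on the description above: the function names `ramp_bid` and `rank_by_donation`, the tuple layout for bids, and the unit of value (the post gives none). The ramp numbers are the ones in the text: start at 10,000, add 100 per block for 100 blocks, then expire.

```python
def ramp_bid(blocks_elapsed, start=10_000, step=100, ramp_blocks=100):
    """Value on offer after a number of blocks; None once the contract has expired."""
    if blocks_elapsed < 0 or blocks_elapsed > ramp_blocks:
        return None  # not yet active, or expired and the money is sent back
    return start + step * blocks_elapsed

def rank_by_donation(bids):
    """Order candidate contracts by the amount donated, highest first."""
    return sorted(bids, key=lambda bid: bid[1], reverse=True)

# Hypothetical candidates for one block slot: (contract name, donated amount).
candidates = [("fold-dna", 12_000), ("seti", 9_500), ("crack-rsa", 15_000)]
print(rank_by_donation(candidates)[0])
print(ramp_bid(0), ramp_bid(50), ramp_bid(101))
```

A miner deciding when to pick up a ramping contract is then comparing `ramp_bid` at the current block against the best fixed donation on offer, which is where the auction crossing comes from.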

An interesting attack here might be that I could code up a smartcontract-block-PoW that has a backdoor, similar to the infamous DUAL_EC random number generator from NIST. But, even if I succeed in coding it up without my obfuscated clause being spotted, the best I can do is pay for it to reach the top of the rankings, then win my own payment back as it runs at 17:20.

With such an attack, I get my cake calculated and I get to eat it too. As far as incentives go to the miner, I'd be better off going to the pub. The result is still at least as good as Andrew's comment, "from the network's perspective, the purpose of mining is to secure the currency."

What about the 'difficulty' factor? Well, this is easy enough to specify, it can be part of the program. The Ethereum people are working on the basis of setting enough 'gas' to pay for the program, so the notion of 'difficulty' is already on the table.

I'm sure there is something I haven't thought of as yet. But it does seem that there is more of a benefit to wring from the mining idea. We have electricity, we have capital, and we have information. Each of those is a potential for a bounty, so as to claw some sense of value back instead of just heating the planet to keep a bunch of libertarians with coins in their pockets. Comments?

April 20, 2014

Code as if everyone is the thief.

This is what financial cryptography is about (h/t to Jeroen). Copied from Qi of TheCodelessCode:

A novice asked of master Bawan: “Say something about the Heartbleed Bug.”

Said Bawan: “Chiuyin, the Governor’s treasurer, is blind as an earthworm. A thief may give him a coin of tin, claim that it is silver and receive change. When the treasury is empty, which man is the villain? Speak right and I will spare you all blows for one week. Speak wrong and my staff will fly!”

The novice thought: if I say the thief, Bawan will surely strike me, for it is the treasurer who doles out the coins. But if I say the treasurer he will also strike me, for it is the thief who takes advantage of the situation.

When the pause grew too long, Bawan raised his staff high. Suddenly enlightened, the novice cried out: “The Governor! For who else made this blind man his treasurer?”

Bawan lowered his staff. “And who is the Governor?”

Said the novice: “All who might have cried out ‘this man is blind!’ but failed to notice, or even to examine him.”

Bawan nodded. “This is the first lesson. Too easily we praise Open Source, saying smugly to each other, ‘under ten thousand eyeballs, every bug is laid bare’. Yet when the ten thousand avert their gaze, they are no more useful than the blind man. And now that I have spared you all blows for one week, stand at ease and tell me: what is the second lesson?”

Said the novice: “Surely, I have no idea.”

Bawan promptly struck the novice’s skull with his staff. The boy fell to the floor, unconscious.

As he stepped over the prone body, Bawan remarked: “Code as if everyone is the thief.”

April 06, 2014

The evil of cryptographic choice (2) -- how your Ps and Qs were mined by the NSA

One of the excuses touted for the Dual_EC debacle was that the magical P & Q numbers that were chosen by secret process were supposed to be defaults. Anyone was at liberty to change them.

Epic fail! It turns out that this might have been just that, a liberty, a hope, a dream. From last week's paper on attacking Dual_EC:

"We implemented each of the attacks against TLS libraries described above to validate that they work as described. Since we do not know the relationship between the NIST-specified points P and Q, we generated our own point Q′ by first generating a random value $e \leftarrow_R \{0, 1, \ldots, n-1\}$ where n is the order of P, and set Q′ = eP. This gives our trapdoor value $d \equiv e^{-1} \pmod{n}$ such that dQ′ = P. (Our random e and its corresponding d are given in the Appendix.) We then modified each of the libraries to use our point Q′ and captured network traces using the libraries. We ran our attacks against these traces to simulate a passive network attacker."

In the new paper that measures how hard it was to crack open TLS when corrupted by Dual_EC, the authors changed the Qs to match the P delivered, so as to attack the code. Each of the four libraries they had was in binary form, and it appears that each had to be hard-modified in binary in order to mind their own Ps and Qs.
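The trapdoor relation in the quoted passage (pick e, set Q′ = eP, keep d = e⁻¹ mod n so that dQ′ = P) can be checked on a toy curve. To be clear about the assumptions: this uses a tiny textbook curve, y² = x³ + 2x + 2 over F₁₇ with base point (5, 1) of prime order 19, not the NIST curves, and the helper names `add` and `mul` are mine. It shows only the algebra of the back door, not the attack on TLS.

```python
# Toy curve y^2 = x^3 + 2x + 2 over F_17; the point (5, 1) has prime order 19.
P_MOD, A, N = 17, 2, 19
P = (5, 1)

def add(p1, p2):
    """Add two curve points; None stands for the point at infinity."""
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    x1, y1 = p1
    x2, y2 = p2
    if x1 == x2 and (y1 + y2) % P_MOD == 0:
        return None  # a point plus its negative
    if p1 == p2:
        slope = (3 * x1 * x1 + A) * pow((2 * y1) % P_MOD, -1, P_MOD) % P_MOD
    else:
        slope = (y2 - y1) * pow((x2 - x1) % P_MOD, -1, P_MOD) % P_MOD
    x3 = (slope * slope - x1 - x2) % P_MOD
    y3 = (slope * (x1 - x3) - y1) % P_MOD
    return (x3, y3)

def mul(k, point):
    """Scalar multiplication by double-and-add."""
    result, addend = None, point
    while k:
        if k & 1:
            result = add(result, addend)
        addend = add(addend, addend)
        k >>= 1
    return result

e = 7                  # stands in for the secretly chosen random value
Q_prime = mul(e, P)    # the published "default" point
d = pow(e, -1, N)      # the trapdoor, known only to whoever chose e
print(Q_prime, d, mul(d, Q_prime) == P)
```

Whoever generated Q′ this way holds d; everyone else sees only two innocent-looking points. That is why it matters so much who picked the defaults, and whether anyone could change them.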

So did (a) the library implementors forget that issue? or (b) NIST/FIPS in its approval process fail to stress the need for users to mind their Ps and Qs? or (c) the NSA knew all along that this would be a fixed quantity in every library, derived from the standard, which was pre-derived from their exhaustive internal search for a special friendly pair? In other words:

"We would like to stress that anybody who knows the back door for the NIST-specified points can run the same attack on the fielded BSAFE and SChannel implementations without reverse engineering."

Defaults, options, choice of any form has always been known as bad for users, great for attackers and a downright nuisance for developers. Here, the libraries did the right thing by eliminating the chance for users to change those numbers. Unfortunately, they, NIST and all points thereafter, took the originals without question. Doh!

April 01, 2014

The IETF's Security Area post-NSA - what is the systemic problem?

In the light of yesterday's newly revealed attack by the NSA on Internet standards, what are the systemic problems here, if any?

I think we can question the way the IETF is approaching security. It has taken a lot of thinking on my part to identify the flaw(s), and not a few rants, with many and aggressive defences and counterattacks from defenders of the faith. Where I am thinking today is this:

First the good news. The IETF's Working Group concept is far better at developing general standards than anything we've seen so far (by this I mean ISO, national committees, industry cartels and whathaveyou). However, it still suffers from two shortfalls.

1. the Working Group system is more or less easily captured by the players with the largest budget. If one views standards as the property of the largest players, then this is not a problem. If OTOH one views the Internet as a shared resource of billions, designed to serve those billions back for their efforts, the WG method is a recipe for disenfranchisement. Perhaps apropos, spotted on the TLS list by Peter Gutmann:

Documenting use cases is an unnecessary distraction from doing actual work. You'll note that our charter does not say "enumerate applications that want to use TLS".

I think reasonable people can debate and disagree on the question of whether the WG model disenfranchises the users, because even though a company can out-manoeuvre the open Internet through sheer persistence and money, we can still see it happen. In this, IETF stands in violent sunlight compared to that travesty of mouldy dark closets, CABForum, which shut users out while industry insiders prepared the base documents in secrecy.

I'll take the IETF any day, except when...

2. the Working Group system is less able to defend itself from a byzantine attack. By this I mean the security concept of an attack from someone who doesn't follow the rules, and breaks them in ways meant to break your model and assumptions. We can suspect byzantium disclosures in the fingered ID:

The United States Department of Defense has requested a TLS mode which allows the use of longer public randomness values for use with high security level cipher suites like those specified in Suite B [I-D.rescorla-tls-suiteb]. The rationale for this as stated by DoD is that the public randomness for each side should be at least twice as long as the security level for cryptographic parity, which makes the 224 bits of randomness provided by the current TLS random values insufficient.

Assuming the story as told so far, the US DoD should have added "and our friends at the NSA asked us to do this so they could crack your infected TLS wide open in real time."

Such byzantine behaviour maybe isn't a problem when the industry players are for example subject to open observation, as best behaviour can be forced, and honesty at some level is necessary for long term reputation. But it likely is a problem where the attacker is accustomed to that other world: lies, deception, fraud, extortion or any of a number of other tricks which are the tools of trade of the spies.

Which points directly at the NSA. Spooks being spooks, every spy novel you've ever read will attest to the deception and rule breaking. So where is this a problem? Well, only in the one area they are interested in: security.

Which is irony itself as security is the field where byzantine behaviour is our meat and drink. Would the Working Group concept pass muster in an IETF security WG? Whether it does or no depends on whether you think it can defend against the byzantine attack. Likely it will pass-by-fiat because of the loyalty of those involved; I have been one of those WG stalwarts for a period, so I do see the dilemma. But in the cold hard light of day, who is comfortable supporting a WG that is assisted by NSA employees who will apply all available SIGINT and HUMINT capabilities?

Can we agree or disagree on this? Is there room for reasonable debate amongst peers? I refer you now to these words:

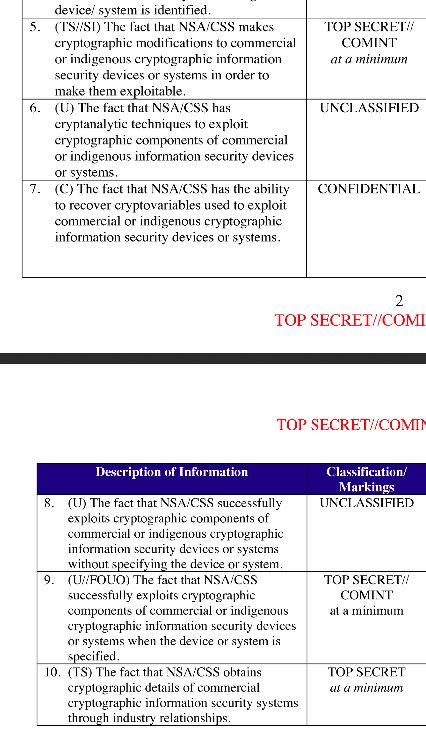

On September 5, 2013, the New York Times [18], the Guardian [2] and ProPublica [12] reported the existence of a secret National Security Agency SIGINT Enabling Project with the mission to “actively [engage] the US and foreign IT industries to covertly influence and/or overtly leverage their commercial products’ designs.” The revealed source documents describe a US $250 million/year program designed to “make [systems] exploitable through SIGINT collection” by inserting vulnerabilities, collecting target network data, and influencing policies, standards and specifications for commercial public key technologies. Named targets include protocols for “TLS/SSL, https (e.g. webmail), SSH, encrypted chat, VPNs and encrypted VOIP.”

The documents also make specific reference to a set of pseudorandom number generator (PRNG) algorithms adopted as part of the National Institute of Standards and Technology (NIST) Special Publication 800-90 [17] in 2006, and also standardized as part of ISO 18031 [11]. These standards include an algorithm called the Dual Elliptic Curve Deterministic Random Bit Generator (Dual EC). As a result of these revelations, NIST reopened the public comment period for SP 800-90.

And as previously written here. The NSA has conducted a long term programme to breach the standards-based crypto of the net.

As evidence of this claim, we now have *two attacks*, being clear attempts to trash the security of TLS and friends, and we have their own admission of intent to breach. In their own words. There is no shortage of circumstantial evidence that NSA people have pushed, steered, nudged the WGs to make bad decisions.

I therefore suggest we have the evidence to take to a jury. Obviously we won't be allowed to do that, so we have to do the next best thing: use our collective wisdom and make the call in the public court of Internet opinion.

My vote is -- guilty.

One single piece of evidence wasn't enough. Two was enough to believe, but alternate explanations sounded plausible to some. But we now have three solid bodies of evidence. Redundancy. Triangulation. Conclusion. Guilty.

Where it leaves us is in difficulties. We can try and avoid all this stuff by e.g., avoiding American crypto, but it is a bit broader than that. Yes, they attacked and broke some elements of American crypto (and you know what I'm expecting to fall next). But they also broke the standards process, and that had even more effect on the world.

It has to be said that the IETF security area is now under a cloud. Not only do they need to analyse things back in time to see where it went wrong, but they also need some concept to stop it happening in the future.

The first step however is to actually see the clouds, and admit that rain might be coming soon. May the security AD live in interesting times, borrow my umbrella?

March 31, 2014

NSA caught again -- deliberate weakening of TLS revealed!?

In a scandal that is now entertaining that legal term of art "slam-dunk" there is news of a new weakness introduced into the TLS suite by the NSA:

We also discovered evidence of the implementation in the RSA BSAFE products of a non-standard TLS extension called "Extended Random." This extension, co-written at the request of the National Security Agency, allows a client to request longer TLS random nonces from the server, a feature that, if enabled, would speed up the Dual EC attack by a factor of up to 65,000. In addition, the use of this extension allows for attacks on Dual EC instances configured with P-384 and P-521 elliptic curves, something that is not apparently possible in standard TLS.

This extension to TLS was introduced 3 distinct times through an open IETF Internet Draft process, twice by an NSA employee and a well known TLS specialist, and once by another. The way the extension works is that it increases the quantity of random numbers fed into the cleartext negotiation phase of the protocol. If the attacker has a heads up to those random numbers, that makes his task of divining the state of the PRNG a lot easier. Indeed, the extension definition states more or less that:

4.1. Threats to TLS

When this extension is in use it increases the amount of data that an attacker can inject into the PRF. This potentially would allow an attacker who had partially compromised the PRF greater scope for influencing the output.

The use of Dual_EC, the previously fingered dodgy standard, makes this possible. Which gives us 2 compromises of the standards process that when combined magically work together.

Our analysis strongly suggests that, from an attacker's perspective, backdooring a PRNG should be combined not merely with influencing implementations to use the PRNG but also with influencing other details that secretly improve the exploitability of the PRNG.
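To see why handing the attacker more public randomness helps him, here is a minimal sketch in Python. It is a toy and nothing like Dual EC itself: the 16-bit state, the hash-based `step` function and the one-byte outputs are all assumptions made up for illustration. The only point is that each extra output byte visible on the wire shrinks the set of internal states the attacker still has to consider.

```python
import hashlib

STATE_BITS = 16  # deliberately tiny so an exhaustive search finishes quickly


def step(state: int) -> tuple[int, int]:
    """Toy generator step: return (next_state, one public output byte)."""
    digest = hashlib.sha256(state.to_bytes(2, "big")).digest()
    next_state = int.from_bytes(digest[:2], "big")
    return next_state, digest[2]


def outputs(seed: int, n: int) -> bytes:
    """Run the toy generator for n steps and collect its public bytes."""
    out = bytearray()
    state = seed
    for _ in range(n):
        state, byte = step(state)
        out.append(byte)
    return bytes(out)


def candidates(observed: bytes) -> list[int]:
    """Every seed whose output stream starts with the observed bytes."""
    return [
        seed
        for seed in range(1 << STATE_BITS)
        if outputs(seed, len(observed)) == observed
    ]


if __name__ == "__main__":
    secret_seed = 0xBEEF
    wire = outputs(secret_seed, 4)  # what an eavesdropper would see
    for visible in (1, 2, 4):
        remaining = len(candidates(wire[:visible]))
        print(f"{visible} byte(s) visible: {remaining} candidate seed(s) remain")
```

Run it and watch the candidate count fall as more bytes are exposed. The real attack works on elliptic curve points rather than hashes, but the economics are the same: more cleartext output, less guessing.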

Red faces all round.

March 06, 2014

Eat this, Bitcoin -- Ricardo now has cloud!

Ricardo is now cloud-enabled.

Which I hasten to add, is not the same thing as cloud-based, if your head is that lofty. Not the same thing, at all, no sir, feet firmly placed on planet earth!

Here's the story. Apologies in advance for this self-indulgent rant, but if you are not a financial cryptographer, the following will appear to be just a lot of mumbo jumbo and your time is probably better spent elsewhere... With that warning, let's get our head up in the clouds for a while.

Ricardo is a client-server construction, much like a web arrangement, and like Bitcoin in that the client is in charge. The client is of course vulnerable to loss/theft, so a backup of some form is required. Much analysis revealed that backup had to be complete, it had to be off-client, and also system provided.

That work has now taken shape and is delivering backups in bench-conditions. The client can back up its entire database into a server's database using the same point-to-point security protocol and the same mechanics as the rest of the model. The client also now has a complete encrypted object database using ChaCha20 as the stream cipher and Poly1305 as the object-level authentication layer. This gets arranged into a single secured stream which is then uploaded dynamically to the server. The latter offers a service that allows a stream to be built up over time.

Consider how a client works: Do a task? Make a payment? Generate a transaction!

Remembering always it's only a transaction when it is indeed transacted, this means that the transaction has to be recorded into the database. Our little-database-that-could now streams that transaction onto the end of its log, which is now stream-encrypted, and a separate thread follows the appends and uploads additions to the server. (Just for those who are trying to see how this works in a SQL context, it doesn't. It's not a SQL database, it follows the transaction-log-is-the-database paradigm, and in that sense, it is already stream oriented.)
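For those who have not met the transaction-log-is-the-database idea before, a minimal sketch in Python follows. It is not Ricardo's code: the `TransactionLog` class, the JSON record layout and the field names are hypothetical, and encryption is left out entirely. It only shows that the log is the single source of truth and the current state is whatever you get by replaying it.

```python
import json
from collections import defaultdict


class TransactionLog:
    """Append-only log; current state is derived by replaying every record."""

    def __init__(self) -> None:
        self._records: list[bytes] = []

    def append(self, payer: str, payee: str, amount: int) -> None:
        # Records are only ever added at the end, never edited in place.
        record = json.dumps({"from": payer, "to": payee, "amount": amount})
        self._records.append(record.encode("utf-8"))

    def replay(self) -> dict[str, int]:
        """Rebuild balances from scratch by walking the log in order."""
        balances: dict[str, int] = defaultdict(int)
        for raw in self._records:
            tx = json.loads(raw)
            balances[tx["from"]] -= tx["amount"]
            balances[tx["to"]] += tx["amount"]
        return dict(balances)


if __name__ == "__main__":
    log = TransactionLog()
    log.append("alice", "bob", 10)
    log.append("bob", "carol", 4)
    print(log.replay())
```

Because nothing is ever rewritten, shipping the log somewhere else is just a matter of sending whatever was appended since last time, which is what makes the design stream oriented.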

In order to prove this client-to-server and beginning to end, there is a hash confirmation over the local stream and over the server's file. When they match, we're golden. It is not a perfect backup because the backup trails by some amount of seconds; it is not therefore /transactional/. People following the latency debate over Bitcoin will find that amusing, but I think this is possibly a step too far in our current development; a backup that is latent to a minute or so is probably OK for now, and I'm not sure if we want to try transactional replication on phone users.
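The hash confirmation and the trailing nature of the backup can be sketched like so. This is an illustration under assumptions, not the real thing: `TrailingBackup` is a hypothetical name, the server is faked with an in-memory buffer, SHA-256 is my choice of hash, and the uploader is called by hand rather than running in its own thread.

```python
import hashlib


class TrailingBackup:
    """Local append-only stream plus a server copy that lags behind it."""

    def __init__(self) -> None:
        self.local = bytearray()   # the client's stream
        self.remote = bytearray()  # stand-in for the server's file
        self.uploaded = 0          # count of local bytes the server already holds

    def append(self, data: bytes) -> None:
        self.local += data

    def upload_pending(self) -> int:
        """Send only the bytes appended since the last upload."""
        pending = bytes(self.local[self.uploaded:])
        self.remote += pending
        self.uploaded += len(pending)
        return len(pending)

    def confirmed(self) -> bool:
        """True when the hash over the local stream matches the server's."""
        local_hash = hashlib.sha256(self.local).digest()
        remote_hash = hashlib.sha256(self.remote).digest()
        return local_hash == remote_hash


if __name__ == "__main__":
    backup = TrailingBackup()
    backup.append(b"tx-1;")
    print("before upload:", backup.confirmed())
    backup.upload_pending()
    print("after upload: ", backup.confirmed())
```

Between an append and the next upload the hashes disagree, which is the window in which the backup is latent rather than transactional.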

This is a big deal for many reasons. One is that it was a quite massive project, and it brought our tiny startup to a complete standstill on the technical front. I've done nothing but hack for about 3 months now, which makes it a more difficult project than say rewriting the entire crypto suite.

Second is the reasoning behind it. Our client side asset management software is now going to be used in a quite contrary fashion to our earlier design anticipations. It is going to manage the entire asset base of what is in effect a financial institution (FI), or thousands of them. Yet, it's going to live on a bog-standard Android phone, probably in the handbag of the Treasurer as she roves around the city from home to work and other places.

Can you see where this is going? Loss, theft, software failure, etc. We live in one of the most crime ridden cities on the planet, and therefore we have to consider that the FI's entire book of business can be stolen at any time. And we need to get the Treasurer up and going with a new phone in short order, because her customers demand it.

Add in some discussions about complexity, and transactions, and social networking in the app, etc etc and we can also see pretty easily that just saving the private keys will not cut the mustard. We need the entire state of the phone to be saved, and recovered, on demand.

But wait, you say! Of course the solution is cloud, why ever not?

No, because, cloud is insecure. Totally. Any FI that stores their customer transactions in the cloud is in a state of sin, and indeed it is in some countries illegal to even consider it. Further, even if the cloud is locally run by the institution, internally, this exposes the FI and the poor long suffering customer to fantastic opportunities for insider fraud. What I failed to mention earlier is that my user base considers corruption to be a daily event, and is exposed to frauds continually, including from their FIs. Which is why Ricardo fills the gap.

When it comes to insider fraud, cloud is the same as fog. Add in corruption and it's now smog. So, cloud is totally out, or, cloud just means you're being robbed blind like you always were, so there is no new offering here. Following the sense of Digicash from 2 decades earlier, and perhaps Bitcoin these days, we set the requirement: The server or center should not be able to forge transactions. This is a long-standing requirement (insert digression here into end-to-end evidence and authentication designs leading to triple entry and the Ricardian Contract, and/or recent cases backing FIs doing the wrong thing).

To bring these two contradictions together however was tricky. To resolve, I needed to use a now time-honoured technique theorised by the capabilities school, and popularised by amongst others Pelle's original document service called wideword.net and Zooko's Tahoe-LAFS: the data that is uploaded over UDP is encrypted to keys only known to the clients.

And that is what happens. As my client software database spits out data in an append-only stream (that's how all safe databases work, right??) it stream-encrypts this and then sends the stream up to the server. So the server simply has to offer something similar to the Unix file metaphor: create, read, write, delete *and append*. Add in a hash feature to confirm, and we're set. (It's similar enough to REST/CRUD that it's worth a mention, but different enough to warrant a disclaimer.)
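The server side of that file metaphor is small enough to sketch. What follows is an in-memory stand-in in Python, not the actual service: `StreamStore`, its method names and the use of SHA-256 are assumptions for illustration. Note that the store only ever handles opaque bytes; in the design described above those bytes would already be encrypted by the client to keys the server never sees.

```python
import hashlib


class StreamStore:
    """Toy server offering create, read, write, delete, append and hash."""

    def __init__(self) -> None:
        self._files: dict[str, bytearray] = {}

    def create(self, name: str) -> None:
        if name in self._files:
            raise FileExistsError(name)
        self._files[name] = bytearray()

    def write(self, name: str, data: bytes) -> None:
        self._files[name]  # raises KeyError if the stream does not exist
        self._files[name] = bytearray(data)

    def append(self, name: str, data: bytes) -> None:
        # The one operation plain CRUD lacks: grow the stream at its end.
        self._files[name] += data

    def read(self, name: str, offset: int = 0) -> bytes:
        return bytes(self._files[name][offset:])

    def delete(self, name: str) -> None:
        del self._files[name]

    def hash(self, name: str) -> str:
        """Digest the client can compare against its own local stream."""
        return hashlib.sha256(self._files[name]).hexdigest()


if __name__ == "__main__":
    store = StreamStore()
    store.create("treasurer-log")
    store.append("treasurer-log", b"<ciphertext chunk 1>")
    store.append("treasurer-log", b"<ciphertext chunk 2>")
    print(store.hash("treasurer-log"))
```

The server can refuse service or lose the data, but with only ciphertext in hand it has nothing to read and nothing it can meaningfully forge.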

A third reason this is a big deal is because the rules of the game have changed. In the 1990s we were assuming a technically savvy audience, ones who could manage public keys and backups. The PGP generation, if you like. Now, we're assuming none of that. The thing has to work, and it has to keep working, regardless of user foibles. This is the Apple-Facebook generation.

This benchmark also shines adverse light on Bitcoin. That community struggles to deal with theft, lurching from hack to bug to bankruptcy. As a result of their obsession over The Number One Criminal (aka the government) and with avoiding control at the center, they are blinded to the costly reality of criminals 2 through 100. If Bitcoin hypothetically were to establish the user-friendly goal that they can keep going in the face of normal 2010s user demands and failure modes, it'd be game over. They basically have to handwave stuff away as 'user responsibility' but that doesn't work any more. The rules of the game have changed, we're not in the 1990s anymore, and a comprehensive solution is required.

Finally, once you can do things like cloud, it opens up the possibilities for whole new features and endeavours. That of course is what makes cloud so exciting for big corporates -- to be able to deliver great service and features to customers. I've already got a list of enhancements we can now start to put in, and the only limitation I have now is capital to pay the hackers. We really are at the cusp of a new generation of payment systems; crypto-plumbing is fun again!

January 30, 2014

Hard Truths about the Hard Business of finding Hard Random Numbers

Editorial note: this rant was originally posted here but has now moved to a permanent home where it will be updated with new thoughts.

As many have noticed, there is now a permathread (Paul's term) on how to do random numbers. It's always been warm. Now the arguments are on solid simmer, raging on half a dozen cryptogroups, all thanks to the NSA and their infamous breach of NIST, American industry, mom's apple pie and the privacy of all things from Sunday school to Angry Birds.

Why is the topic of random numbers so bubbling, effervescent, unsatisfying? In short, because generators of same (RNGs), are *hard*. They are in practical experience trickier than most of the other modules we deal with: ciphers, HMACs, public key, protocols, etc.

Yet, we have come a long way. We now have a working theory. When Ada put together her RNG this last summer, it wasn't that hard. Out of our experience, herein is a collection of things we figured out; with the normal caveat that, even as RNs require stirring, the recipe for 'knowing' is also evolving.

- Use what your platform provides. Random numbers are hard, which is the first thing you have to remember, and always come back to. Random numbers are so hard, that you have to care a lot before you get involved. A hell of a lot. Which leads us to the following rules of thumb for RNG production.

- Use what your platform provides.

- Unless you really really care a lot, in which case, you have to write your own RNG.

- There isn't a lot of middle ground.

- So much so that for almost all purposes, and almost all users, Rule #1 is this: Use what your platform provides.

- When deciding to breach Rule #1, you need a compelling argument that your RNG delivers better results than the platform's. Without that compelling argument, your results are likely to be more random than the platform's system in every sense except the quality of the numbers.

- Software is our domain.

- Software is unreliable. It can be made reliable under bench conditions, but out in the field, any software of more than 1 component (always) has opportunities for failure. In practice, we're usually talking dozens or hundreds, so failure of another component is a solid possibility; a real threat.

- What about hardware RNGs? Eventually they have to go through some software, to be of any use. Although there are some narrow environments where there might be a pure hardware delivery, this is so exotic, and so alien to the reader here, that there is no point in considering it. Hardware serves software. Get used to it.

- As a practical reliability approach, we typically model every component as failing, and try and organise our design to carry on.

- Security is also our domain, which is to say we have real live attackers.

- Many of the sciences rest on a statistical model, which they can do in absence of any attackers. According to Bernoulli's law of big numbers, models of data will even out over time and quantity. In essence, we then can use statistics to derive strong predictions. If random numbers followed the law of big numbers, then measuring 1000 of them would tell us with near certainty that the machine was good for another 1000.

- In security, we live in a byzantine world, which means we have real live attackers who will turn our assumptions upside down, out of spite. When an attacker is trying to aggressively futz with your business, he will also futz with any assumptions and with any tests or protections you have that are based on those assumptions. Once attackers start getting their claws and bits in there, the assumption behind Bernoulli's law falls apart. In essence this rules out lazy reliance on statistics.
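A small Python sketch shows how little a statistical test tells you once an attacker is in the picture. The names `predictable_bytes` and `looks_random` are made up for this illustration, and the test is a crude count of one-bits, far simpler than any real test suite. The platform source (`os.urandom`), a hash-of-counter stream whose seed the attacker knows, and a constant `0x55` pattern all pass it.

```python
import hashlib
import os


def predictable_bytes(seed: bytes, n: int) -> bytes:
    """Hash-of-counter stream: anyone who knows the seed can reproduce it."""
    out = bytearray()
    counter = 0
    while len(out) < n:
        out += hashlib.sha256(seed + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:n])


def ones_fraction(data: bytes) -> float:
    """Fraction of bits in data that are set to 1."""
    ones = sum(bin(byte).count("1") for byte in data)
    return ones / (8 * len(data))


def looks_random(data: bytes, tolerance: float = 0.01) -> bool:
    """Crude frequency test: pass if about half of the bits are 1."""
    return abs(ones_fraction(data) - 0.5) < tolerance


if __name__ == "__main__":
    n = 100_000
    print("platform RNG passes:   ", looks_random(os.urandom(n)))
    print("known-seed stream passes:", looks_random(predictable_bytes(b"known", n)))
    print("constant 0x55 passes:  ", looks_random(b"\x55" * n))
```

The test measures the shape of the numbers, not who else knows them. A better test would catch the constant pattern, but no test of the output alone can catch the known seed.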