June 12, 2016

Where is the Contract? - a short history of the contract in Financial Cryptography systems

(Editor's note: Dates are approximate. Written in May of 2014 as an educational presentation to lawyers, notes forgotten until now. Freshened 2016.09.11.)

Where is the contract? This is a question that has bemused the legal fraternity, bewitched the regulator, and sent the tech community down the proverbial garden path. Let's track it down.

Within the financial cryptography community, we have seen the discussion of contracts in approximately these ways:

- Smart Contracts, as performance machines with money,

- Ricardian Contract, which captures the writings of an agreement,

- Compositions: of elements such as the "offer and acceptance" agreement into a Russian Doll Contracts pattern, or of clause-code pairs, or of split contract constructions.

Let's look at each in turn.

a. Performance

a(i) Nick Szabo theorised the notion of smart contracts as far back as 1994. His design postulated the ability of our emerging financial cryptography technology to automate the performance of human agreements within computer programs that also handled money. That is, they are computer programs that manage performance of a contract with little or no human intervention.

At an analogous level at least, smart contracts are all around. So much of the performance of contracts is now built into the online services of corporations that we can't even count them anymore. Yet these corporate engines of performance were written once then left running forever, whereas Szabo's notion went a step further: he suggested smart contracts as more of a general service to everyone: your contractual-programmer wrote the smart contract and then plugged it into the stack, or the service or the cloud. Users would then come along and interact with this machine, to get services.

a(ii). Bitcoin. In 2009 Bitcoin deployed a limited form of Smart Contracts in an open service or cloud setting called the blockchain. This capability was almost a side-effect of a versatile payments transaction of smart contracts. After author Satoshi Nakamoto left, the power of smart contracts was reduced in scope somewhat due to security concerns.

To date, success has been limited to simple uses such as Multisig which provides a separation of concerns governance pattern by allowing multiple signers to release funds.

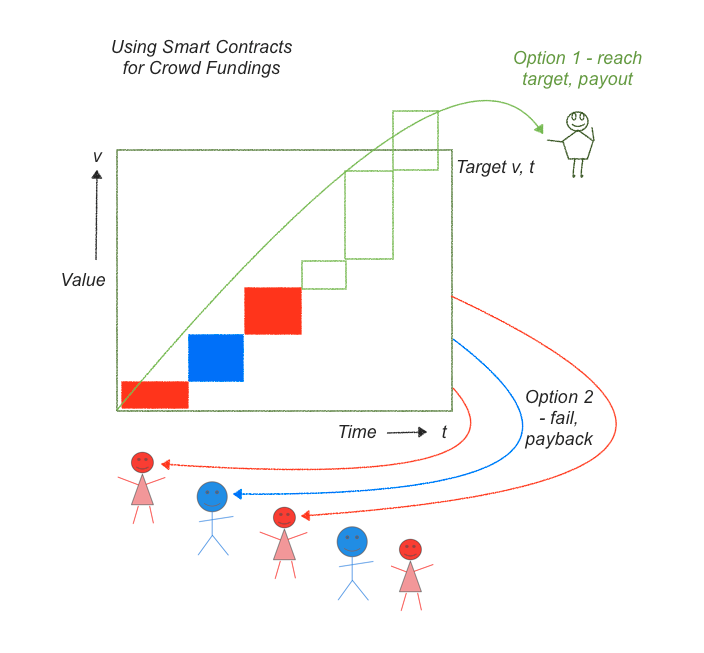

If we look at the above graphic we can see a fairly complicated story that we can now reduce into one smart contract. In a crowd funding, a person will propose a project. Many people will contribute to a pot of money for that project until a particular date. At that date, we have to decide whether the pot of money is enough to properly fund the project and if so, send over the funds. If not, return the funds.

To code this up, the smart contract has to do these steps:

- describe the project, including a target value v and a strike date t.

- collect and protect contributions (red, blue, green boxes)

- on the strike date /t/, count the total, and decide on option 1 or 2:

- if the contributions reach the target v, pay all over to owner (green arc), else

- if the contributions do not reach the target v, pay them all back to funders (red and blue arcs).
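The steps above can be sketched as a small state machine. This is a toy model in plain Python, not Bitcoin script or Ethereum code; the class name `Crowdfund`, its fields, and the integer clock are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Crowdfund:
    """Toy model of the crowdfunding contract (hypothetical names throughout)."""
    description: str
    owner: str
    target: int        # target value v, in smallest currency units
    strike_date: int   # strike date t, as a simple integer clock
    contributions: dict = field(default_factory=dict)
    settled: bool = False

    def contribute(self, funder: str, amount: int, now: int) -> None:
        # Collect and protect contributions until the strike date.
        if self.settled or now >= self.strike_date:
            raise ValueError("funding period is closed")
        if amount <= 0:
            raise ValueError("contribution must be positive")
        self.contributions[funder] = self.contributions.get(funder, 0) + amount

    def settle(self, now: int) -> dict:
        # On the strike date, count the total and pick one of the two options.
        if now < self.strike_date:
            raise ValueError("strike date not reached")
        if self.settled:
            raise ValueError("already settled")
        self.settled = True
        total = sum(self.contributions.values())
        if total >= self.target:
            return {self.owner: total}      # option 1: pay all to the owner
        return dict(self.contributions)     # option 2: refund every funder
```

The return value of `settle` is a map of payee to amount, which stands in for the green arc (owner paid) or the red and blue arcs (funders refunded).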

A new service called Lighthouse now offers crowdfunding but keep your eyes open for crowdfunding in Ethereum as their smart contracts are more powerful.

b. Writings of the Contract

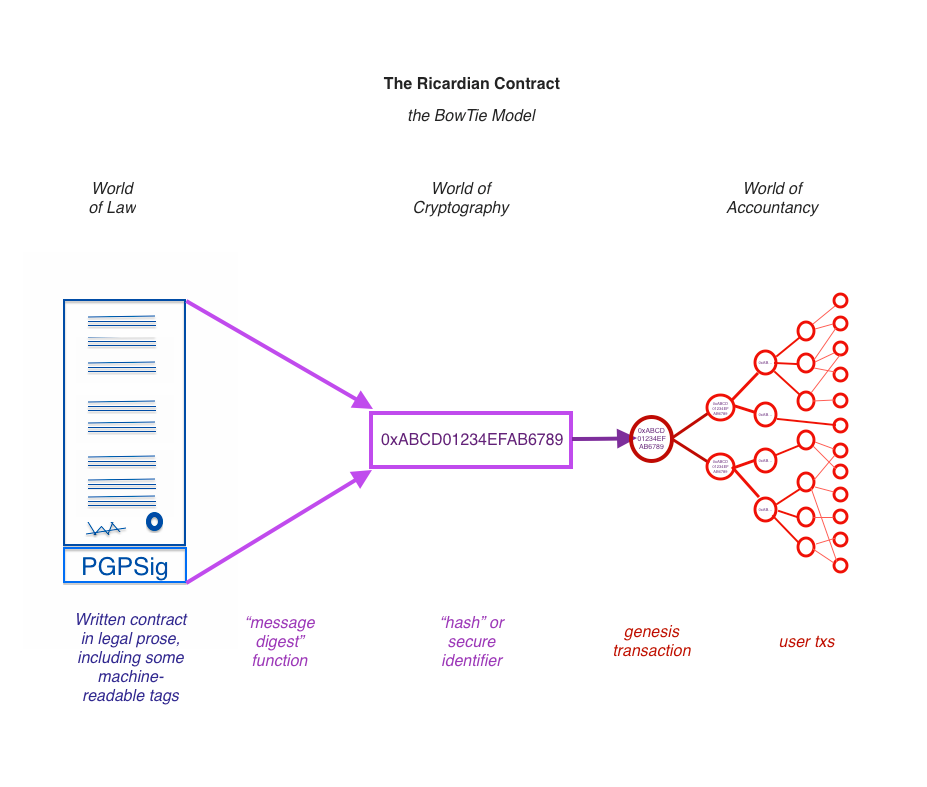

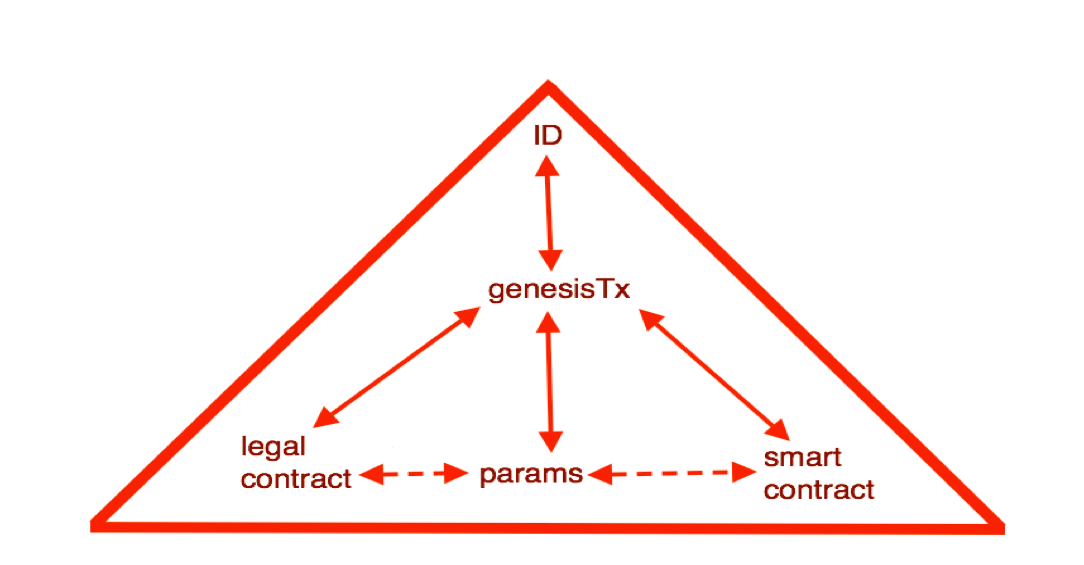

Back in 1996, as part of a startup doing bond trading on the net, I created a method to bring a classical 'paper' contract into touch with a digital accounting system such as cryptocurrencies. The form, which became known as the Ricardian Contract, was readily usable for anything that you could put into a written contract, beyond its original notion of bonds.

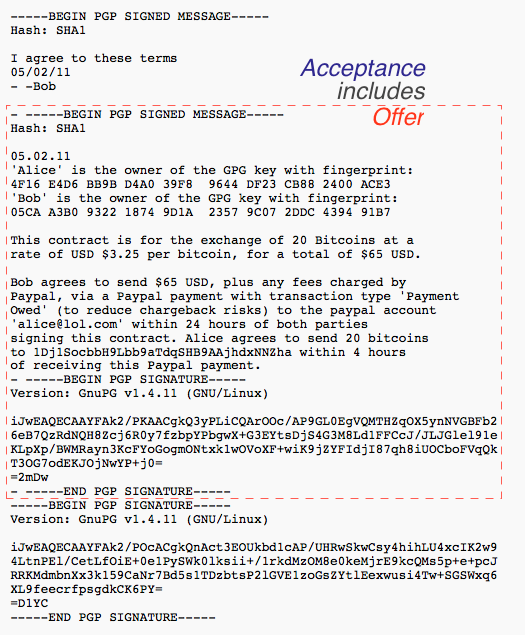

In short: write a standard contract such as a bond. Insert some machine-readable tags that would include parties, amounts, dates, etc that the program also needed to display. Then sign the document using a cleartext digital signature, one that preserves the essence as a human-readable contract. OpenPGP works well for that. This document can be seen on the left of this bow-tie diagram.

Then - hash the document using a cryptographic message digest function that creates a one-for-one identifier for the contract, as seen in the middle. Put this identifier into every transaction to lock in which instrument we're paying back and forth. As the transactions start from one genesis transaction and then fan out to many transactions, all of them including the Ricardian hash, with many users, this is shown in the right hand part of the bow-tie.
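A minimal sketch of the middle of the bow-tie, assuming SHA-256 as the message digest (the text only asks for a cryptographic message digest) and a made-up dictionary layout for transactions. The function names are hypothetical.

```python
import hashlib


def contract_id(signed_document: bytes) -> str:
    """Hash the signed, human-readable contract to get its identifier."""
    return hashlib.sha256(signed_document).hexdigest()


def make_transaction(contract: str, payer: str, payee: str, amount: int) -> dict:
    """Every transaction carries the contract identifier of its instrument."""
    return {"contract": contract, "from": payer, "to": payee, "amount": amount}


def refers_to(transaction: dict, signed_document: bytes) -> bool:
    """Check that a transaction is locked to exactly this contract text."""
    return transaction["contract"] == contract_id(signed_document)
```

Anyone holding the signed document can recompute the hash and confirm which instrument a payment is in; changing a single character of the contract yields a different identifier.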

See 2004 paper and wikipedia page on the Ricardian contract. We have then a contract form that is readable by person and machine, and can be locked into every transaction - from the genesis transaction, value trickles out to all others.

The Ricardian Contract is now emerging in the Bitcoin world. Enough businesses are looking at the possibilities of doing settlement and are discovering what I found in 1996 - we need a secure way to lock tangible writings of a contract on to the blockchain. A highlight might be NASDAQ's recent announcements, and Coinprism's recent work with OpenAssets project [1, 2, 3], and some of the 2nd generation projects have incorporated it without much fuss.

c. Composition

c(i). Around 2006 Chris Odom built OpenTransactions, a cryptocurrency system that extended Ricardian Contract beyond issuance. The author found:

"While these contracts are simply signed-XML files, they can be nested like russian dolls, and they have turned out to become the critical building block of the entire Open Transactions library. Most objects in the library are derived, somehow, from OTContract. The messages are contracts. The datafiles are contracts. The ledgers are contracts. The payment plans are contracts. The markets and trades are all contracts. Etc.I originally implemented contracts solely for the issuing, but they have really turned out to have become central to everything else in the library."

In effect Chris Odom built an agent-based system using the Ricardian Contract to communicate all its parameters and messages within and between its agents. He also experimented with Smart Contracts, but I think they were a server-upload model.

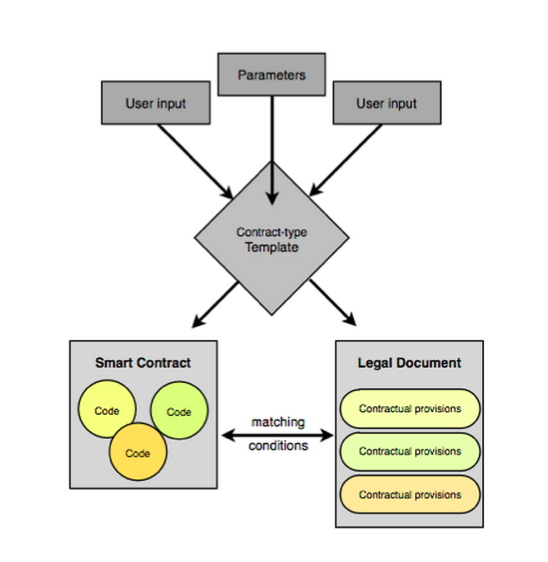

c(ii). CommonAccord constructs small units containing matching smart code and prose clauses, and then composes these into full contracts using the browser. Once composed, the result can be read, verified and hashed a la Ricardian Contracts, and performed a la smart contracts.

c(iii) Let's consider person to person trading. With face-to-face trades, the contract is easy. With mail order it is harder, as we have to identify each component, follow a journey, and keep the paperwork. With the Internet it is even worse because there is no paperwork, it's all pieces of digital data that might be displayed, might be changed, might be lost.

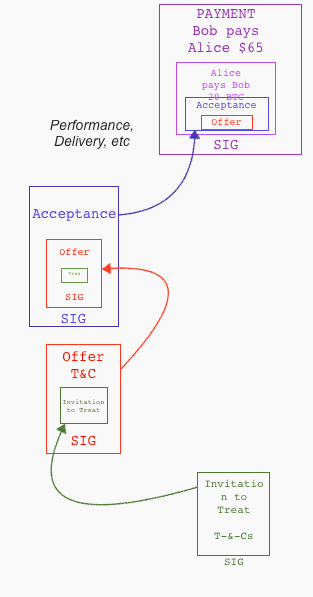

Shifting forward to 2014 and OpenBazaar decided to create a version of eBay or Amazon and put it onto the Bitcoin blockchain. To handle the formation of the contract between people distant and anonymous, they make each component into a Ricardian Contract, and place each one inside the succeeding component until we get to the end.

Let's review the elements of a contract in a cycle:

✓ Invitation to treat is found on blockchain similar to web page.

✓ offer by buyer

✓ acceptance by merchant

✓ (performance...)

✓ payment (multisig partner controls the money)

The Ricardian Contract finds itself as individual elements in the formation of the wider contract formation around a purchase. In each step, the prior step is included within the current contractual document. Like the lego blocks above, we can create a bigger contract by building on top of smaller components, thus implementing the trade cycle into Chris Odom's vision of Russian Dolls.
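The nesting of each step inside the next can be sketched as below. This is an illustration only: the JSON layout, the field names and the use of SHA-256 are assumptions, signatures are left out, and it is not OpenBazaar's or OpenTransactions' actual format.

```python
import hashlib
import json
from typing import Optional


def wrap(step: str, terms: dict, prior: Optional[dict] = None) -> dict:
    """Build one contractual document that contains the prior one whole."""
    return {"step": step, "terms": terms, "prior": prior}


def digest(doc: dict) -> str:
    """Hash a canonical JSON form, so the outer hash covers every inner doll."""
    canonical = json.dumps(doc, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def unwrap(doc: Optional[dict]) -> list:
    """Open the dolls from the outside in, returning steps in trade order."""
    steps = []
    while doc is not None:
        steps.append(doc["step"])
        doc = doc["prior"]
    return list(reversed(steps))
```

For example, `wrap("acceptance", {...}, wrap("offer", {...}, wrap("invitation", {...})))` gives a single document whose hash changes if any earlier step is altered.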

Conclusion

In conclusion, the question of the moment was:

Where is the contract?

So far, as far as the technology field sees it, in three areas:

- as performance - the Smart Contract

- as writing - the Ricardian Contract

- as composition - elements packaged into Russian Dolls, clause-code pairs and convergence as split contracts.

I see the future as convergence of these primary ideas: the parts or views we call smart & legal contracts will complement each other and grow together, being combined as elements into fuller agreements between people.

For those who think nothing much has changed in the world of contracts for a century or more, I say this: We live in interesting times!

(Editor's reminder: Written in May of 2014, and the convergence notion fed straight into "The Sum of all Chains".)

June 28, 2015

The Nakamoto Signature

The Nakamoto Signature might be a thing. In 2014, the Sidechains whitepaper by Back et al introduced the term Dynamic Membership Multiple-party Signature or DMMS -- because we love complicated terms and long impassable acronyms.

Or maybe we don't. I can never recall DMMS nor even get it right without thinking through the words; in response to my cognitive poverty, Adam Back suggested we call it a Nakamoto signature.

That's actually about right in cryptology terms. When a new form of cryptography turns up and it lacks an easy name, it's very often called after its inventor. Famous companions to this tradition include RSA for Rivest, Shamir, Adleman; Schnorr for the name of the signature that Bitcoin wants to move to. Rijndael is our most popular secret key algorithm, from the inventors' names, although you might know it these days as AES. In the old days of blinded formulas to do untraceable cash, the frontrunners were signatures named after Chaum, Brands and Wagner.

On to the Nakamoto signature. Why is it useful to label it so?

Because, with this literary device, it is now much easier to talk about the blockchain. Watch this:

The blockchain is a shared ledger where each new block of transactions - the 10 minutes thing - is signed with a Nakamoto signature.

Less than 25 words! Outstanding! We can now separate this discussion into two things to understand: firstly: what's a shared ledger, and second: what's the Nakamoto signature?

Each can be covered as a separate topic. For example:

the shared ledger can be seen as a series of blocks, each of which is a single document presented for signature. Each block consists of a set of transactions built on the previous set. Each succeeding block changes the state of the accounts by moving money around; so given any particular state we can create the next block by filling it with transactions that do those money moves, and signing it with a Nakamoto signature.

Having described the shared ledger, we can now attack the Nakamoto signature:

A Nakamoto signature is a device to allow a group to agree on a shared document. To eliminate the potential for inconsistencies aka disagreement, the group engages in a lottery to pick one person's version as the one true document. That lottery is effected by all members of the group racing to create the longest hash over their copy of the document. The longest hash wins the prize and also becomes a verifiable 'token' of the one true document for members of the group: the Nakamoto signature.

That's it, in a nutshell. That's good enough for most people. Others however will want to open that nutshell up and go deeper into the hows, whys and whethers of it all. You'll note I left plenty of room for argument above; Economists will look at the incentive structure in the lottery, and ask if a prize in exchange for proof-of-work is enough to encourage an efficient agreement, even in the presence of attackers? Computer scientists will ask 'what happens if...' and search for ways to make it not so. Entrepreneurs might be more interested in what other documents can be signed this way. Cryptographers will pounce on that longest hash thing.
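For those who do want to open the nutshell, here is a rough sketch of the lottery. It assumes the usual reading of the "longest hash" shorthand, namely a hash that falls below a target (that is, one with enough leading zero bits), and it uses a single SHA-256 over the document plus a nonce. Real Bitcoin hashes a block header twice and adjusts the difficulty; the function names and parameters here are hypothetical.

```python
import hashlib


def hash_attempt(document: bytes, nonce: int) -> int:
    """One lottery ticket: hash the document together with a nonce."""
    data = document + nonce.to_bytes(8, "big")
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def mine(document: bytes, difficulty_bits: int, max_tries: int = 1_000_000):
    """Try nonces in order until the hash falls below the target, or give up."""
    target = 1 << (256 - difficulty_bits)
    for nonce in range(max_tries):
        if hash_attempt(document, nonce) < target:
            return nonce
    return None


def verify(document: bytes, nonce: int, difficulty_bits: int) -> bool:
    """Any group member can check the winning ticket with a single hash."""
    return hash_attempt(document, nonce) < (1 << (256 - difficulty_bits))
```

The asymmetry is the point: finding the nonce takes many attempts, while checking it takes one, so the winning nonce works as a verifiable token of the one true document.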

But for most of us we can now move on to the real work. We haven't got time for minutiae. The real joy of the Nakamoto signature is that it breaks what was one monolithic incomprehensible problem into two more understandable ones. Divide and conquer!

The Nakamoto signature needs to be a thing. Let it be so!

NB: This article was kindly commented on by Ada Lovelace and Adam Back.

June 17, 2015

Cash seizure is a thing - maybe this picture will convince you

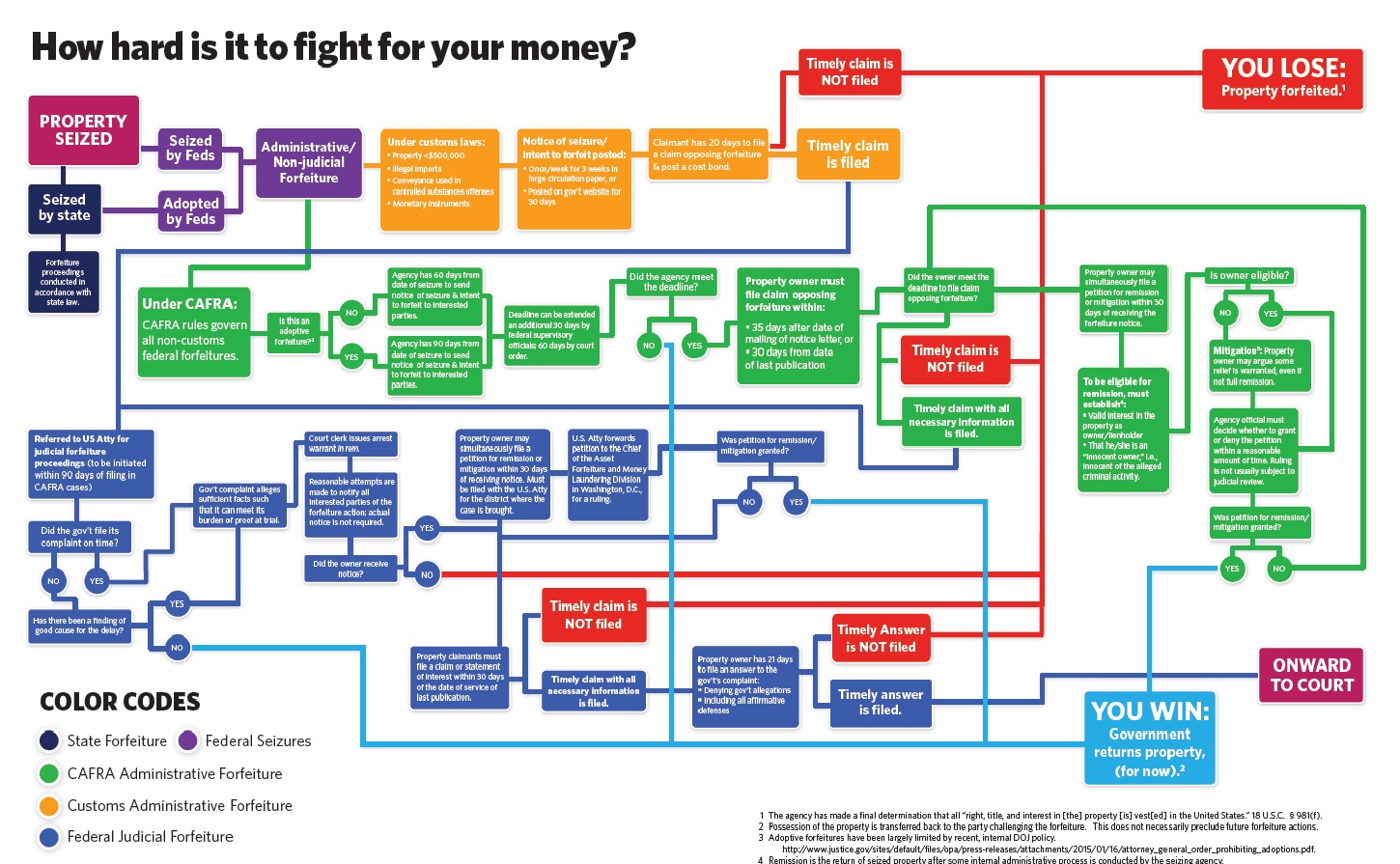

There are many many people who do not believe that the USA police seize cash from people and use it for budget. The system is set up for the benefit of police - budgetary plans are laid, you have no direct recourse to the law because it is the cash that defends itself, the proceeds are carved up.

Maybe this will convince you - if cash seizure by police wasn't a 'thing' we wouldn't need this chart:

May 12, 2015

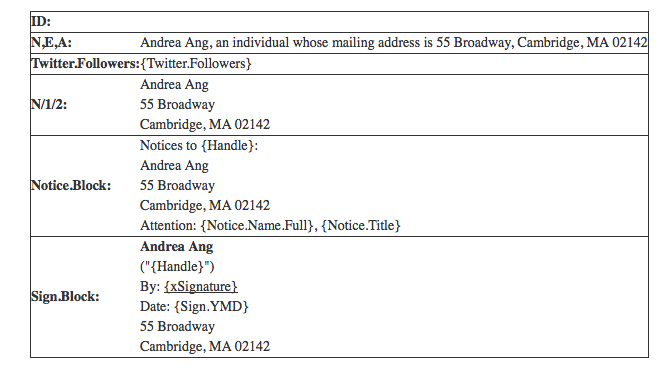

Using CommonAccord to build "First Class Persons"

Paraphrasing from James' Twitter storm response to The Sum of All Chains:

A way to organize similarity of "First Class Person" is to build them from objects. Andrea -> ID_She -> ID_Individual - ID

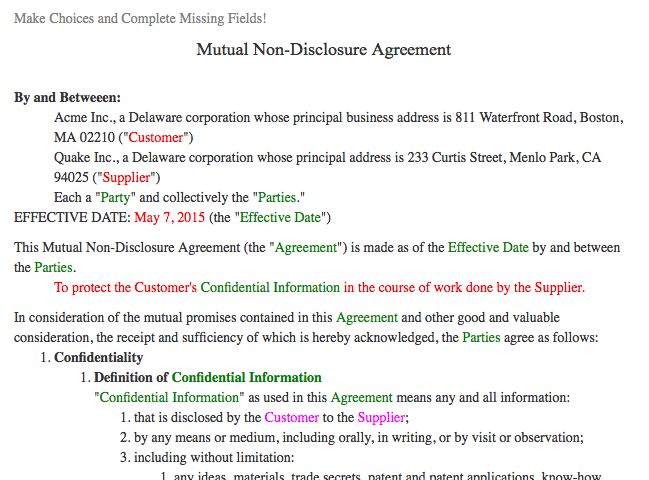

which permits an example such as an NDA: P1-> Acme -SIGN-> Andrea (in graph notation). The text is codified in layers as with CSS, as per an example template including work by #CooleyGo and @Emperor_Chan, and stored in GitHub with history. The proof of the transaction is k=v pairs on the blockchain. The proof of the boilerplate is either on chain or off, as you wish.

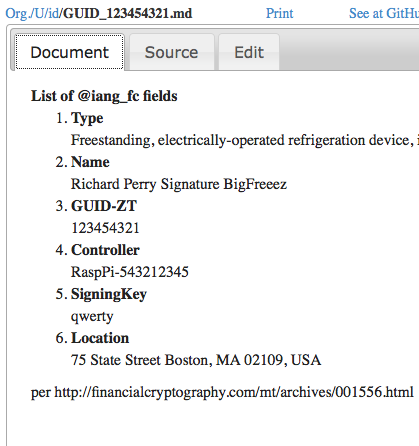

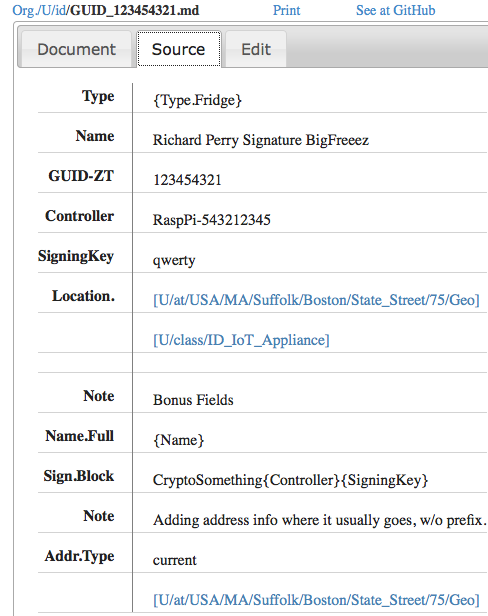

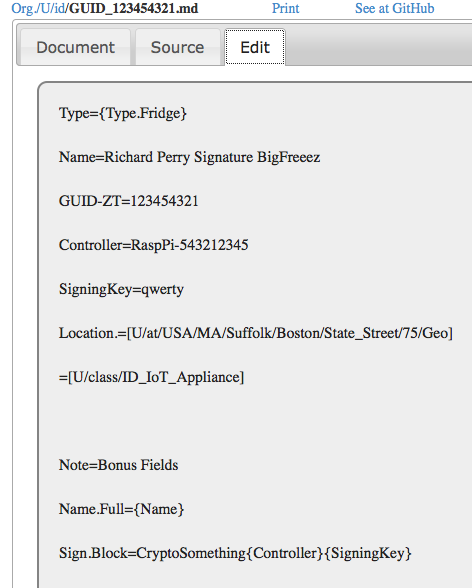

If corporations have rights, maybe your fridge should assert some too? Here below are the three tabs of the CommonAccord interface that in this case is asserting the identity of an Internet of Things device (ID-IoT).

Which leaves open - how to manage identity?

October 19, 2009

Denial of Service is the greatest bug of most security systems

I've had a rather troubling rash of blog comment failure recently. Not on FC, which seems to be ok ("to me"), but everywhere else. At about 4 in the last couple of days I'm starting to get annoyed. I like to think that my time in writing blog comments for other blogs is valuable, and sometimes I think for many minutes about the best way to bring a point home.

But more than half the time, my comment is rejected. The problem is on the one hand overly sophisticated comment boxes that rely on exotica like javascript and SSO through some place or other ... and spam on the other hand.

These things have destroyed the credibility of the blog world. If you recall, there was a time when people used blogs for _conversations_. Now, most blogs are self-serving promotion tools. Trackbacks are dead, so the conversational reward is gone, and comments are slow. You have to be dedicated to want to follow a blog and put a comment on there, or stupid enough to think your comment matters, and you'll keep fighting the bl**dy javascript box.

The one case where I know clearly "it's not just me" is John Robb's blog. This was a *fantastic* blog where there was great conversation, until a year or two back. It went from dozens to a couple in one hit by turning on whatever flavour of the month was available in the blog system. I've not been able to comment there since, and I'm not alone.

This is denial of service. To all of us. And, this denial of service is the greatest evidence of the failure of Internet security. Yet, it is easy, theoretically easy to avoid. Here, it is avoided by the simplest of tricks, maybe one per month comes my way, but if I got spam like others get spam, I'd stop doing the blog. Again denial of service.

Over on CAcert.org's blog they recently implemented client certs. I'm not 100% convinced that this will eliminate comment spam, but I'm 99.9% convinced. And it is easy to use, and it also (more or less) eliminates that terrible thing called access control, which was delivering another denial of service: the people who could write weren't trusted to write, because the access control system said they had to be access-controlled. Gone, all gone.

According to the blog post on it:

The CAcert-Blog is now fully X509 enabled. From never visited the site before and using a named certificate you can, with one click (log in), register for the site and have author status ready to write your own contribution.

Sounds like a good idea, right? So why don't most people do this? Because they can't. Mostly they can't because they do not have a client certificate. And if they don't have one, there isn't any point in the site owner asking for it. Chicken & egg?

But actually there is another reason why people don't have a client certificate: it is because of all sorts of mumbo jumbo brought up by the SSL / PKIX people, chief amongst which is a claim that we need to know who you are before we can entrust you with a client certificate ... which I will now show to be a fallacy. The reason client certificates work is this:

If you only have a WoT unnamed certificate you can write your article and it will be spam controlled by the PR people (aka editors). If you had a contributor account and haven’t posted anything yet you have been downgraded to a subscriber (no comment or write a post access) with all the other spammers. The good news is once you log in with a certificate you get upgraded to the correct status just as if you’d registered.

We don't actually need to know who you are. We only need to know that you are not a spammer, and you are going to write a good article for us. Both of these are more or less an equivalent thing, if you think about it; they are a logical parallel to the CAPTCHA or turing test. And we can prove this easily and economically and efficiently: write an article, and you're in.

Or, in certificate terms, we don't need to know who you are, we only need to know you are the same person as last time, when you were good.
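The "same person as last time, when you were good" test can be sketched as a lookup keyed on the certificate itself rather than on any name inside it. This is an illustration under assumptions: the class `CommentGate` and its status labels are invented, SHA-256 over the certificate bytes is an arbitrary choice of fingerprint, and the TLS layer is assumed to have already checked that the client holds the matching private key. It is not the CAcert blog's code.

```python
import hashlib


class CommentGate:
    """Remember certificates by fingerprint; never ask who the holder is."""

    def __init__(self) -> None:
        self.status = {}  # fingerprint -> "moderated" or "trusted"

    @staticmethod
    def fingerprint(cert_der: bytes) -> str:
        # Identify the certificate by a hash of its encoded bytes.
        return hashlib.sha256(cert_der).hexdigest()

    def login(self, cert_der: bytes) -> str:
        # First visit: register on the spot, with posts held for an editor.
        fp = self.fingerprint(cert_der)
        self.status.setdefault(fp, "moderated")
        return fp

    def approve(self, fp: str) -> None:
        # An editor accepted an article: this certificate was "good".
        self.status[fp] = "trusted"

    def can_publish_directly(self, cert_der: bytes) -> bool:
        # Last-time-good-time: same certificate as before, and it behaved.
        return self.status.get(self.fingerprint(cert_der)) == "trusted"
```

A spammer can mint a fresh certificate at will, but a fresh certificate only ever lands in the moderated queue, so the name in it adds nothing to the decision.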

This works. It is an undeniable benefit:

There is no password authentication any more. The time taken to make sure both behaved reliably was not possible in the time the admins had available.

That's two more pluses right there: no admin de-spamming time lost to us and general society (when there were about 290 in the wordpress click-delete queue) and we get rid of those bl**dy passwords, so another denial of service killed.

Why isn't this more available? The problem comes down to an inherent belief that the above doesn't work. Which is of course a complete nonsense. 2 weeks later, zero comment spam, and I know this will carry on being reliable because the time taken to get a zero-name client certificate (free, it's just your time involved!) is well in excess of the trick required to comment on this blog.

No matter the *results*, because of the belief that "last-time-good-time" tests are not valuable, the feature of using client certs is not effectively available in the browser. That which I speak of here is so simple to code up it can actually be tricked from any website to happen (which is how CAs get it into your browser in the first place, some simple code that causes your browser to do it all). It is basically the creation of a certificate key pair within the browser, with a no-name in it. Commonly called the self-signed certificate or SSC, these things can be put into the browser in about 5 seconds, automatically, on startup or on absence or whenever. If you recall that aphorism:

There is only one mode, and it is secure.

And contrast it to SSL, we can see what went wrong: there is an *option* of using a client cert, which is a completely insane choice. The choice of making the client certificate optional within SSL is a decision not only to allow insecurity in the mode, but also a decision to promote insecurity, by practically eliminating the use of client certs (see the chicken & egg problem).

And this is where SSL and the PKIX deliver their greatest harm. It denies simple cryptographic security to a wide audience, in order to deliver ... something else, which it turns out isn't as secure as hoped because everyone selects the wrong option. The denial of service attack is dominating, it's at the level of 99% and beyond: how many blogs do you know that have trouble with comments? How many use SSL at all?

So next time someone asks you, why these effing passwords are causing so much grief in your support department, ask them why they haven't implemented client certs? Or, why the spam problem is draining your life and destroying your social network? Client certs solve that problem.

SSL security is like Bismarck's sausages: "making laws is like making sausages, you don't want to watch them being made." The difference is, at least Bismarck got a sausage!

Footnote: you're probably going to argue that SSCs will be adopted by the spammer's brigade once there is widespread use of this trick. Think for a minute before you post that comment, the answer is right there in front of your nose! Also you are probably going to mention all these other limitations of the solution. Think for another minute and consider this claim: almost all of the real limitations exist because the solution isn't much used. Again, chicken & egg, see "usage". Or maybe you'll argue that we don't need it now we have OpenID. That's specious, because we don't actually have OpenID as yet (some few do, not all) and also, the presence of one technology rarely argues against another being needed, only marketing argues like that.

August 06, 2008

_Electronic Signatures in Law_, Stephen Mason, 2007

Electronic signatures are now present in legal cases to the extent that while they remain novel, they are not without precedent. Just about every major legal code has formed a view in law on their use, and many industries have at least tried to incorporate them into vertical applications. It is then exceedingly necessary that there be an authoritative tome on the legal issues surrounding the topic.

Electronic Signatures in Law is such a book, and I'm now the proud owner of a copy of the recent 2007 second edition, autographed no less by the author, Stephen Mason. Consider this a review, although I'm unaccustomed to such. Like the book, this review is long: intro, stats, a description of the sections, my view of the old digsig dream, and finally 4 challenges I threw at the book to measure its paces. (Shorter reviews here.)

First the headlines: This is a book that is decidedly worth it if you are seriously in the narrow market indicated by the title. For those who are writing directives or legislation, architecting software of reliance, involved in the Certificate Authority business of some form, or likely to find themselves in a case or two, this could well be the essential book.

At £130 or so, I'd have to say that the Financial Cryptographer who is not working directly in the area will possibly find the book too much for mild Sunday afternoon reading, but if you have it, it does not dive so deeply and so legally that it is impenetrable to those of us without an LLB up our sleeves. For us on the technical side, there is welcome news: although the book does not cover all of the failings and bugs that exist in the use of PKI-style digital signatures, it covers the major issues. Perhaps more importantly, those bugs identified are more or less correctly handled, and the criticism is well-grounded in legal thinking that itself rests on centuries of tradition.

Raw stats: Published by Tottel publishing. ISBN 978-1-84592-425-6. At over 700 pages, it includes comprehensive indexes of statutory instruments, legislation and cases that run to 55 pages, by my count, and a further 10 pages on United Kingdom orders. As well, there are 54 pages on standards, correspondents, resources, glossary, followed by a 22 page index.

Description. Mason starts out with serious treatments on issues such as "what is a signature?" and "what forms a good signature?" These two hefty chapters (119 pages) are beyond comprehensive but not beyond comprehension. Although I knew that the signature was a (mere) mark of intent, and it is the intent which is the key, I was not aware of how far this simple subject could go. Mason cites case law where "Mum" can prove a will, where one person signs for another, where a usage of any name is still a good signature, and, of course, where apparent signatures are rejected due to irregularities, and others accepted regardless of irregularities.

Next, there is a fairly comprehensive (156 pages) review of country and region legal foundations, covering the major anglo countries, the European Union, and Germany in depth, with a chapter on International comparisons covering approaches, presumptions, liabilities and other complexities and a handful of other countries. Then, Mason covers electronic signatures comprehensively and then seeks to compare them to Parties and risks, liability, non-contractual issues, and evidence (230 pages). Finally, he wraps up with a discussion of digital signatures (42 pages) and data protection (12 pages).

Let me briefly summarise the financial cryptography view of the history of Digital Signatures: The concept of the digital signature had been around since the mid-1970s, firstly in the form of the writings by the public key infrastructure crowd, and secondly, popularised to a small geeky audience in the form of PGP in the early 1990s. However, deployment suffered as nobody could quite figure out the application. When the web hit in 1994, it created a wave that digital signatures were able to ride. To pour cold water on a grand fire-side story, RSA Laboratories managed to convince Netscape that (a) credit cards needed to be saved from the evil Mallory, (b) the RSA algorithm was critical to that need, and (c) certificates were the way to manage the keys required for RSA. Verisign was a business created by (friends of) RSA for that express purpose, and Netscape was happily impressed on the need to let other friends in. For a while everything was mom's apple pie, and we'll all be rich, as, alongside VeriSign and friends, business plans claiming that all citizens would need certificates for signing purposes were floated around Wall Street, and this would set Americans back $100 a pop.

Neither the fabulous b-plans nor the digital signing dream happened, but to the eternal surprise of the technologists, some legislatures put money down on the cryptographers' dream to render evidence and signing matters "simpler, please." The State of Utah led the way, but the politicians' dream is now more clearly seen in the European Directive on Electronic Signatures, and especially in the Germanic attitude that digital signatures are as strong by policy, as they are weak in implementation terms. Today, digital signatures are relegated to either tight vertical applications (e.g., Ricardian contracts), cryptographic protocol work (TLS-style key exchanges), or being unworkable misfits lumbered with the cross of law and the shackles of PKI. These latter embarrassments only survive in those areas where (a) governments have rolled out smart cards for identity on a national basis, and/or (b) governments have used industrial policy to get some of that certificate love to their dependencies.

In contrast to the above dream of digital signatures, attention really should be directed to the mere electronic signature, because they are much more in use than the cryptographic public key form, and arguably much more useful. Mason does that well, by showing how different forms are all acceptable (Chapter 10, or summarised here): Click-wrap, typing a name, PINs, email addresses, scanned manuscript signatures, and biometric forms are all contrasted against actual cases.

The digital signature, and especially the legal projects of many nations get criticised heavily. According to the cases cited, the European project of qualified certificates, with all its CAs, smart cards, infrastructure, liabilities, laws, and costs ad infinitum ... are just not needed. A PC, a word processor program and a scan of a hand signature should be fine for your ultimate document. Or, a typewritten name, or the words "signed!" Nowhere does this come out more clearly than the Chapter on Germany, where results deviate from the rest of the world.

Due to the German Government's continuing love affair with the digital signature, and the backfired-attempt by the EU to regularise the concept in the Electronic Signature Directives, digital and electronic signatures are guaranteed to provide for much confusion in the future. Germany especially mandated its courts to pursue the dream, with the result that most of the German case results deal with rejecting electronic submissions to courts if not attached with a qualified signature (6 of 8 cases listed in Chapter 7). The end result would be simple if Europeans could be trusted to use fax or paper, but consider this final case:

(h) Decision of the BGH (Federal Supreme Court, 'Bundesgerichtshof') dated 10 October 2006,...: A scanned manuscript signature is not sufficient to be qualified as 'in writing' under §130 VI ZPO if such a signature is printed on a document which is then sent by facsimile transmission. Referring to a prior decision, the court pointed out that it would have been sufficient if the scanned signature was implemented into a computer fax, or if a document was manually signed before being sent by facsimile transmission to court.

How deliciously Kafkaesque! And how much of a waste of time is being imposed on the poor, untrustworthy German lawyer. Mason's book takes on the task of documenting this confusion, and pointing some of the way forward. It is extraordinarily refreshing to find that the first two chapters, and over 100 pages, are devoted to simply describing signatures in law. It has been a frequent complaint that without an understanding of what a signature is, it is rather unlikely that any mathematical invention such as digsigs would come even close to mimicking it. And it didn't, as is seen in the 118-page romp through the act of signing:

What has been lost in the rush to enact legislation is the fact that the function of the signature is generally determined by the nature and content of the document to which it is affixed.

Which security people should have recognised as a red flag: we would generally not expect to use the same mechanism to protect things of wildly different values.

Finally, I found myself pondering these teasers:

Authenticate. I found myself wondering what the word "authenticate" really means, and from Mason's book, I was able to divine an answer: to make an act authentic. What then does "authentic" mean and what then is an "act"? Well, they are both defined as things in law: an "act" is something that has legal significance, and it is authentic if it is required by law and is done in the proper fashion. Which, I claim, is curiously different to whatever definition the technologists and security specialists use. OK, as a caveat, I am not the lawyer, so let's wait and see if I get the above right.

Burden of Liability. The second challenge was whether the burden of liability in signing has really shifted. As we may recall, one of the selling points of digital signatures was that once properly formed, they would enable a relying party to hold the signing party to account, something which was sometimes loosely but unreliably referred to as non-repudiation.

In legal terms, this would have shifted the burden of proof and liability from the recipient to the signer, and was thought by the technologists to be a useful thing for business. Hence, a selling point, especially to big companies and banks! Unfortunately the technologists didn't understand that burden and liability are topics of law, not technology, and for all sorts of reasons it was a bad idea. See that rant elsewhere. Still, undaunted, laws and contracts were written on the advice of technologists to shift the liability. As Mason puts it (M9.27 pp270):

For obvious reasons, the liability of the recipient is shaped by the warp and weft of political and commercial obstructionism. Often, a recipient has no precise rights or obligations, but attempts are made using obscure methods to impose quasi-contractual duties that are virtually impossible to comply with. Neither governments nor commercial certification authorities wish to make explicit what they seek to achieve implicitly: that is, to cause the recipient to become a verifying party, with all the responsibilities that such a role implies....

So how successful was the attempt to shift the liability / burden in law? Mason surveys this question in several ways: presumptions, duties, and liabilities directly. For a presumption that the sender was the named party in the signature, 6 countries said yes (Israel, Japan, Argentina, Dubai, Korea, Singapore) and one said no (Australia) (M9.18 pp265). Britain used statutory instruments to give a presumption to herself, the Crown only, that the citizen was the sender (M9.27 pp270). Others were silent, which I judge an effective absence of a presumption, and a majority for no presumption.

Another important selling point was whether the CA took on any especial presumption of correctness: the best efforts seen here were that CAs were generally protected from any liability unless shown to have acted improperly, which somewhat undermines the entire concept of a trusted third party.

How then are a signer and recipient to share the liability? Australia states quite clearly that the signing party is only considered to have signed, if she signed. That is, she can simply state that she did not sign, and the burden falls on the relying party to show she did. This is simply the restatement of the principle in the English common law; and in effect states that digital signatures may be used, but they are not any more effective than others. Then, the liability is exactly as before: it is up to the relying party to check beforehand, to the extent reasonable. Other countries say that reliance is reasonable, if the relying party checks. But this is practically a null statement, as not only is it already the case, it is the common-sense situation of caveat emptor deriving from Roman times.

Although murky, I would conclude that the liability and burden for reliance on a signature is not shifted in the electronic domain, or at least governments seem to have held back from legislating any shift. In general, it remains firmly with the recipient of the signature. The best it gets in shiftyville is the British Government's bounty, which awards its citizens the special privilege of paying for their Government's blind blundering; same as it ever was. What most governments have done is a lot of hand-waving, while permitting CAs to utilise contract arrangements to put the parties in the position of doing the necessary due diligence. Again, same as it ever was, and decidedly no benefit or joy for the relying party is seen anywhere. This is no more than the normal private right to a contract or arrangement, and no new law nor regulation was needed for that.

Digital Signing, finally, for real! The final challenge remains a work-in-progress: to construct some way to use digital signatures in a signing protocol. That is, use them to sign documents, or, in other words, what they were sold for in the first place. You might be forgiven for wondering if the hot summer sun has reached my head, but we have to recall that most of the useful software out there does not take OpenPGP, rather it takes PKI and x.509 style certificate cryptographic keys and certificates. Some of these things offer to do things called signing, but there remains a challenge to make these features safe enough to be recommended to users. For example, my Thunderbird now puts a digital signature on my emails, but nobody, not it, not Mozilla, not CAcert, not anyone can tell me what my liability is.

To address this need, I consulted the first two chapters, which lay out what a signature is, and by implication what signing is. Signing is the act of showing intent to give legal effect to a document; signatures are a token of that intention, recorded in the act of signing. In order, then, to use digital certificates in signing, we need to show a user's intent. Unfortunately, certificates cannot do that, as is repeatedly described in the book: mostly because they are applied by the software agent in a way mysterious and impenetrable to the user.

Of course, the answer to my question is not clearly laid out, but the foundations are there: create a private contract and/or arrangement between the parties, indicate clearly the difference between a signed and unsigned document, and add the digital signature around the document for its cryptographic properties (primarily integrity protection and confirmation of source).

The two chapters lay out the story for how to indicate intention in the English common law: it is simple enough to add the name, and the intention to sign, manually. No pen and ink is needed, nor more mathematics than that of ASCII, as long as the intention is clear. Hence, it suffices for me to write something like signed, iang at the bottom of my document. As the English common law will accept the addition of merely one's name as a signature, and the PKI school has hope that digital signatures can be used as legal signatures, it follows that both are required to be safe and clear in all circumstances. For the champions of either school, the other method seems like a reduction to futility, as neither seems adequate nor meaningful, but the combination may ease the transition for those who can't appreciate the other language.

Finally, I should close with a final thought: how does the book affect my notions as described in the Ricardian Contract, still one of the very few strong and clear designs in digital signing? I am happy to say that not much has changed, and if anything Mason's book confirms that the Ricardo designs were solid. Although, if I was upgrading the design, I would add the above logic. That is, as the digital signature remains impenetrable to the court, it behoves us to add the words seen below somewhere in the contract. Hence, no more than a field name-change, the tiniest tweak only, is indicated:

Signed By: Ivan

June 30, 2008

Cross-border Notarisations and Digital Signatures

My notes of a presentation by Dr Ugo Bechini at the Int. Conf. on Digital Evidence, London. As it touches on many chords, I've typed it up for the blog:

The European or Civil Law Notary is a powerful agent in commerce in the civil law countries, providing a trusted control of a high value transaction. Often, this check is in the form of an Apostille which is (loosely) a stamp by the Notary on an official document that asserts that the document is indeed official. Although it sounds simple, and similar to common law Notaries Public, behind the simple signature is a weighty process that may be used for real estate, wills, etc.

It works, and as Eliana Morandi puts it, writing in the 2007 edition of the Digital Evidence and Electronic Signature Law Review:

Clear evidence of these risks can be seen in the very rapid escalation, in common law countries, of criminal phenomena that are almost unheard of in civil law countries, at least in the sectors where notaries are involved. The phenomena related to mortgage fraud is particularly important, which the Mortgage Bankers Association estimates to have caused the American system losses of 2.5 trillion dollars in 2005.

OK, so that latter number came from Choicepoint's "research" (referenced somewhere here) but we can probably agree that the grains of truth sum to many billions.

Back to the Notaries. The task that they see ahead of them is to digitise the Apostille, which to some simplification is seen as a small text with a (dig)sig, which they have tried and tested. One lament common in all European tech adventures is that the Notaries, split along national lines, use many different systems: 7 formats indicating at least 7 softwares, frequent upgrades, and of course, ultimately, incompatibility across the Eurozone.

To make notary documents interchangeable, there are (posits Dr Bechini) two solutions:

- a single homogenous solution for digsigs; he calls this the "GSM" solution, whereas I thought of it as a potential new "directive failure".

- a translation platform; one-stop shop for all formats

A commercial alternative was notably absent. Either way, IVTF (or CNUE) has adopted and built the second solution: a website where documents can be uploaded and checked for digsigs; the system checks the signature, the certificate and the authority and translates the results into 4 metrics:

- Signed - whether the digsig is mathematically sound

- Unrevoked - whether the certificate is free of any report of compromise

- Unexpired - whether the certificate is still within its validity period

- Is a notary - the signer is part of a recognised network of TTPs

In the IVTF circle, a notary can take full responsibility for a document from another notary when there are 4 green boxes above, meaning that all 4 things check out.
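To make the four-box rule concrete, here is a minimal sketch of how such a check might be expressed. It is not the IVTF/CNUE platform's code, which I have not seen; the names DigsigReport, four_boxes and can_rely are hypothetical, and the cryptographic verification itself is assumed to have happened elsewhere and is represented only by its boolean result.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class DigsigReport:
    """Hypothetical result of checking one uploaded notarial document."""
    signature_valid: bool    # the digsig verifies mathematically
    revoked: bool            # the certificate has been reported compromised
    not_after: date          # last day of the certificate's validity
    signer_is_notary: bool   # signer is in a recognised network of TTPs


def four_boxes(report: DigsigReport, today: date) -> dict:
    """Translate the raw checks into the four metrics (True means green)."""
    return {
        "Signed": report.signature_valid,
        "Unrevoked": not report.revoked,
        "Unexpired": today <= report.not_after,
        "Is a notary": report.signer_is_notary,
    }


def can_rely(report: DigsigReport, today: date) -> bool:
    """The relying notary takes responsibility only on four green boxes."""
    return all(four_boxes(report, today).values())
```

The point of the design is that the relying notary never has to interpret a foreign format: whatever software produced the digsig, the answer is reduced to the same four yes/no boxes.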

This seems to be working: Notaries are now big users of digsigs, 3 million this year. This is balanced by some downsides: although they cover 4 countries (Deutschland, España, France, Italy), every additional country creates additional complexity.

Question is (and I asked), what happens when the expired or revoked certificate causes a yellow or red warning?

The answer was surprising: the certificates are replaced 6 months before expiry, and the messages themselves are sent on the basis of a few hours. So, instead of the document being archived with digsig and then shared, a relying Notary goes back to the originating Notary to request a new copy. The originating Notary goes to his national repository, picks up his *original* which was registered when the document was created, adds a fresh new digsig, and forwards it. The relying notary checks the fresh signature and moves on to her other tasks.

You can probably see where we are going here. This isn't digital signing of documents, as it was envisaged by the champions of same, it is more like real-time authentication. On the other hand, it does speak to that hypothesis of secure protocol design that suggests you have to get into the soul of your application: Notaries already have a secure way to archive the documents, what they need is a secure way to transmit that confidence on request, to another Notary. There is no problem with short term throw-away signatures, and once we get used to the idea, we can see that it works.

One closing thought I had was the sensitivity of the national registry. I started this post by commenting on the powerful position that notaries hold in European commerce, the presenter closed by saying "and we want to maintain that position." It doesn't require a PhD to spot the disintermediation problem here, so it will be interesting to see how far this goes.

A second closing thought is that Morandi cites

... the work of economist Hernando de Soto, who has pointed out that a major obstacle to growth in many developing countries is the absence of efficient financial markets that allow people to transform property, first and foremost real estate, into financial capital. The problem, according to de Soto, lies not in the inadequacy of resources (which de Soto estimates at approximately 9.34 trillion dollars) but rather in the absence of a formal, public system for registering property rights that are guaranteed by the state in some way, and which allows owners to use property as collateral to obtain access to the financial capital associated with ownership.

But, Latin America, where de Soto did much of his work, follows the Civil Notary system! There is an unanswered question here. It didn't work for them, so either the European Notaries are wrong about their assertion that this is the reason for no fraud in this area, or de Soto is wrong about his assertion as above. Or?

June 16, 2008

Digital Signing: new category for FC

A comment by Stephen Mason this morning caused me to realise that there is no digital signing category in FC. I have now added it, and you can see the link in the 'menu' section to the right of the main page, under Governance. Or click here.

Here's an older reference to an apparent application of digital signing of serious documents, spotted in the wild:

Great Lakes Educational Loan Services used an external e-signature service from DocuSign to help it deal with the flood of loan requests it gets around this time each year. It combined the service with its loan application system on its Web site. In the first two months, 80 percent of its 72,000 applicants used e-signatures, which cut its costs in this area by 75 percent, Musser said.

The rest of the article seems off-topic.

April 17, 2008

On the search for the perfect Identity Biometric: scratch Iris

Our world is obsessed with determining who you are. Part of this is working out who you are now, and then determining whether you were the same person 10 or 20 years back. Reliably. And that includes in the presence of some nasty attacker, who wants your money or your cooperation or worse.

In the race for the future biometric, here are today's favourites: fingerprints (criminals and visitors to America), facial pictures (passports), and eyes (some systems). As Richard Clayton points out, the latter, a dark horse, has some disadvantages:

...Here is a summary of how I [John Daugman] established for the Society that the above portraits show the same person, by running my Iris Recognition algorithms on magnified images of the eye regions in the 1984 and 2002 photographs. First I computed IrisCodes (see the mathematical explanation on this website) from both of her eyes as photographed in 1984. A processed portion of the 1984 photograph is shown here. (The superimposed graphics show the automatic localization of the iris and its boundaries, the scrubbing of some specular reflections from the eye, and a representation of the IrisCode.)

Then I computed IrisCodes from both of her eyes in the 2002 photograph. Those processed images can be seen here for her left and right eyes.

When I ran the search engine (the matching algorithm) on these IrisCodes, I got a Hamming Distance of 0.24 for her left eye, and 0.31 for her right eye. As may be seen from the histogram that arises when DIFFERENT irises are compared by their IrisCodes, these measured Hamming Distances are so far out on the distribution tail that it is statistically almost impossible for different irises to show so little dissimilarity. The mathematical odds against such an event are 6 million to one for her right eye, and 10-to-the-15th-power to one for her left eye.

Which unfortunately means that the biometric is too copyable. Scratch your Iris. If we can measure it from a photograph, that means I can photograph you and then insert your Iris into some other situation. Although an attack is not clear, the theoretical possibility is strong, and as we do not have many systems using this process yet, the attacks won't be clear.
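For readers who have not met the term, the Hamming Distance figures quoted above are fractions of differing bits. The sketch below shows only that last comparison step on toy bit strings; how an IrisCode is actually computed from a photograph is Daugman's work and is not reproduced here. The function name and the 16-bit codes are made up for illustration.

```python
def hamming_distance(code_a: str, code_b: str) -> float:
    """Fraction of bit positions at which two equal-length bit strings differ."""
    if len(code_a) != len(code_b):
        raise ValueError("codes must have the same length")
    if not code_a:
        raise ValueError("codes must not be empty")
    differing = sum(a != b for a, b in zip(code_a, code_b))
    return differing / len(code_a)


# Toy 16-bit "codes" (illustrative only, far shorter than any real code).
old_photo = "1011001110001101"
new_photo = "1011011110101100"
print(hamming_distance(old_photo, new_photo))  # 0.1875
```

Two unrelated random bit strings differ in about half their positions, so a distance near 0.5 says "different", and a figure well below that says "same". That is the sense in which 0.24 and 0.31 sit far out on the tail.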

I wonder how long it will be before we get Iris cameras that can work in the open?

And, as Philipp Güring points out, it rather raises a bit of a difficulty for the perfect biometric. If a machine can grab it, how do we stop ... any machine ... grabbing all of them?

March 20, 2008

World's biggest PKI goes open source: DogTag is released

One of the frequently lamented complaints of PKI is that it simply didn't scale (IT talk for not delivering enough grunt to power a big user base), and there was no evidence to the contrary. Well, that's not quite true, there is one large-scale PKI on the planet:

Red Hat has teamed up with August Schell to run and support the U.S. Department of Defense’s (DoD) public key infrastructure (PKI). The DoD PKI is the world’s largest and most advanced PKI installation, supporting all military and civilian personnel throughout the DoD worldwide. Red Hat Certificate Authority (CA) and Lightweight Directory Access Protocol (LDAP) are used to operate the DoD identity management infrastructure with August Schell providing hands-on support for the source-code-level implementation. The Red Hat Certificate System has issued more than 10 million digital credentials, many of which were issued immediately preceding conflicts in Iraq and Afghanistan.

It seems that its success or growth rode the wave of the recent Iraq military expedition (or war or whatever they call it these days). The reason for stressing the military context is that in such circumstances, things can be pushed out which would never evolve of their own pace in the government or private economy. This may make it a trendsetter, because it finally breached the barriers for the rest of the world. Or it may simply underline the reasons why it won't set any trends; only future history will tell us which it is.

Today's news is that the code behind the above PKI has just gone open source under the name of DogTag (a reference to the 2 metal identity tags that US servicemen and women wear around their necks). Bob Lord announces:

In December of 2004, Red Hat purchased several technologies and products from AOL. The most prominent of those products were the Directory Server and the Certificate System. Since then we opened the source code to the Directory Server (see http://directory.fedoraproject.org/ for all the details). However a number of factors kept the work to release the Certificate System on the back burner. That's about to change. Today, I'm extremely happy to announce the release of the Certificate System source code to the world.

This isn't a “Lite” or demo version of the technology, but the entire source code repository, the one we use to build the Red Hat branded version of the product. It's the real deal.

Another barrier breached! This could change the map for small, boutique or startup CAs. For those who think that this will lead to an explosion of interest in crypto and CAs and PKIs, I cannot resist adding some thoughts from Frank Hecker, who commented:

It's ... the end of an era: When I was working at Netscape ten years ago we were dealing with patented crypto algorithms (RSA), classified crypto algorithms (FORTEZZA), proprietary crypto libraries (RSA BSafe) and crypto applications (Netscape Communicator, Netscape Enterprise Server, Netscape Certificate Management System), and crypto export control. All gone now, or at least gone for most practical purposes (e.g., export control). Of course, now that people have all the open-source patent-free no-export-hassle crypto that they could possibly want, they're realizing that crypto in and of itself wasn't nearly the panacea they thought it was :-)

I cannot say it better!

March 06, 2008

Microsoft acquires Stefan Brands (patents and friends)

Interesting news: According to the posts over at identity corner, Microsoft is picking up (some of? all of?) Credentica's patent portfolio, and Stefan Brands himself will join the team.

Quick summary: Stefan Brands' patent portfolio is the "extension" of David Chaum's better known blinding and privacy work into a comprehensive claims-based framework. I don't know how it works, but it does things like reveal age, preferences, gender, and so forth without breaching privacy. That is, it can reveal these things in a way that means no other information can be divined. (It can also do digital cash, and it has some advantages over Chaum's original blinding patents, but I don't recall why.)

Now, it has been pretty clear that this discussion has been going on for a long time. The reason? It's never easy to speculate on how big companies plan to do things, but I would say it like this: Brands' technology is being viewed as the next generation CardSpace/InfoCard.

What that means in branding, package, and timeline issues is anyone's guess. The important point here is that CardSpace/InfoCard is Microsoft's first generation of framework where individual / commercial identities can live (nymous versus sold). Later on we'll see Brands' technology being used to add interesting things to that.

The significance of this is either huge or it's not; we'll know in a few years whether Microsoft are able to do something interesting here. This is not a given. Not because they haven't got the people and other assets to make this happen, but because they've got *all* the people and *all* the assets facing them, and those people and assets don't move easily.

By way of example, you probably saw my mention of nymous identities above. Yes, Microsoft. You already know what that means and don't believe it ... or you don't want it to happen because you don't understand it or you think the sky will fall in. Resistance is huge to new technologies, and, as we saw earlier today, the market for silver bullets is no respecter of sources.

Stefan's technology plus Microsoft's challenge takes this to the point where even *I* don't know what it means, so we are talking about really cracking up the equilibrium, not just shifting a few clicks like EV. (This also makes that Microsoft team the hottest place to work for the next few years in Rights, if not all of FC. Brush up on your CVs, guys.)

November 11, 2007

Oddly good news week: Google announces a Caps library for Javascript

Capabilities is one of the few bright spots in theoretical computing. In Internet software terms, caps can be simply implemented as nymous public/private keys (that is, ones without all that PKI baggage). The long and deep tradition of capabilities is unchallenged seriously in the theory literature.

It is heavily challenged in the practical world in two respects: the (human) language is opaque and the ideas are simply not widely deployed. Consider this personal example: I spent many years trying to figure out what caps really was, only to eventually discover that it was what I was doing all along with nymous keys. The same thing happens to most senior FC architects and systems developers, as they end up re-inventing caps without knowing it: SSH, Skype, Lynn's X9.59, and Hushmail all have travelled the same path as Gary Howland's nymous design. There's no patent on this stuff, but maybe there should have been, to knock over the ivory tower.
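For those still fighting the opaque language, the core idea fits in a few lines: possession of an unforgeable reference is the authority, and no identity is consulted. The sketch below is a toy under stated assumptions. It uses random bearer tokens from Python's secrets module rather than nymous public/private keys, and CapTable and its methods are hypothetical names, not any real caps library.

```python
import secrets


class CapTable:
    """Hypothetical registry mapping unguessable tokens to (resource, rights)."""

    def __init__(self):
        self._caps = {}

    def grant(self, resource: str, rights: set) -> str:
        """Mint a fresh capability; whoever holds the token holds the authority."""
        token = secrets.token_urlsafe(32)
        self._caps[token] = (resource, frozenset(rights))
        return token

    def attenuate(self, token: str, rights: set) -> str:
        """Derive a weaker capability carrying only a subset of the rights."""
        resource, held = self._caps[token]
        return self.grant(resource, held & set(rights))

    def allowed(self, token: str, resource: str, right: str) -> bool:
        """No identity lookup: the token alone decides."""
        entry = self._caps.get(token)
        return entry is not None and entry[0] == resource and right in entry[1]

    def revoke(self, token: str) -> None:
        """Withdraw one capability without touching any other."""
        self._caps.pop(token, None)


table = CapTable()
read_write = table.grant("account-42", {"read", "write"})
read_only = table.attenuate(read_write, {"read"})  # safe to hand to a third party
```

Replace the random token with a signature from a nymous key pair and the same shape appears: the key that signs is the thing that holds the rights.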

These real world examples only head in the direction of caps, as they work with existing tools, whereas capabilities is a top-down discipline. Now Ben Laurie has announced that Google has a project to create a Caps approach for Javascript (hat tip to JPM, JQ, EC and RAH :).

Rather... than modify Javascript, we restrict it to a large subset. This means that a Caja program will run without modification on a standard Javascript interpreter - though it won’t be secure, of course! When it is compiled then, like CaPerl, the result is standard Javascript that enforces capability security. What does this mean? It means that Web apps can embed untrusted third party code without concern that it might compromise either the application’s or the user’s security.

Caja also means box in Spanish which is a nice cross-over as capabilities is like the old sandbox ideas of Java applet days. What does this mean, other than the above?

We could also point to the Microsoft project CardSpace (was/is now InfoCard) and claim parallels, as that, at a simplistic level, implements a box for caps as well. Also, the HP research labs have a constellation of caps fans, but it is not clear to me what application channel exists for their work.

There are then at least two of the major financed developers pursuing a path guided by theoretical work in secure programming.

What's up with Sun, Mozilla, Apple, and the application houses, you may well ask! Well, I would say that there is a big difference in views of security. The first-mentioned group, a few tiny isolated teams within behemoths, are pursuing designs, architectures and engineering that are guided by the best of our current knowledge. Practically everyone else believes that security is about fixing bugs after pushing out the latest competitive feature (something I myself promote from time to time).

Take Sun (for example, as today's whipping boy, rather than Apple or Mozo or the rest of Microsoft, etc).

They fix all their security bugs, and we are all very grateful for that. However, their overall Java suite becomes more complex as time goes on, and has had no or few changes in the direction of security. Specifically, they've ignored the rather pointed help provided by the caps school (c.f., E, etc). You can see this in J2EE, which is a melting pot of packages and add-ons, so any security it achieves is limited to what we might call bank-level or medium-grade security: only secure if everything else is secure. (Still no IPC on the platform? Crypto still out of control of the application?)

Which all creates 3 views:

- low security, which is characterised by the coolness world of PHP and Linux: shove any package in and smoke it.

- medium security, characterised by banks deploying huge numbers of enterprise apps that are all at some point secure as long as the bits around them are secure.

- high security, where the applications are engineered for security, from ground up.

The Internet as a whole is stalled at the 2nd level, and everyone is madly busy fixing security bugs and deploying tools with the word "security" in them. Breaking through the glass ceiling and getting up to high security requires deep changes, and any sign of life in that direction is welcome. Well done Google.

September 10, 2007

Threatwatch - more data on cost of your identity

In the long-running threatwatch theme of how much a set of identity documents will cost you, Dave Birch spots new data:

Other than data breaches, another useful rule-of-thumb figure, I reckon, might come from identity card fraud since an identity card is a much better representation of a person's identity than a credit card record. Luckily, one of the countries with a national smart ID card just had a police bust: in Malaysia, the police seized fake MyKad, foreign workers identity cards, work permits and Indonesian passports and said that they thought the fake documents were sold for between RM300 and RM500 (somewhere between $100 to $150) each. That gives us a rule-of-thumb of $20 for a "credit card identity" and $100, say, for a "full identity". Since we don't yet have ID cards in the U.K., I thought that fake passports might be my best proxy. Here, the police says that 1,800 alleged counterfeit passports recovered in raid in North London were valued at £1m. If we round it up to 2,000 fakes, then that's £500 each. This, incidentally, was the largest seizure of fake passports in the U.K. so far and included 200 U.K. passports, which, according to police, are often considered by counterfeiters to be too difficult to reproduce. Not! The point I actually wanted to make is not that these figures are very variable, which they are, but that they're not comparing apples with apples. Hence the simplistic "what's your identity worth?" question cannot be answered with a simple number.

OK, that's consistent with my long-standing estimate of 1000 (in the major units, pounds, dollars, euros) to get a set of docs. It is important to track this because if you are building a system based on identity, this gives you a solid number on which to base your economic security. E.g., don't protect much more than 1000 on the basis of identity, alone.
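The arithmetic behind that rule of thumb is simple enough to write down. The sketch below only restates the numbers from the quote and my estimate of 1000; the function names are hypothetical, and the threshold is a rough guide rather than a measured quantity.

```python
def cost_per_document(total_value: float, count: int) -> float:
    """Average street value of one forged document in a seizure."""
    return total_value / count


def identity_alone_suffices(value_at_risk: float, forgery_cost: float = 1000.0) -> bool:
    """Rule of thumb: identity alone protects no more than a forgery costs."""
    return value_at_risk <= forgery_cost


# The North London raid: 1m pounds, rounded up to 2,000 fakes as in the quote.
print(cost_per_document(1_000_000, 2_000))  # 500.0

# A transaction worth 5,000 needs more than an identity check behind it.
print(identity_alone_suffices(5_000))  # False
```

Without the rounding, 1,800 passports for 1m comes to about 556 each, which does not change the conclusion.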

As a curious footnote, I recently acquired a new high-quality document from the proper source, and it cost me around 1000, once all the checking, rechecking, couriered documents and double phase costs were all added up. If a data set of one could be extrapolated, this would tell us that it makes no difference to the user whether she goes for a fully authentic set or not!

Luckily my experiences are probably an outlier, but we can see a fairly damning data point here: the cost of an "informal" document is far too similar to the cost of a "formal" document.

Postscript: It turns out that there is no way to go through FC archives and see all the various categories, so I've added a button at the right which allows you to see (for example) the cost of your identity, in full posted-archive form.

June 07, 2007

What is the DRM problem?

A Digital Rights Management system is a system to manage digital rights. But, if you read some news blogs, you get the impression that Apple has stopped managing the digital rights of its music sales (a.k.a. iTunes). E.g., 1, 2, 3.

No such. Managing digital rights can be done by putting an email address into a song. There is nothing in the business requirements of DRM that says it can't be broken, unless it was put there by an over-zealous cryptographer who has never owned a compact cassette recorder.

Breaking DRM is not what it is about. DRM is about creating a system to distribute the content to those who will pay, and make it hard for those who pay to avoid the system.

Note that this is not the same as stopping the distribution of content to those who won't pay. We don't care about them, as they won't pay. What we do care about is whether those that won't pay (a) get access to the content and (b) make it easy for those who will pay to get access to it. It's that second part that is the important part.

In all the history of MP3s, and indeed content of all forms, we have (a) in dumper-loads, and little or none of (b). Apple have come closest as a commercial enterprise, but they are still a long way from (b). If you think that this is wrong, help me (please!) with this little test: Tell me where the button is on my iTunes to get access to the paid content, unpaid?

Why is this so? It's simple to write but harder to grasp: it is because marketing is driven by marketing laws, and in this case, an economic law known as "discrimination." The DRM problem is to create a discrimination between those that will pay and those that will not. Sticking an email address in a song sounds like a way to do that, presuming that other things are going on too.

June 05, 2007

Identity resurges as a debate topic

RAH pointed last week to a series of blog postings on Identity. This seems to be a discussion between Ben Laurie, Kim Cameron and Stefan Brands. In contrast, Hasan points out the same debate is happening on slashdot. I'd vote for slashdot, this time. They say it's hard to do. They're right.

Why? As slashdot people suggest, this is a typical bottom-up versus top-down approach to a question you shouldn't be asking.

Drilling down, it's the same old story. High level managers say "we need to know who the consumer is." Or, as Dave says,

What is it about smart cards and health? Health ought to be one of the places where getting someone's identity right -- and being able to authenticate them quickly and efficiently -- is a driver.

Engineers in the space then address that problem, with varying degrees of modification of the original requirement. Note the temptation introduced by David Chaum to introduce privacy architectures so as to address the perceived harms of things like linkability, etc, continues.

The people over at Microsoft, Credentica, and probably Google are trying to build the toolkit. What they are not doing is establishing a clear user-driven set of requirements. That's because they can't; they are platform providers, and they are trying to establish a one-size-fits-all approach to the Rights space, and then to impose that on the users.

Instead, we should address the business problem at its core. Why do you need to know who the consumer is? Stefan Brands' techniques go *some* way towards suggesting this by pushing the notion of a claims-based toolkit based on sophisticated cryptography, but it is still only a suggestion, it's still a toolkit, and it still imposes bottom-up thinking on a top-down world.

The debate will rumble on, because the big(gest) corporations and governments are going to invest capital in this. In the direct FC space, the same thing happened in the 90s between Netscape, Sun and Microsoft. Then, as now, the business case was flawed.

A flawed case doesn't mean a failed business, necessarily. Instead, it suggests that the real battle is going on in the business strategy space, not in the FC space. This means we can be relatively relaxed about the various claims batting back and forth in the rights layer, and instead keep our eyes on the business battle.

April 02, 2007

The One True Identity -- cracks being examined, filled, and rotted out from the inside

Preaching to the converted again, but we all know that the critical flaw in the Identity push is that there isn't One True Identity. If we assume Identity is One and True in our designs, our systems or our society, this will come back to haunt us.

Now, in response to wide-scale evidence of failure of Identity-centric systems, there are emerging signs of people starting to realise that this is one of the foundations of America's Crisis of Identity. Here's one from an influential report by The Royal Academy of Engineering:

One of the issues that Dilemmas of Privacy and Surveillance - challenges of technological change looks at is how we can buy ordinary goods and services without having to prove who we are. For many electronic transactions, a name or identity is not needed; just assurance that we are old enough or that we have the money to pay. In short, authorisation, not identification should be all that is required. Services for travel and shopping can be designed to maintain privacy by allowing people to buy goods and use public transport anonymously. "It should be possible to sign up for a loyalty card without having to register it to a particular individual - consumers should be able to decide what information is collected about them," says Professor Nigel Gilbert, Chairman of the Academy working group that produced the report. "We have supermarkets collecting data on our shopping habits and also offering life insurance services. What will they be able to do in 20 years' time, knowing how many donuts we have bought?"

One of the technical people who know this -- that names and identity are no good for systems design -- is Gunnar Peterson. He points to someone called Mike who jumped on the Bandwagon:

Over the last few weeks, I’ve made an effort to become an OpenID power user. OK, ok, so maybe I’m just responding to the sound and the fury over this deceptively simple technology. But OpenID caught my imagination because it’s ostensibly something I get to own for myself—not something handed to me by the federal-industrial complex.

So, centralised, big-company naming schemes are bad, right? Therefore we need an open source, decentralised, everyone's-in-control alternative, right?

But don’t get me wrong: OpenID isn’t the problem here.

Bite the bullet. Copying the bad guy won't work.

OpenID simply calls into sharp focus something I’ve believed for years. It’s a kind of axiom, so I’d like to give it a name. I’ll call it, “identifiers.axiom.neunmike’s.axiomproxy.info”—that way you can easily refer to it unambiguously from anywhere. Here it is:

There are no identifiers, only attributes

Names are slippery. Most people have many more than one legal name, none of which are unique. They also have several dozen nicknames. There’s no practical way to get any of these every-day-use names onto a global namespace. And what’s a name after all but a synthetic attribute—a foreign key that we hope the receiving party stores somewhere so we can remember them later? Names are invaluable communication aids, but they have little to do with recognition, which is what’s at issue in most identity management contexts. Biologically, creatures don’t recognize others based on names but rather the confluence of attributes appearing within a certain context.

Right. And the unavoidable conclusion is that names don't cut it. Names are wrong for the job. What is right for the job is ... something else, but let's settle for that simple message.

"Names are no good." We need a catchy meme to invade the mindspace of the public on this one. Mike suggests:

Lao Tzu (who goes by several dozen names) had a pretty good post on this idea over 2000 years ago. In a section called “Ineffability,” he writes:

The Way that can be told of is not an Unvarying Way;

The names that can be named are not unvarying names.

It was from the Nameless that Heaven and Earth sprang;

The named is but the mother that rears the ten thousand creatures, each after its kind. (chap. 1, tr. Waley)

Not that it is encouraging to know that this bug has been in existence for as long as history has recorded names ... So the new European project of collecting info so as to breach nyms and find our One True Identity should come as no surprise:

In privacy-conscious Europe, some governments seek stricter rules on online anonymity

February 23, 2007 - 4:20AM

The cloak of online anonymity could be lifted in parts of Europe as some governments seek to make it easier to identify people who use fake names to set up e-mail accounts and Web sites.

The German and Dutch governments have taken the lead, writing proposals that would make the use of false or fake information illegal in opening a Web-based e-mail account and require phone companies to save detailed records, including when customers make calls, where and to whom.

The measures, none of which have yet become law, would not outlaw having false or misleading names on e-mail or other Internet addresses -- only providing false information to Internet service providers.

The aim, analysts say, is to make it easier for law enforcement officials to get information when they investigate crimes or terrorist attacks.

The Dutch! Tell me it ain't so! On the good news front, there is no truth to the rumour that privacy activists will be out of a job due to lack of interest.

January 09, 2007

Cat's Credit Card

Please read the following story, writes guest quizmeister Philipp, about Cat's Credit Card, and afterwards please answer the Puzzling Identity Question:

Bank of Queensland issues credit card to cat

Australia's Bank of Queensland has apologised for issuing a credit card to a customer's cat after its owner decided to test the bank's identity screening system.

The bank issued a credit card to Messiah the cat after its owner, Katherine Campbell from Melbourne, applied for a secondary card on her account under its name.

According to local press reports the cat was issued a Visa credit card with a A$4200 limit.

Campbell told reporters that the bank requested identification from Messiah but later sent a credit card without receiving any proof of ID. To make matters worse Campbell - who is the primary credit card holder - says she was not notified that a secondary credit card attached to her account had been issued.

The bank has apologised for the error but stated that people who apply for credit cards must sign to confirm the information provided is true. The bank says it will not be taking any legal action against Campbell in this instance.

And here is the question: Think twice!

Who is to blame?

[ ] The bank

[ ] The people working in the bank

[ ] The bad security technology, they should have used XYZ

[ ] The risk manager in the bank

[ ] The risk analysis of the bank

[ ] The credit card standards

[ ] The identity standards in that particular country

[ ] The general identity model

[ ] The missing species rights, allowing cats to have credit cards

[ ] The woman

[ ] The cat

[ ] Nobody

[ ] The one who didn't limit their liability beforehand

[ ] I don't know

[ ] All of the above

[ ] ________________

Best regards,

Philipp Gühring

PS: (;-)-CC (Smiley that shows a smiling cat with a credit card)

October 10, 2006

NZ on Identity

It is almost but not quite a truism that if you make identity valuable, then you make identity theft economic, amongst other things. Here's New Zealand's take on the issue, at the end of a long article on government reform:

Let me share with you one last story: The Department of Transportation came to us one day and said they needed to increase the fees for driver's licenses. When we asked why, they said that the cost of relicensing wasn't being fully recovered at the current fee levels. Then we asked why we should be doing this sort of thing at all. The transportation people clearly thought that was a very stupid question: Everybody needs a driver's license, they said. I then pointed out that I received mine when I was fifteen and asked them: "What is it about relicensing that in any way tests driver competency?" We gave them ten days to think this over. At one point they suggested to us that the police need driver's licenses for identification purposes. We responded that this was the purpose of an identity card, not a driver's license. Finally they admitted that they could think of no good reason for what they were doing - so we abolished the whole process! Now a driver's license is good until a person is 74 years old, after which he must get an annual medical test to ensure he is still competent to drive. So not only did we not need new fees, we abolished a whole department. That's what I mean by thinking differently.

The rest of the article is very well worth reading, for a summary of NZ's economic successes.

August 22, 2006

Identity v. anonymity -- that is not the question

An age-old debate has sprung up around something called Identity 2.0. David Weinberger related it to transparency (and thus to open governance). David indicates that transparency is good, but it has its limits as an overarching framework:

So, all hail transparency... except...

...Except it's important that we preserve some shadows. Opaqueness in the form of anonymity protects whistleblowers and dissidents, women being beaten by their husbands, girls looking for abortion advice, people working through feelings of shame about who they are, and more. Anonymity and pseudonymity allow people to participate on the Web who perhaps aren't as self-confident as the loudest voices we hear there. It's even been known to enable snarky bloggers to comment archly on their industry, even if sometimes they play too rough.

Where he's heading is that he's suggesting that anonymity is a good thing in and of itself, and that it is something that should be in Identity 2.0. I'll leave you to wonder whether this is a political point or a savvy marketing angle aimed at early adopters.

Ben Laurie made a more incisive plea for anonymity:

But the point is this: unless you have anonymity as your default state, you don’t get to choose where on that spectrum you lie.

Eric Norlin says

Further, every “user-centric” system I know of doesn’t seek to make “identity” a default, so much as it seeks to make “choice” (including the choice of anonymity) a default.

as if identity management systems were the only way you are identified and tracked on the ‘net. But that’s the problem: the choices we make for identity management don’t control what information is gathered about us unless we are completely anonymous apart from what we choose to reveal.

Unless anonymity is the substrate, choice in identity management gets us nowhere. This is why I am not happy with any existing identity management proposal - none of them even attempt to give you anonymity as the substrate.

There is a bit of an insiders' secret here that Ben almost gets to the nub of (my emphasis). Unfortunately, nobody will believe the secret until they've lost their shirt for not believing it, and even then, it's optional. FWIW I'll reveal it here, you get to keep your shirt on and your beliefs intact, though.

Identity systems fail and fail again. From the annals of financial cryptography (Rights layer), the reason is that the design starts from a position of "identity," an assumption that is embedded as a meta-requirement from the very earliest days. I sometimes call this the "one true identity problem," meaning there isn't one true identity. That is, identity is simply too soft a concept to be called a requirement, and is thus a flawed foundation on which to build a large scale project. (And, if your concept calls for identity, assume large scale from the start.)

In order to accommodate different views of what identity is -- something necessary because it's not only soft but contentious in more ways than intimated by David above -- we need a flexible system. A very flexible system, indeed a system of such exceedingly flexible quantities it will work without any identity at all.

In fact, it turns out that the best way to build an identity system is to build an identity-free system, and then to layer your favourite identity flavour over the top. The system should aim to work well without any identity, so it can support all. To develop this further, by far the best base for such a system is a public-key-based pseudonym system. It is easy to put at least one identity system over the top of a standard pseudonym system, and it is plausible to put most identity concepts over a really good pseudonym system.
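To make the layering concrete, here is a minimal sketch in Python. It is an illustration under loud assumptions, not anybody's real design: the names PseudonymLedger, IdentityLayer and pseudonym_for are invented for this example, random bytes stand in for a real public key, and signatures are left out entirely. The only point it shows is that the core runs happily on pseudonyms alone, and identity is an optional overlay.

```python
import hashlib
import secrets


def pseudonym_for(public_key: bytes) -> str:
    """Derive a pseudonym from public key bytes (assumption: SHA-256 hex digest)."""
    return hashlib.sha256(public_key).hexdigest()


class PseudonymLedger:
    """Identity-free core: balances are keyed by pseudonym and nothing else."""

    def __init__(self) -> None:
        self.balances: dict[str, int] = {}

    def open_account(self, public_key: bytes) -> str:
        nym = pseudonym_for(public_key)
        self.balances.setdefault(nym, 0)
        return nym

    def deposit(self, nym: str, amount: int) -> None:
        self.balances[nym] += amount


class IdentityLayer:
    """Optional overlay: attaches claims (a name, 'over 18', ...) to a pseudonym."""

    def __init__(self) -> None:
        self.claims: dict[str, dict[str, str]] = {}

    def attach(self, nym: str, attribute: str, value: str) -> None:
        self.claims.setdefault(nym, {})[attribute] = value

    def lookup(self, nym: str) -> dict[str, str]:
        return self.claims.get(nym, {})


# Hypothetical stand-in for a key pair: random bytes play the public key.
alice_key = secrets.token_bytes(32)

ledger = PseudonymLedger()
nym = ledger.open_account(alice_key)
ledger.deposit(nym, 100)  # the core works with no identity attached

overlay = IdentityLayer()
overlay.attach(nym, "age_over_18", "yes")  # one identity flavour, layered on top
```

Note that the ledger never imports or calls the overlay. Swap in a different identity flavour, or none, and the core does not change.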

Yet, it is somewhere between traumatic and impractical to do it any other way. As an example, look at what Kim Cameron writes: