August 31, 2005

The HR Malaise in Britain - 25% of CVs are fiction

As discussed here in depth a while back, there is a growing Human Resources problem in some countries. Here's an actual test of the scope of the issue, in which employers invite people to lie to them in the interview, and jobseekers happily oblige:

One CV in four is a work of fiction
By Sarah Womack, Social Affairs Correspondent (Filed: 19/08/2005)

One in four people lies on their CV, says a study that partly blames the "laxity" of employers.

The average jobseeker tells three lies but some employees admitted making up more than half their career history.

A report this month from The Chartered Institute of Personnel and Development highlights the problem. It says nearly a quarter of companies admitted not always taking up candidates' references and a similar percentage routinely failed to check absenteeism records or qualifications.

(Example snipped...) The institute said that the fact that a rising number of public sector staff lie about qualifications or give false references was a problem not just for health services and charities, where staff could be working with vulnerable adults or children, but many public services. The institute said a quarter of employers surveyed "had to withdraw at least one job offer. Others discover too late that they have employed a liar who is not competent to do the job."

Research by Mori in 2001 showed that 7.5 million of Britain's 25.3 million workers had misled potential employers. The figure covered all ages and management levels.

The institute puts the cost to employers at £1 billion.

© Copyright of Telegraph Group Limited 2005.

If it found that 25% of workers admitted to making material misrepresentations, then lying to get a job is not an abnormality; rather, it is normal. Certainly I'd expect similar results in the computing and banking (private) sectors, and before you get too smug over the pond, I'd say if anything the problem is worse in the US of A.
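For scale, the older Mori figure quoted in the article works out to rather more than the "one in four" headline, taking its two numbers (misleading workers out of all British workers) at face value:

```latex
\frac{7.5\ \text{million who misled employers}}{25.3\ \text{million workers}} \approx 0.296 \approx 30\%
```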

There is no point in commenting further than to point to this earlier essay: Lies, Uncertainty and Job Interviews. I wonder if it had any effect?

August 30, 2005

How to Build a Secure Credit Card Authoriser - 5 mins biz plan

Business 2.0 runs an innovative idea - ask the VCs what they'll spend money on right now! First cab off the rank is pure FC: building an app to secure CC purchases from the mobile / cell phone / PDA platform:

$5M Mobile ID for Credit Card Purchases

WHO: John Occhipinti, Woodside Fund, Redwood Shores, Calif.

WHO HE IS: A former executive at Oracle and Netscape, Occhipinti is a managing director and security specialist, leading investments in BorderWare and Tacit.

WHAT HE WANTS: Fraudproof credit card authorization via cell phones and PDAs.

WHY IT'S SMART: Credit card fraud is more rampant than ever, and consumers aren't the only ones feeling the pain. Last year banks and merchants lost more than $2 billion to fraud. Most of that could be eliminated if they offered two-part authentication with credit and debit purchases -- something akin to using a SecureID code as well as a password to access e-mail. Occhipinti thinks the cell phone, packaged with the right software, presents an ideal solution. Imagine getting a text message on your phone from a merchant, prompting you for a password or code to approve the $100 purchase you just made on your home PC or at the mall. It's an extra step, but one that most consumers would be happy to take to safeguard their privacy. More important, Occhipinti says, big banks would pay dearly to be able to offer the service. "It's a killer app no one's touched yet," Occhipinti says, "but the technology's within reach."

WHAT HE WANTS FROM YOU: A finished prototype application within eight months. "I'm looking for the best technologists in security and wireless, the top 2 percent in their industry," Occhipinti says. The team would need to be working with a handful of banks and merchants ready to start trials, in hopes of licensing the technology or selling the company.

SEND YOUR PLAN TO: jco@woodsidefund.com

FCers know well how long we've been pushing this idea (Pelle beat me to it by a day, and Odio.us beats anyone any time you push the button). For the newcomers, here are some scratch notes:

The basic essence of doing anything securely has been known for more than a decade, but the business models in place can best be described as blocking and non-secure, which has led to the current situation with credit cards. We know how to fix this, but it requires a ground-up replacement (a.k.a. ignoring all prior "popular security").

Tech would be like this: Create the key on the PDA/cell platform, then register it in a human process with a server. Use that key to then authenticate each transaction. This could be done with a simple token, supplied by the merchant, remitted to a gateway that then matches it into the backends of the CC system.

Two alternatives: either no CC info need be transmitted on the net, and the gateway escrows the details in waiting, OR the CC packet is encrypted and sent. Either works, as the key is the foundation on which you can build anything you like.
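A minimal sketch of the first alternative (gateway escrow), under loud assumptions: Python's standard library has no public-key signatures, so this swaps in an HMAC over a secret created on the phone, where a real deployment would more likely keep a private signing key on the handset. `Gateway`, `approval_mac` and `merchant_token` are hypothetical names, not any existing product's API.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass, field
from typing import Optional

# ASSUMPTION: HMAC with a phone-generated shared secret stands in for a
# proper signature key. All names here are hypothetical.

def merchant_token() -> str:
    """Merchant issues a fresh, unguessable token for each purchase."""
    return secrets.token_hex(16)

def approval_mac(key: bytes, token: str, amount_cents: int) -> bytes:
    """What the phone sends back: a MAC binding its key to this token and amount."""
    msg = f"{token}|{amount_cents}".encode()
    return hmac.new(key, msg, hashlib.sha256).digest()

@dataclass
class Gateway:
    """Holds registered device keys and escrows card details (never sent on the net)."""
    keys: dict = field(default_factory=dict)       # device_id -> key
    escrow: dict = field(default_factory=dict)     # device_id -> card reference
    used_tokens: set = field(default_factory=set)  # replay protection

    def register(self, device_id: str, key: bytes, card_ref: str) -> None:
        # In the post this is a *human* registration process; here it is one call.
        self.keys[device_id] = key
        self.escrow[device_id] = card_ref

    def authorise(self, device_id: str, token: str, amount_cents: int,
                  mac: bytes) -> Optional[str]:
        """Return the escrowed card reference to pass to the CC backend, or None."""
        key = self.keys.get(device_id)
        if key is None or token in self.used_tokens:
            return None
        if not hmac.compare_digest(approval_mac(key, token, amount_cents), mac):
            return None
        self.used_tokens.add(token)
        return self.escrow[device_id]
```

The escrow map is what lets no CC info travel on the net: the phone only ever returns a MAC over the merchant's token and amount, so a replayed approval or a tampered amount is refused. The second alternative would instead encrypt the card packet under the same key.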

Phones now have the grunt to do the proper crypto. The problem is not a crypto or tech problem, but a "right crypto and right protocols" problem. It is critical to avoid heavy-weight PKI, or connection oriented technologies, or WAP or like telco gateway server models. None of these will work, for various reasons.

Beyond that the problem is a business one: being flexible and sexy enough at the *gateway*, and for that you need both tech-savvy and CC-savvy people. You also need some handy phone hackers, maybe 2-3, and one and only one young opportunistic crypto guy, as long as your core architect can communicate the ideas well enough. Selling into the banks is hard. Look for banking entrée, and also consider the securities market, as its transaction profile is much more suited to this model. Further, recall that the basis of the phone is lifestyle, so the rollout should be done p2p, and thus WoT (web of trust) is used as the basic authentication.

(Names of suitable FCers all available on request!)

Bear in mind that Paypal started out with this model, and dumped it. Things were different then: they didn't have the phones, nor the security imperative.

August 28, 2005

The Rise and Absorption of Paypal - a lesson for offshore

Over on Cato, there is an article bemoaning the fate of Paypal:

The company would no longer permit customers to use the service for purchases associated with "mature audiences," gambling, hate paraphernalia, or prescription drugs, along with a long list of other prohibitions. It would also fine its customers up to $500 for attempting such transactions. Those terms apparently applied to donations to blogs with content PayPal found objectionable.

That's a far cry from the libertarian vision founders Peter Thiel and Max Levchin originally had for PayPal, an online payment service that enables account holders to send money to anyone in the world with an e-mail address. Thiel and Levchin had hoped PayPal would grow to become an extra-governmental system of currency, something reminiscent of the world described in Neal Stephenson's novel Cryptonomicon, in which programmers use encryption to create an offshore data haven free from government control.

What follows in that article is mostly a review of The Paypal Wars, by Eric M. Jackson. I've not read it, but can offer my opinions: Paypal should have failed a dozen times over, and the book seems to agree. The fact that they survived is good testament to their persistence; hundreds if not thousands of their competitors failed in these and similar ways.

The book also reads as testimony to the offshore theory of early digital money pundits. Back in the mid 90s when we were building these things, the wiser voices amongst us realised that we should go offshore, not for any conceivable tax benefits, but for the simplicity of regulation and the cost savings from a reduction in 'enemies', to use the Paypal meme.

Offshore is like that - it has a higher startup cost in capital because everything is more expensive there. But if you succeed, then it quickly becomes more effective, simply because there are far fewer external problems to deal with. It makes a difference when the jurisdiction is small: not only are the regulators somewhat limited in pandering to whoever has their ear, but you can also meet the entire team around a small coffee table.

So what about the payment systems that did go offshore? Primarily this would be the gold units (or, these are the ones I know well). e-gold, the leader in transactional volume, branched offshore in late 2000, splitting into e-gold Ltd as the issuance company in the Caribbean and G&SR as the trading company in mainland USA. In practical effect, e-gold then contracted all operations back to G&SR so the physical move was not of great import, but the jurisdictional move was quite significant.

It worked out quite well, notwithstanding the frequent criticism. Considering always that the market of choice was mostly the US and the major market maker was a monopoly provider there, this move created just enough of a jurisdictional separation to establish a suitable distance between the 'enemies' and the operators; there always remains a possibility that if an enemy pushes too hard then the stuff will really move offshore.

In a similar timeframe, goldmoney started up and is now the leader by value under management. It had a fuller offshore arrangement, including a more or less complete range of 5PM governance partners. An early decision to place itself in the heavy jurisdiction of Jersey put goldmoney under the beady eye of the regulators there, which I would count as a mistake. For the benefit of a brand-name offshore finance center, it had to adopt a very stringent due diligence regime that slowed its spread dramatically. As the DD was regrettably far more severe than that of a mainland USA bank or any other operator, this placed its survival in doubt in the critical years 2-4.

However, goldmoney may have overcome this deadweight drag by coupling up with Kitco, and now something like 80% of sales go through that US coins and bullion seller. Does the DD still slow them down? Yes, and in spades, but it would seem that the Kitco channel is so strong that it has overcome these difficulties.

What then to conclude for new operators? Doing the partial offshore thing seems like a good compromise. It's not a completely safe choice, as even e-gold faced many life threatening challenges (and, finding good offshore advice is like asking Don Juan to chaperone your daughters). But if you can appreciate the statistics of the Paypal story then it still makes sense to consider.

And, as a side note, the Financial Cryptography conference returns to Anguilla, our spiritual home, for the 10th edition in 2006. Back in 1997 it seemed that Anguilla had a chance of being somewhere special, but two things killed the excitement that the conference generated: the lack of any real net (Cable and Wireless had/has a death grip on Anguilla, say no more ...) and the dramatic difficulties in importing financial cryptographers past the normal anti-immigration policies (common around the world). Between them, they meant that no serious operation could take root. (Addendum: Pelle also comments in general.)

I calculated that the interest generated would have naturally led to about 100 FCers by 2000 and created a very welcome third sector for the island, but for those factors; at one stage we were seeing serious plans for half a dozen FCers every month. Only a few brave fools were stupid enough to ship in, in spite of the two big barriers, and by about 2002 they were all gone.

Microsoft to release 'Phishing Filter'

It looks like Microsoft are about to release their anti-phishing tool (first mooted here months ago):

WASHINGTON - Microsoft Corp. will soon release a security tool for its Internet browser that privacy advocates say could allow the company to track the surfing habits of computer users. Microsoft officials say the company has no intention of doing so.

The new feature, which Microsoft will make available as a free download within the next few weeks, is prompting some controversy, as it will inform the company of Web sites that users are visiting.

The browser tool is being called a "Phishing Filter." It is designed to warn computer users about "phishing," an online identity theft scam. The Federal Trade Commission estimates that about 10 million Americans were victims of identity theft in 2005, costing the economy $52.6 billion dollars.

What follows in that article is lots of criticism. I actually agree with that criticism, but in the wider picture, it is more important for Microsoft to weigh into the fight than to get it right. We have a crying need to focus the problem on what it is - the failure of security tools and the security infrastructure that Microsoft and other companies (RSADSI, VeriSign, CAs, Mozilla, Opera...) are peddling.

Microsoft, by dint of the fact that they are the only large player taking phishing seriously, are now the leaders in security thinking. They need to win some, I guess.

(Meanwhile, I've reinstated the security top tips on the blog, by popular demand. These tips are designed for ordinary users. They are so brief that even ordinary users should be able to comprehend - without needing further reference. And, they are as comprehensive as I can get them. Try them on your mother and let me know...)

Application mirroring - In which I strike another blow against the System Programmer's Guild

Once upon a time I was a systems programmer. I was pretty good too; not brilliant but broad and sensible enough to know my limitations, perhaps the supreme asset of all. I attribute this good fortune to having attended perhaps the best place outside Bell Labs for early Unix work - UNSW - and my own innate skepticism which saved me from the self-glorification of the "guru culture" that pervaded those places and days.

Being a systems programmer is much more technically challenging than their alter ego, the applications programmer. The technology, the concepts, the things you can do are simply at a higher intellectual plane. Yet there is a fundamental limit - the human being. Wherever and however you do systems programming, you always strike up against that immovable yet indefinable barrier of the end user. At the end of the day, your grand complex and beautiful design falls flat on its face, because the user pressed the wrong button.

A good systems programmer then reaches a point where he can master his entire world, yet never advance intellectually. So one day I entered the world of applications programming and looked back only rarely. Still, I carried the ethos and the skepticism with me...

This meandering preamble is by way of reaching here: Recently I hit a tipping point and decided to throw a Core Systems Programming Construct into the trash. RAID, mirrored drives and all that have been a good old standby of well-managed systems for a decade or more. In one act, Confined and Plonk. To hell with it, and all these sodding special drivers and cards and SANs and NASes and what-not - out the window. For the last three or four years we'd been battling with mirroring technologies of one form or another, and here's the verdict: they all suck, in one way or another. I was very glad to spy research (since lost) that claimed that something like one in eight of all mirrored systems end in tears when the operator inserts the wrong drive and wipes his last backup... It restores faith to know that we weren't the only ones.

So...

I dived into our secure backend code and discovered that coding up mirroring at the application level took all of a day. That's all! In part, that's because the data requirements are already strictly refined into one log feed and one checkpoint feed, and they both go through the same hashed interface. Another part is that I'd been mulling it over for months; my first attempt had ended in retreat and a renewed attack on the mirroring drivers... In those ensuing months the grey matter had been working the options as a nice -n 20 task.

But, still! A day! It is a long time since I'd coded up a feature in only a day - I was somewhat shocked to have got self-testing done by the time it was dark.

OK, I thought, surely more problems will turn up in production? Well, this morning I moved a server from an unprotected machine to a new place. I turned on mirroring and then deliberately and painfully walked through starting it up without the application-defined mirror. Bang, bang, bang, it broke at each proper place, and forced me to repair. Within 30 mins or so, the migration was done, and turning on mirroring was only about 10 mins of that!
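A minimal sketch of what application-level mirroring can look like, assuming (hypothetically) that every record, log or checkpoint, goes through one append call that hashes it and writes it to two directories, and that startup refuses to proceed if the mirror is missing or disagrees. This is not the author's backend code; `MirroredLog` and its one-line-per-record format are invented for illustration.

```python
import hashlib
import os
import tempfile

class MirrorError(Exception):
    """Mirror missing or inconsistent: stop and make the operator repair it."""

class MirroredLog:
    """Hypothetical append-only log written to two places via one hashed interface."""

    def __init__(self, primary_dir: str, mirror_dir: str, name: str = "log") -> None:
        for d in (primary_dir, mirror_dir):
            if not os.path.isdir(d):
                raise MirrorError(f"mirror location missing: {d}")
        self.paths = [os.path.join(primary_dir, name), os.path.join(mirror_dir, name)]
        primary, mirror = (self._read(p) for p in self.paths)
        if primary != mirror:
            raise MirrorError("primary and mirror disagree; refusing to start")

    @staticmethod
    def _encode(record: bytes) -> bytes:
        # One line per record: "<sha256 hex> <record hex>\n".
        digest = hashlib.sha256(record).hexdigest()
        return f"{digest} {record.hex()}\n".encode()

    def append(self, record: bytes) -> None:
        """Write the same hashed line to both copies, forcing each to disk."""
        line = self._encode(record)
        for path in self.paths:
            with open(path, "ab") as f:
                f.write(line)
                f.flush()
                os.fsync(f.fileno())

    @staticmethod
    def _read(path: str) -> list:
        if not os.path.exists(path):
            return []
        records = []
        with open(path, "rb") as f:
            for line in f:
                digest, payload = line.decode().rstrip("\n").split(" ", 1)
                record = bytes.fromhex(payload)
                if hashlib.sha256(record).hexdigest() != digest:
                    raise MirrorError(f"hash mismatch in {path}")
                records.append(record)
        return records

    def records(self) -> list:
        return self._read(self.paths[0])

def demo() -> tuple:
    """Write two records, reopen, then delete the mirror copy and reopen again."""
    with tempfile.TemporaryDirectory() as d1, tempfile.TemporaryDirectory() as d2:
        log = MirroredLog(d1, d2)
        log.append(b"checkpoint-1")
        log.append(b"txn-2")
        reopened = MirroredLog(d1, d2).records()
        os.remove(os.path.join(d2, "log"))
        try:
            MirroredLog(d1, d2)
            refused = False
        except MirrorError:
            refused = True
    return reopened, refused
```

The design choice is that breakage is loud: a missing or divergent copy stops startup rather than being silently resynced, which is the "broke at each proper place" behaviour described above. A crash between the two writes leaves the copies one record apart; this sketch merely reports that, and a real system would need a repair step.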

So where is all this leading? Not that I'm clever or anything, but to the continuing observation that many of the systems, frameworks, methodologies, and what have you are just ... junk. We would be better off without them, but the combined weight of marketing and journalistic wisdom scares us away from bucking the trend.

Surely so many people can't be wrong? we say to ourselves, but the history seems to provide plenty of evidence that they were indeed just that: Wrong. Here's a scratch list:

SSO, PKI, RAID and mirrored drives (!), Corba, SQL, SOAP, SSL, J2EE, firewalls, .NET, ISO, Struts, provable security, AI, 4GLs, IPSec, IDS, CISPs, rich clients...

Well, ok, so I inserted a few anti-duds in there. Either way, we'd probably all agree that the landscape of technology hasn't changed that much in the last N years, but what has changed is the schlockware. Every year portends a new series of must-have technologies, and it seems that the supreme skill of all is knowing which to adopt, and which to smile sweetly at while waiting for them to fail under their own mass of incongruities.

August 22, 2005

Buying ID documents

Another structural beam just rusted and fell out of the foundations of the Identity Society. There is now a (thriving?) offshore market in forged secondary documents. Things like electricity bills, phone bills, credit documents, council or city papers can be drawn up for cash.

Beverley Young of Cifas, a fraud advice service set up by the credit industry, said: 'It is hard to prevent criminals using the internet to find false documentation, which can then be used to steal people's identities.

'We can warn people about false documents, but the sophisticated techniques used by the fraudsters mean that in most cases we cannot stop them. The regulators are powerless, too. ...Cifas said the replica documents looked authentic because the people operating many of the websites had bought up printing equipment that was used by the companies whose documents they fake.

Fraud investigating company CPP of York accessed one of these websites and paid £200 for false statements from British Gas, Barclaycard, Barclays bank and Revenue & Customs.

As a reminder, what's left to establish Rights? There is a wealth of other techniques in Rights:

- web of trust, like OpenPGP and CACert

- nymous techniques leading up to capabilities (hard assets not credit)

- tokens popularised by the blinded cash formula

- Brands framework
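Of these, the blinded-cash formula is the easiest to show in a few lines. The sketch below is textbook Chaum-style RSA blinding with toy key sizes, which is wildly insecure and for illustration only; `coin_to_int`, the serial number and the blinding factor are hypothetical. The point is that the issuer signs a token it never sees, so the token carries a right (it verifies against the issuer's key) without carrying an identity. It needs Python 3.8+ for `pow(x, -1, n)`.

```python
import hashlib
from math import gcd

# Toy RSA key from textbook numbers: NEVER use sizes like this in practice.
P, Q = 61, 53
N = P * Q                          # 3233
E = 17                             # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent

def coin_to_int(serial: bytes) -> int:
    """Hash a coin serial number into the RSA message space."""
    return int.from_bytes(hashlib.sha256(serial).digest(), "big") % N

def blind(m: int, r: int) -> int:
    """User hides m by multiplying it by r^E before sending it to the issuer."""
    assert gcd(r, N) == 1, "blinding factor must be invertible mod N"
    return (m * pow(r, E, N)) % N

def issuer_sign(blinded: int) -> int:
    """Issuer signs only the blinded value; it never learns m."""
    return pow(blinded, D, N)

def unblind(blind_sig: int, r: int) -> int:
    """User strips r, leaving an ordinary signature m^D mod N."""
    return (blind_sig * pow(r, -1, N)) % N

def verify(m: int, sig: int) -> bool:
    """Anyone can check the token against the issuer's public key (N, E)."""
    return pow(sig, E, N) == m
```

The algebra is (m·r^E)^D = m^D·r (mod N), so multiplying by r⁻¹ leaves m^D. A real issuer would also record spent serials to stop double-spending, and would use full-size keys with proper padding.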

From a technology pov we have no issue. But for society at large this is yet more evidence of a looming clash between the unstoppable machine of Identity and the unimpressible rock of reality.

August 20, 2005

Notes on security defences

Adam points to a great idea by EFF and Tor:

Tor is a decentralized network of computers on the Internet that increases privacy in Web browsing, instant messaging, and other applications. We estimate there are some 50,000 Tor users currently, routing their traffic through about 250 volunteer Tor servers on five continents. However, Tor's current user interface approach, running as a service in the background, does a poor job of communicating network status and security levels to the user.

The Tor project, affiliated with the Electronic Frontier Foundation, is running a UI contest to develop a vision of how Tor can work in a user's everyday anonymous browsing experience. Some of the challenges include how to make alerts and error conditions visible on screen; how to let the user configure Tor to use or avoid certain routes or nodes; how to learn about the current state of a Tor connection, including which servers it uses; and how to find out whether (and which) applications are using Tor safely.

This is a great idea!

User interfaces are one of our biggest challenges in security, alongside the challenge of shifting mental models from no-risk cryptography across to opportunistic cryptography. We've all seen how incomplete UIs can drain a project's lifeblood, and we all should know by now just how expensive it is to create a nice one. I wish them well. Of the judges, Adam was part of the Freedom project, which was a commercial forerunner of Tor (I guess), and is a long-time privacy / security hacker. Ping runs Usable Security - the nexus between UIs and secure coding. (The others I do not know.)

In other good stuff, Brad Templeton was spotted by FM carrying on the good fight against the evils of no-risk cryptography (1, 2, 3). Unfortunately there is no blog to point at but the debate echoes what was posted here on Skype many moons back.

The mistakes that the defender, SS, makes are routine:

1. Confused threat models, in the normal fashion: because we can identify one person on the planet who needs ultimate, top-notch security, we assert that all people need this. (C.f., airbags and human rights workers. Pah.)

2. A confused user-needs model, again normal. The needs of a corporation selling to fee-paying clients are completely disjoint from the needs of the Internet user. Neglecting the Internet's security at systemic levels in favour of the need to sell product has produced the mess we have to clean up today. E.g., "There is an abundance of encryption in use today where it's needed." should be read as "Cryptography should be everywhere where we can sell it, and is dangerous elsewhere."

3. Ye Olde Mythe that security-by-obscurity results in a false sense of security. In practice, security-by-sales has led to a much greater and thus falser false sense of security, and is part and parcel of the massive security mess we face now.

4. Because opportunistic cryptography so challenges the world view of old-timers and commercial security providers, they have a lot of trouble being scientific about it and often feel they are being attacked personally. This means they are unable to contribute to the debate about phishing, malware and the like, as they are still blocked on selling the very model that brought about these ills. Every time they get close to discovering how these frauds come about, they have to reject the logic that identifies their solution as the problem.

Breaking through this barrier, getting people to think scientifically and use cryptography to benefit has proven to be the hardest thing! But the meme might be starting to take hold. Scanning Ping's blog, it seems he has been pushing the idea of a competition for some time:

The best I’ve been able to come up with so far is this:

Hold yearly competitive user studies in which teams compete to design secure user interfaces and develop attacks against other teams’ user interfaces. Evaluate the submissions by testing on a large group of users.

And this logic is in direct response to the discord between what users will use and what security practitioners will secure. (And, note that Brad Templeton, Jedi noted above, is apparently the chair of the EFF running this competition.)

Ping asks is there any other way? Yes, I believe so. If you are mild of heart, or are not firmly relaxed, stop reading now.

<ControversyAlert>The ideal security solution is built with no security at all. Only later, after the model has been proven as actually sustainable in an economic fashion, should security be added.

And then, only slowly, and only in ways that attempt not to change or limit the user's ability. The only reason to take away usability from the users is if the system is performing so badly it is in danger of collapse.

</ControversyAlert>

This model is at the core of the successful security systems we generally talk about. Credit cards worked this way, adding security hacks little by little as fraud rates rose. Indeed, the times something truly secure was added, it either flopped (smart cards were rejected) or backfired (SSL led to phishing).

To switch sides and maintain the same point :-) just-in-time cryptography is how the web was secured! First HTTP was designed, and then a bandaid was added. (Well, actually TLS is more of a plaster cast where a bandaid should have been used, but the point remains valid, and bidirectional.)

Same with SSH - first Telnet proved the model, then it was replaced by SSH. PGP came later than email and secured it after email was proven. WEP is adequate in strength for wireless but is too hard to turn on, so it has failed to impress. Perversely, it is too hard to turn on because it was built too securely.

Digital cash never succeeded - and I suggest it is in part because it was designed to be secure from the ground up. In contrast look at Paypal and the gold currencies. Basically, they are account money with that SSL-coloured plaster cast slapped on. (They succeeded, and note that the gold currencies were also the first to treat and defeat phishing on a mass scale.)

So, over to you! We already know this is an outrageous claim. The question is why is JIT crypto so close to reality? How real is it? Why is it that everything we've practiced as a discipline has failed, or only worked by accident?

(See also Security Usability with Peter Gutmann.)

Notes on today's market for threats

A good article on Malware for security people to brush up on their understanding. On honey clients, Balrog writes (copied verbatim):

In my earlier post about Microsoft’s HoneyMonkey project I mentioned that the HoneyNet Project will probably latch on and develop something along the same lines.

In the meantime, I was notified of Kathy Wang's Honeyclient project and the client-side honeypots diploma project at the Laboratory for Dependable Distributed Systems at Rheinisch-Westfälische Technische Hochschule in Aachen.

From PaymentNews:

TowerGroup has announced new research examining the impact that phishing attacks may be having on fraud perpetrated at ATMs and debit POS locations that concludes that losses from fraud due to phishing runs about $81 million annually in the US.

That report is confused: it is looking at card skimming and seems to be conflating that with phishing. This may explain the lower-than-others estimate of $81m, or it may be explained by the fact that they only looked at identifiable banks' losses, not consumer losses and other costs. So I feel this number is a low outlier, rather than truly representative of phishing.

(Addendum: Having read the Tower link, I can now see that they are mostly just looking at the crossover from phishing to ATM fraud.)

There is a lot of buzz on how wireless networks are being used "routinely" to attack people. So far it's all the same: the attacks are generally of access, rarely listening and no known cases of MITMs _even though they are trivial_! Here's a typical case pointed out by Jeroen from El Reg where the attack is misrepresented as a bank hack over wireless:

The data security chief at the Helsinki branch of financial services firm GE Money has been arrested on suspicion of conspiracy to steal €20,000 from the firm's online bank account. The 26 year-old allegedly copied passwords and e-banking software onto a laptop used by accomplices to siphon off money from an unnamed bank.

Investigators told local paper Helsingin Sanomat that the suspects wrongly believed that the use of an insecure wireless network in commission of the crime would mask their tracks. This failed when police identified the MAC address of the machine used to pull off the theft from a router and linked it to a GE Money laptop. Police say that stolen funds have been recovered. Four men have been arrested over the alleged theft with charges expected to follow within the next two months.

Now, we have to read that fairly carefully to figure out what happened, and the information is potentially unreliable, but here goes. To me, it looks like the perpetrator stole the passwords from the inside and then used a wireless connected laptop (in a cafe?) to empty the account. So this is an inside job! The use of the wireless was nothing more than a forlorn hope to cover tracks and is totally incidental to the nature of the crime.

(Also, it doesn't say much for the security at GE Money ... "Maybe they should have employed a CISP" ... or whatever those flyswatter certifications are called.)

Addendum: See here for some new wireless threats.

August 12, 2005

WoT in Pictures, p2p lending, mailtapping

Rick points at a nice page showing lots of OpenPGP web of trust metrics.

The web of trust in OpenPGP is an informal idea based on signing each other's keys. As it was never really specified what this means, there are two schools of thought: the one where "I'll sign anyone's key if they give me the fingerprint" and the other, more European-inspired one that Rick lists as "it normally involves reviewing a proof of their identity." Obviously these two are totally in conflict. Yet the web of trust seems not to care too much, perhaps because nobody would really rely on the web of trust alone to do anything serious.
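To see why the two schools can coexist without the web of trust noticing, here is a heavily simplified sketch of how a GnuPG-style client decides that a key is valid, using the defaults described in the GnuPG documentation: one fully trusted signature or three marginally trusted ones, on a path of at most five steps back to your own key. The real algorithm has more cases; `valid_keys` and the key names are hypothetical.

```python
from collections import defaultdict

# Defaults described in the GnuPG documentation for its classic trust model.
COMPLETES_NEEDED = 1   # fully trusted signatures needed
MARGINALS_NEEDED = 3   # marginally trusted signatures needed
MAX_CERT_DEPTH = 5     # longest signature path back to my own key

def valid_keys(my_keys: set, owner_trust: dict, signatures: list) -> set:
    """Simplified sketch of classic web-of-trust validity.

    my_keys:     my own (ultimately trusted) key ids
    owner_trust: key id -> "full" or "marginal"; how far *I* trust that
                 owner to sign others' keys (hypothetical representation)
    signatures:  (signer, signee) pairs
    """
    signed_by = defaultdict(set)
    for signer, signee in signatures:
        signed_by[signee].add(signer)

    valid = set(my_keys)
    for _ in range(MAX_CERT_DEPTH):          # each pass extends paths by one step
        newly_valid = set()
        for key, signers in signed_by.items():
            if key in valid:
                continue
            introducers = signers & valid     # only signatures by valid keys count
            full = sum(1 for s in introducers
                       if s in my_keys or owner_trust.get(s) == "full")
            marginal = sum(1 for s in introducers
                           if s not in my_keys and owner_trust.get(s) == "marginal")
            if full >= COMPLETES_NEEDED or marginal >= MARGINALS_NEEDED:
                newly_valid.add(key)
        if not newly_valid:
            break
        valid |= newly_valid
    # Nothing above records *why* a signer signed: ID check or fingerprint only.
    return valid
```

Note what the inputs lack: a signature made after checking a passport and one made after glancing at a fingerprint are the same `(signer, signee)` pair, so the computation cannot tell the two schools apart.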

So an open question is due - how many out there believe in the model of "proving identity then signing" and how many out there subscribe to the more informal "show me your fingerprint and I'll trust your nym?"

What's this got to do with Financial Cryptography? PKI, the white elephant of Internet security, is getting a shot in the arm from web of trust. In order to protect web browsing, CACert is issuing certificates for you, based on your subscription and your entry into a web of trust. In one sense they have outsourced (strong) identity checking to subscribers, in another they've said that this is a much better way to get certificates to users, which is where security begins, not ends.

More pennies: I've got my Thunderbird and Firefox back, so now I can see the RSS feeds. I came across this from Risks: How to build software for use in a den of thieves. We'd call that Governance and insider threats in the FC world - some nice tips there though.

PaymentNews reports that PayPal CEO Jeff Jordan presented to Etail 2005:

Nearly 10 percent of all U.S. e-commerce is funneled through PayPal, according to Jordan. One out of seven transactions crosses national boundaries. Consumers in more than 40 countries send PayPal, and those in more than 20 countries receive this currency. "Our goal," he said, "is to be the global standard for online payments."

(More on Paypal.) And more from Scott:

Eliminate the banking middle man -- that's what Zopa's about. Rebecca Jarvis reports for Business 2.0 on what the UK's Richard Duvall is up to with Zopa.

Are you a better lender than a bank is? Richard Duvall, who helped launch Britain's largest online bank, Egg, thinks you are. His new venture, Zopa, is an eBay-like website that lets ordinary citizens borrow money from other regular Joes -- no bank needed.

In mailtapping news from Lynn, a US court of appeals reversed a ruling, and said that ISPs could not copy and read emails. Meanwhile a survey found that small firms were failing to copy and escrow emails as instructed. And we now have the joy of companies competing to datamine the outgoing packets in order to spy on insiders' net habits. The sales line? "every demo results in a sacked employee..."

E-mail wiretap case can proceed, court says

Study Finds Small Securities Firms Still Fail To Comply With SEC E-mail Archiving Regulations

When E-Mail Isn't Monitored

In closing, Everquest II faced off with hackers who had found a bug that let them create currency. We've seen this activity in the DGC world, and it has no doubt hit the PayPal world from time to time; it's what makes payment systems serious.

August 11, 2005

Is Security Compatible with Commerciality?

A debate has erupted across the blogspace, where some security insiders (Tao, EC) are saying that there is real, serious exploit code sitting there waiting to be used, but "if we told you where, we'd have to kill you."

This is an age-old dilemma. The outsiders (Spire) say, "tell us what it is, or we believe you are simply making it up." Or, in more serious negotiating form, "tell us where or you're buying the beers."

I have to agree with that point of view as I've seen the excuse used far too many times, from security to finance to war plans. Both inside and outside. In my experience, when I do find out the truth, the person who made the statement was more often wrong than right, or the situation was badly read, and nowhere near representative.

Then, of course, people say that they have no choice because they are under NDA. Well, we all need to eat, don't we? And we need to maintain faith and reputation for our next job, so the logic goes.

This is a trickier one. Again, I have my reservations. If a situation is a real security risk, then whatever happened to the ethics of a security professional? Are we saying that it's A-OK to claim that one is a security professional, but anything covered by an NDA doesn't count? Or that when a company operates under NDA, it's permitted to conduct practices that would ordinarily be deemed insecure?

Fundamentally, an NDA turns you from whatever you believed you were before into an agent of the company. That's the point. So you are now under the company's agenda - and if the company is not interested in security then you are no longer interested in security, even if the job is chief security blah blah. Is that harsh? Not really; most security companies are strictly interested in selling whatever sells, and they'll sell an inadequate or insecure tool with a pretty security label with no problem whatsoever. Find us a company that withdrew an insecure tool and said it was no longer serving users, and we might have a debate on this one.

At the minimum, once you've signed an NDA, you can't go around purporting to have a public opinion on issues such as disclosure if you won't disclose the things you know yourself. Or, maybe, if you choose to participate in the security practices covered under NDA, you are effectively condoning them as security practice, so you really are making it all up as you go. So in a sense, the only value of these comments is simply as an advert for your "insideness," like the HR people used to mention as a deal breaker.

It is for these reasons that I prefer security to be conducted out in the open - conflicts like these tend to be dealt with. I've written before about how secret security policies are inevitably perverted to other agendas, and I am now wondering whether the forces of anti-security are wider than that, even.

It may be that it is simply incompatible to do security in a closed corporate environment.

Consider the last company you worked at where security was under NDA - was it really secure? Consider the last secure operating system you used - was it one of the closed ones, or one of the free open source implementations with a rabid and angry security forum? Was security just window dressing because customers liked that look, ticked that box?

Recent moves towards commercialism (by two open organisations in the browser field) seem to confirm this; the closer they get to the commercial model, the more the security baby gets thrown out with the bathwater.

What do you think? Is it possible to do security in a closed environment? And how is that done? No BS please - leave out the hype vendors. Who seriously delivers security in a closed environment? And how do they overcome the conflicts?

Or, can't you say because of the NDA?

A Small Experiment with Voting - Mana v. Medici

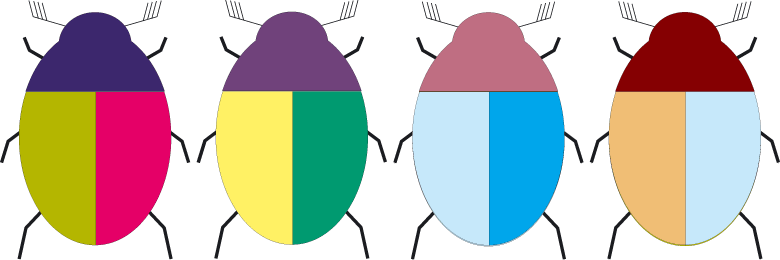

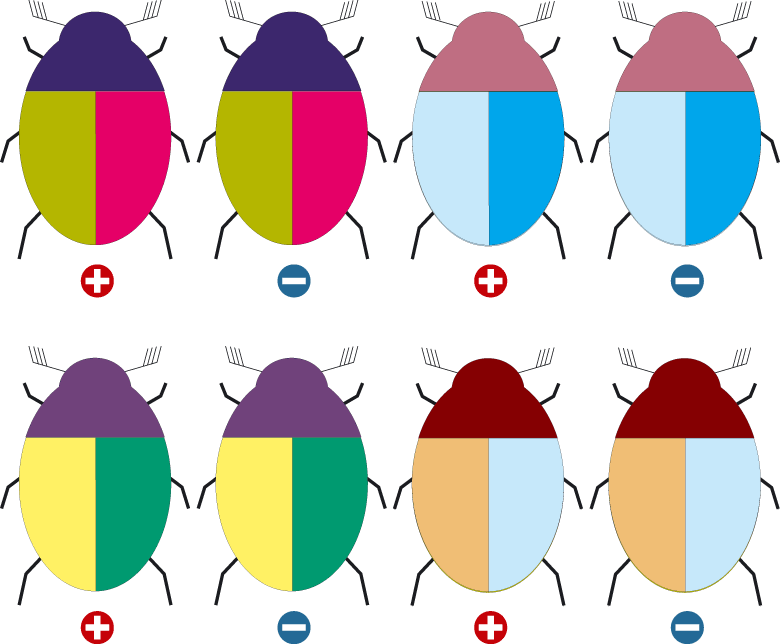

Voting is a particularly controversial application (or feature) for FC because of the difficulty both in setting the requirements and in meeting the 'political requirement' of ensuring a non-interfered vote for each person. I've just got back from an alpine retreat where I participated in a small experiment to test voting with tokens, called Beetle In A Box. The retreat's specific purpose was to do the early work towards building a voting application, so it was fun to actually try out some voting.

Following on from our pressed flower production technique, we 'pressed & laminated' about 100 'beetles,' or symbols looking like squashed beetles. These were paired in plus and minus form, and created in sets of similar symbols, with 5 colours for different purposes. Each person got a set of 10, being 5 subsets of one complementary plus and minus pair each.

The essence of the complicated plus and minus tokens was to try out delegated voting. Each user could delegate their plus token to another user, presumably on the basis that this other user would know more and was respected on this issue. But they could always cast their minus to override the plus, if they changed their minds. Moreover, it is a sad fact of voting life that, unless you are in Australia where political voting is compulsory, most people don't bother to turn up anyway.

To simulate this, we set up 4 questions (allocating 4 colours) to be held at 4 different places - a deliberate conflict. One of the questions was the serious issue of naming the overall project and we'd been instructed to test that; the others were not so essential. Then we pinned up 21 envelopes for all the voters and encouraged people to put their plus tokens in the named envelope of their delegatee.

When voting time came, chaos ensued. Many things went wrong, but out of all that we did indeed get a vote on the critical issue (not that this was considered binding in any way). Here are the stats:

Number of direct voters: 4

Number of delegated votes: 3

Therefore, total votes cast: 7

Winning project name: Mana, with 3 votes.

So, delegated voting increased participation by 75% (from 4 votes to 7), taking total participation to 33% (7 of 21 participants). That's significant - that's a huge improvement over the alternative and indicates that delegated voting may really be useful or even needed. But another statistic indicates there is a lot more that we could have done:

Number of delegated votes, not cast: 9

That is, in the chaos of the game, many more people delegated their votes, but the tokens didn't make it to the ballot. The reasons for this are many: one is just the chaos of understanding the rules and the way they changed on the fly! Another is that many delegatees simply didn't participate at all, and in particular the opinion leaders who collected fat envelopes forgot they were supposed to vote, and just watched the madness around them (in increasing frustration!).
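For anyone who wants to check the participation arithmetic, here is a small Python sketch using only the figures reported above. The last figure is a hypothetical of my own: it assumes each of the 9 stranded tokens belonged to a distinct participant who did not also vote directly.

```python
# Figures as reported for the Beetle In A Box vote on the project name.
ELECTORATE = 21        # envelopes pinned up, one per participant
DIRECT_VOTES = 4       # people who voted in person
DELEGATED_CAST = 3     # delegated plus tokens that reached the ballot
DELEGATED_UNCAST = 9   # delegated tokens stranded in envelopes

total_cast = DIRECT_VOTES + DELEGATED_CAST
uplift = DELEGATED_CAST / DIRECT_VOTES      # gain over direct voting alone
participation = total_cast / ELECTORATE

# Hypothetical: every stranded delegated token also reaches the ballot,
# assuming each belongs to a distinct participant.
potential_cast = total_cast + DELEGATED_UNCAST
potential_participation = potential_cast / ELECTORATE

print(f"votes cast: {total_cast}")
print(f"uplift from delegation: {uplift:.0%}")
print(f"participation: {participation:.0%}")
print(f"potential participation: {potential_participation:.0%}")
```

Run as-is, it reports 7 votes, a 75% uplift and 33% participation; under the stated assumption, getting the stranded tokens to the ballot would have lifted participation to 76% (16 of 21).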

Canny FCers will recognise another flaw in the design - having placed their tokens into envelopes, the delegators then had to become delegatees and collect from their own envelopes. And, if they were not then to attend that meeting (there were 4 conflicting meetings, recall), the delegatees would become delegators again and re-delegate, thus forcing the cycle to start again, ad infinitum.

Most people only went to the pinboard once. So the formal delegation system simply failed on efficiency grounds, and it is lucky that some smart political types did some direct swaps and trades on their delegated votes.

How then to do this with physical tokens is an open question. If one wants infinite delegation, I can't see how to do it efficiently. With a computer system, things become more plausible, but even then how do we model a delegated vote in software?

Is it a token? Not quite, as the delegated vote can be overridden, and thus we need a token that can be yanked back, overridden or redirected. So it could be considered to be an accounting entry - like nymous account money - but even then, the records of a payment from alice to bob need to be reversible.
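One way to read the accounting-entry idea is as an append-only ledger in which nothing is ever deleted: a redirect or a revocation (the minus token) is simply a later entry that supersedes the earlier one, much as a correcting entry does in bookkeeping. The sketch below is a hypothetical Python model under that assumption; the class and method names are my own illustration, not part of any existing system.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Entry:
    voter: str
    delegate: Optional[str]  # None records a revocation (the "minus" token)


@dataclass
class DelegationLedger:
    """Hypothetical append-only record of plus/minus token moves."""
    entries: List[Entry] = field(default_factory=list)

    def delegate(self, voter: str, to: str) -> None:
        # A plus token: a new delegation, or a redirect of an old one.
        self.entries.append(Entry(voter, to))

    def revoke(self, voter: str) -> None:
        # A minus token: yank the delegation back without erasing history.
        self.entries.append(Entry(voter, None))

    def current(self) -> Dict[str, str]:
        """Replay the log; a later entry for a voter supersedes earlier ones."""
        state: Dict[str, str] = {}
        for e in self.entries:
            if e.delegate is None:
                state.pop(e.voter, None)
            else:
                state[e.voter] = e.delegate
        return state


ledger = DelegationLedger()
ledger.delegate("alice", "bob")
ledger.delegate("carol", "bob")
ledger.delegate("alice", "dave")  # alice redirects her plus token
ledger.revoke("carol")            # carol plays her minus token
print(ledger.current())
```

The current state is just alice delegating to dave, yet all four entries remain in the log, so the "payment" from alice to bob is reversed by a record rather than undone by deletion.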

One final result. Because I was omnipresent (running the meeting that took the important vote) I was able to divine which were the delegated votes. And, in this case, if the delegated votes had been stripped out, and only direct voting handled, the result of the election decision would have changed: the winning name would have been Medici, which was what I voted for.

Which I count as fairly compelling evidence that whatever the difficulties in implementing delegated voting, it's a worthwhile goal for the future.
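On the efficiency point: with a computer, the infinite delegation that defeated the pinboard becomes a walk along pointers, with a guard against the delegation loops described earlier. Here is a minimal Python sketch, assuming a direct vote always overrides any delegation the voter made. All names and votes in it are hypothetical, chosen only to show how stripping delegated votes can flip a result, as happened with Mana v. Medici.

```python
from collections import Counter
from typing import Dict, Iterable, Optional, Set


def resolve(voter: str, delegations: Dict[str, str], present: Set[str]) -> Optional[str]:
    """Follow delegations from `voter` until reaching someone who voted.

    Returns None if the chain dead-ends at an absent voter who delegated
    to nobody, or loops back on itself (e.g. fay -> gus -> fay).
    """
    seen: Set[str] = set()
    current = voter
    while current not in present:
        if current in seen or current not in delegations:
            return None
        seen.add(current)
        current = delegations[current]
    return current


def tally(electorate: Iterable[str], ballots: Dict[str, str],
          delegations: Dict[str, str], direct_only: bool = False) -> Counter:
    """Count at most one vote per member of the electorate."""
    present = set(ballots)
    counts: Counter = Counter()
    for voter in electorate:
        if direct_only:
            proxy = voter if voter in present else None
        else:
            proxy = resolve(voter, delegations, present)
        if proxy is not None:
            counts[ballots[proxy]] += 1
    return counts


# Hypothetical electorate and votes, for illustration only.
electorate = ["ann", "bob", "cat", "dan", "eve", "fay", "gus"]
ballots = {"ann": "Mana", "bob": "Medici", "cat": "Medici"}
delegations = {"dan": "ann", "eve": "dan", "fay": "gus", "gus": "fay"}

with_delegation = tally(electorate, ballots, delegations)
direct = tally(electorate, ballots, delegations, direct_only=True)
print(with_delegation, direct)
```

Here eve's vote reaches ann through dan, the fay/gus loop is dropped rather than cycling forever, and Mana wins 3 to 2 with delegation but loses 1 to 2 on direct votes alone. Each chain costs at most one step per voter, which is the efficiency the physical tokens lacked.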

August 05, 2005

tracking tokens

Wired and Boston report on a new mechanism to read a fingerprint from paper. Not the fingerprint of a person touching it, but the fingerprint of the paper itself. Scanning the micro-bumps with a laser is a robust way to create this index, and it even works on plastic cards. (Note: don't be distracted by the marketing bumph about passports; it is way too early to see where this will be used.)

Our recent pressed flowers adventure resulted in a new discovery along similar lines - we can now make a token like a banknote with cheap available tools that is unforgeable and uncopyable. It does this by means of the unique identifier of the flower itself; we can couple this digitally by simply scanning and hashing (a routine act in the ongoing adventure of FC). What's more, it integrates well with the pre-monetary economics that is built into our very genes, if you subscribe to the startling new theory presented in "Shelling Out," a working paper by Nick Szabo.
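To make the "scan and hash" coupling concrete, here is a minimal Python sketch of a hypothetical issuer's register that maps the SHA-256 of a scanned image to a face value and refuses to issue the same scan twice. One caution on the assumption: a fresh scan of the same flower will not produce identical bytes, so the hash binds the registered image file, and checking a physical token still means comparing the flower against that image.

```python
import hashlib
from typing import Dict, Optional


def token_id(scan: bytes) -> str:
    """Fingerprint a scanned image by hashing its exact bytes (SHA-256)."""
    return hashlib.sha256(scan).hexdigest()


class FlowerRegister:
    """Hypothetical issuer's register: token fingerprint -> face value."""

    def __init__(self) -> None:
        self._issued: Dict[str, int] = {}

    def issue(self, scan: bytes, value: int) -> str:
        tid = token_id(scan)
        if tid in self._issued:
            raise ValueError("this scan has already been issued")
        self._issued[tid] = value
        return tid

    def value_of(self, tid: str) -> Optional[int]:
        # None means the fingerprint was never issued by this register.
        return self._issued.get(tid)
```

The bytes passed to `issue` stand in for the image file; in use, the issuer would publish or sign the fingerprint alongside the token.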

In other tracking information, the EFF has started tracking printers that track pages. Some manufacturers print a tiny fingerprint of the printer onto every page that gets produced. Originally "suggested" by monetary authorities so as to trace forgeries and copies of paper money, it will of course make its way into evidence in general court proceedings. Predictably the EFF finds that there is no protection whatsoever for this.

(older DRM techniques by the ECB.)

From the benches of the Montana Supreme Court, a judgement that instantiates the Orwell Society. Dramatically written by Judge Nelson and brave for its refreshing honesty, it recalls that famous line from Scott McNealy, "you have no privacy left, get used to it." It's worth reading for its clear exposition of how your garbage is abandoned and therefore open to collection by ... anyone. But it should also serve as a wakeup call to the limits of privacy. Judge Nelson writes (from Wired):

In short, I know that my personal information is recorded in databases, servers, hard drives and file cabinets all over the world. I know that these portals to the most intimate details of my life are restricted only by the degree of sophistication and goodwill or malevolence of the person, institution, corporation or government that wants access to my data. I also know that much of my life can be reconstructed from the contents of my garbage can.

I don't like living in Orwell's 1984; but I do. And, absent the next extinction event or civil libertarians taking charge of the government (the former being more likely than the latter), the best we can do is try to keep Sam and the sub-Sams on a short leash.

In such a world, we should be delivering privacy ourselves, as we cannot rely on anyone else to do it. In this sense, Phil Zimmermann easily defends his new VoIP product in the recent (popularish) argument with some PKI apologists:

My secure VoIP protocol also requires almost no activation energy, so I expect it to do well.

No more need be said. Go Phil. (See the recent article on Security Usability (PDF only, sorry) for IEEE's Security & Privacy mag as well.)

August 03, 2005

The Phishing Borg - now absorbing IM, spam, viruses, lawyers, courts and you

A dramatic increase in threats to IM (instant messaging or chat): the IMLogic Threat Center reports a 28-fold increase over the last year.

Right on cue. Meanwhile, new downloadable browser tools show that independent researchers at Stanford know where to put the protection: Spoofguard detects and warns against phishing, and PwdHash alters the password calculation so that each transmitted password is site-dependent.
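The idea behind a site-dependent password is simple enough to sketch: derive what gets sent from the user's master password and the site's domain, so a look-alike phishing domain receives a different string from the one the real site expects. The Python below is a simplified illustration of that idea using HMAC-SHA256; it is not PwdHash's actual algorithm or output encoding, and it keys on the full hostname, whereas a real tool would need to pick out the registered domain.

```python
import base64
import hashlib
import hmac
from urllib.parse import urlsplit


def site_password(master: str, url: str, length: int = 12) -> str:
    """Derive a per-site password from a master password and the site's host.

    Simplified sketch: HMAC-SHA256 keyed by the master password over the
    hostname, base64-encoded and truncated. Not the real PwdHash scheme.
    """
    host = urlsplit(url).hostname or ""
    digest = hmac.new(master.encode(), host.encode(), hashlib.sha256).digest()
    return base64.urlsafe_b64encode(digest).decode()[:length]
```

Two pages on the same host yield the same password, while a phishing host that merely looks similar gets an unrelated one, so a password captured there is useless at the real bank.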

Good stuff guys! We need to induct you into the anti-fraud coffee room before you get swallowed up by the anti-borg of secret committees in smoke-filled rooms.

And Korea is looking to legalise class-action suits in cases where small losses make it uneconomic for victims to punish negligent providers.

Much as I wonder if class action suits aren't a net loss to society and shouldn't be treated within the threat model rather than the security model, they do seem to be the only non-technical defence that suppliers will listen to. Such suits and others by regulators are filed against data providers (and losers), banks and Microsoft on various causes. Nobody has yet pinned one directly on phishing, but I give it a better than evens chance that it will be tried on the banks, and then on the software suppliers.

Although it is hard to decipher, a new IBM report states that spam is down from 83% of all email to 67% in June. That's the "good news." The bad news is that this is almost certainly because phishing and viruses have skyrocketed even this year, with IBM reporting that phishing has now reached around 20% and viruses around 4% of all email. The article is ridiculously muddled in its use of numbers, but I make that around a 91% garbage rate in email.
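The 91% figure is just the sum of the three shares, which only works if spam, phishing and viruses are disjoint fractions of the same total, an assumption the muddled article does not spell out. A short Python sketch makes that assumption explicit:

```python
# Shares of all email in June, as read from the IBM figures quoted above.
# Assumption: the three categories are disjoint fractions of the same total.
shares = {"spam": 0.67, "phishing": 0.20, "viruses": 0.04}

garbage = sum(shares.values())
legitimate = 1 - garbage
print(f"garbage rate: {garbage:.0%}, legitimate: {legitimate:.0%}")
```

If IBM counted some phishing inside its spam figure, the garbage rate would be lower, which is one reason to treat the 91% as a rough upper reading.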

To my mind, this confirms predictions made here that phishing is still the #1 threat to email (by value!), browsing and Internet commerce; that viruses are now economically driven by phishing; and that email is dying under the one-two punch of spam and phishing.

Are phishing and related fraud becoming the #1 threat to the net, or are they already there?