September 16, 2005

PayPal protected with Trustbar and Petnames

Here's how your PayPal browsing can be protected with Trustbar:

Apologies for the huge image! Notice the extra little window that has "PayPal, Inc" in it. That's a label that indicates that you have selected this site as one of your trusted ones. You can go even further and type in your own label such as "Internet money" or "How I Pay For Things" in which case it will turn green as a further indicator that you're in control.

This label of yours is called a petname, and indicates that Trustbar has found the certificate that you've labelled as PayPal. And, as that's the certificate used to secure the connection, it is really your PayPal you are talking to, not some other bogus one.

Trustbar also allows you to assign logos to your favourite sites. These logos allow you to recognise which site you are at more quickly than words do. (Scribbler's note: there are more screen shots at Trustbar's new help page.)

These are simple, powerful ideas and the best we have at the moment against phishing. You can do the same thing with the Petname toolbar which just implements the own-label part. It's smaller, neater, and works on more platforms such as OSX and FreeBSD, but is not as powerful.

One thing that both of these tools rely heavily upon is SSL. That's because the SSL certificate is the way they know that it's really the site - if you take away the certificate then there is no way to be sure, and we know that the phishers are generally smart enough to trick any maybes that might be relied upon.

Trustbar allows you to assign a name to a site that hasn't SSL protection - but Petnames does not. In this sense, Trustbar says that most attacks occur outside SSL and we should protect against the majority of attacks, whereas Petnames draws a line in the sand - the site must be using SSL and must be using a certificate in order to make reliable statements. Both of these are valid security statements, and will eventually converge over time.
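To make the petname idea concrete, here is a minimal sketch in Python. It is not how Trustbar or the Petname toolbar is actually coded; the class name `PetnameStore`, the use of a SHA-256 fingerprint, and the placeholder certificate bytes are all assumptions made for illustration. The point it shows is the Petnames line in the sand: the label hangs off the certificate, so no certificate means no label.

```python
import hashlib
from typing import Dict, Optional


class PetnameStore:
    """Hypothetical store mapping certificate fingerprints to user-chosen labels."""

    def __init__(self) -> None:
        self._names: Dict[str, str] = {}

    @staticmethod
    def fingerprint(cert_der: bytes) -> str:
        # Assumption: a SHA-256 hash over the certificate bytes identifies it.
        return hashlib.sha256(cert_der).hexdigest()

    def assign(self, cert_der: bytes, petname: str) -> None:
        # The user labels the certificate, not the URL or the page content.
        self._names[self.fingerprint(cert_der)] = petname

    def lookup(self, cert_der: Optional[bytes]) -> Optional[str]:
        # No certificate (plain HTTP) means no reliable identity, so no petname.
        if cert_der is None:
            return None
        return self._names.get(self.fingerprint(cert_der))


store = PetnameStore()
real_cert = b"placeholder bytes standing in for the real site's certificate"
fake_cert = b"placeholder bytes standing in for a phisher's certificate"
store.assign(real_cert, "How I Pay For Things")

print(store.lookup(real_cert))  # the label the user typed
print(store.lookup(fake_cert))  # None: a different certificate gets no label
print(store.lookup(None))       # None: no SSL, nothing to hang a label on
```

A lookalike site can copy the pixels of the real one, but it cannot present the labelled certificate, so the label simply doesn't appear.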

For security insiders, Philipp posts news of a recent phishing survey. I've skimmed it briefly and it puts heady evidence on the claim that phishing is now institutionalised. Worth a visit!

The Economy of Phishing: A survey of the operations of the phishing market

Christopher Abad

Abstract:

Phishing has been defined as the fraudulent acquisition of personal information by tricking an individual into believing the attacker is a trustworthy entity. Phishing attacks are becoming more sophisticated and are on the rise. In order to develop effective strategies and solutions to combat the phishing problem, one needs to understand the infrastructure in which phishing economies thrive.

We have conducted extensive research to uncover phishing networks. The result is detailed analysis from 3,900,000 phishing e-mails, 220,000 messages collected from 13 key phishing-related chat rooms, 13,000 chat rooms and 48,000 users, which were spidered across six chat networks and 4,400 compromised hosts used in botnets.

This paper presents the findings from this research as well as an analysis of the phishing infrastructure.

Closing notes: I am updating this page as new info comes in.

Another note for journalists: over at the anti-fraud mailing list, the Anti-Fraud Coffee Room, you can find the independent researchers who are building tools based on the nature of the attack, not the current flavour of the month.

September 14, 2005

RSA keys - crunchable at 1024?

New factoring hardware designs suggest that 1024 bit numbers can be factored for $1 million. That's significant - that brings ordinary keys into the reach of ordinary agencies.

If so, that means most intelligence agencies can probably already crunch most common key sizes. It still means that the capability is likely limited to intelligence agencies, which is some comfort for many of us, but no comfort if you happen to live in a country where civil liberties are not well respected and keys and data are considered to be "on loan" to citizens - you be the judge on that call.

Either way, with SHA1 also suffering badly at the hands of the Shandong marauders, it puts DSA into critical territory - not expected to survive even given emergency surgery and definitely no longer Pareto-complete. For RSA keys, jump them up to 2048 or 4096 if you can afford the CPU.
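For a rough feel of what jumping the key size buys, here is a small Python sketch using the standard heuristic running-time expression for the Number Field Sieve, L_n[1/3, (64/9)^(1/3)], with the o(1) term dropped. That simplification is an assumption, so the numbers are only good for comparing key sizes against each other. They say nothing about dollars, and nothing about the special-purpose hardware discussed in this post. The function name `gnfs_work_bits` is made up for the example.

```python
import math


def gnfs_work_bits(modulus_bits: int) -> float:
    """log2 of the heuristic NFS cost L_n[1/3, (64/9)^(1/3)], ignoring o(1)."""
    ln_n = modulus_bits * math.log(2)      # natural log of a modulus of this size
    c = (64 / 9) ** (1 / 3)                # the usual NFS constant, about 1.923
    ln_cost = c * ln_n ** (1 / 3) * math.log(ln_n) ** (2 / 3)
    return ln_cost / math.log(2)           # express the cost as "bits of work"


for bits in (1024, 2048, 4096):
    print(f"{bits}-bit modulus: about 2^{gnfs_work_bits(bits):.0f} operations")
```

On this crude estimate the step from 1024 to 2048 bits adds roughly 30 bits of work, that is, about a billion times more effort for the attacker, while the CPU cost to the legitimate user grows far more slowly.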

Here is the source of info, posted by Steve Bellovin.

Open to the Public

DATE: TODAY * TODAY * TODAY * WEDNESDAY, Sept. 14 2005

TIME: 4:00 p.m. - 5:30 p.m.

PLACE: 32-G575, Stata Center, 32 Vassar Street

TITLE: Special-Purpose Hardware for Integer Factoring

SPEAKER: Eran Tromer, Weizmann Institute

Factoring of large integers is of considerable interest in cryptography and algorithmic number theory. In the quest for factorization of larger integers, the present bottleneck lies in the sieving and matrix steps of the Number Field Sieve algorithm. In a series of works, several special-purpose hardware architectures for these steps were proposed and evaluated.

The use of custom hardware, as opposed to the traditional RAM model, offers major benefits (beyond plain reduction of overheads): the possibility of vast fine-grained parallelism, and the chance to identify and exploit technological tradeoffs at the algorithmic level.

Taken together, these works have reduced the cost of factoring by many orders of magnitude, making it feasible, for example, to factor 1024-bit integers within one year at the cost of about US$1M (as opposed to the trillions of US$ forecasted previously). This talk will survey these results, emphasizing the underlying general ideas.

Joint works with Adi Shamir, Arjen Lenstra, Willi Geiselmann, Rainer Steinwandt, Hubert Köpfer, Jim Tomlinson, Wil Kortsmit, Bruce Dodson, James Hughes and Paul Leyland.

Some other notes:

http://www.keylength.com/index.php

http://citeseer.ist.psu.edu/287428.html

September 11, 2005

Spooks' corner: listening to typing, Spycatcher, and talking to Tolkachev

A team of UCB researchers have coupled the sound of typing to various artificial intelligence learning techniques and recovered the text that was being typed. This recalls to mind Peter Wright's work. Poking around the net, I found that Shamir and Tromer started from here:

Preceding modern computers, one may recall MI5's "ENGULF" technique (recounted in Peter Wright's book Spycatcher), whereby a phone tap was used to eavesdrop on the operation of an Egyptian embassy's Hagelin cipher machine, thereby recovering its secret key.

I haven't _Spycatcher_ to hand, but from memory the bug was set up by fiddling the phone in the same room to act as a microphone, and the different sounds of the keys being pressed on the cipher machine were what allowed the secret key to be recovered. Here's some more of Wright's basic techniques:

One of Peter Wright's successes was in listening to (i.e. bugging) the actions of a mechanical cipher machine, in order to break their encryption. This operation was code-named ENGULF, and enabled MI5 to read the cipher of the Egyptian embassy in London at the time of the Suez crisis. Another cipher-reading operation, code-named STOCKADE, read the French embassy cipher by using the electro-magnetic echoes of the input teleprinter which appeared on the output of the cipher machine. Unfortunately, Wright says this operation "was a graphic illustration of the limitations of intelligence" - Britain was blocked by the French from joining the Common Market and no amount of bugging could change that outcome.

Particularly interesting is MI5's invention code-named RAFTER, which is used to detect the frequency a radio receiver is tuned to, by tracing emissions from the receiver's local oscillator circuit. RAFTER was used against the Soviet embassy and consulate in London to detect whether they were listening in to A4-watcher radios. Wright also used this technique to try to track down Soviet "illegals" (covert agents) in London who received their instructions by radio from the USSR.

Unlike Wright's techniques from the 60s, the UCB team and their forerunners have the ability to couple up their information to vastly more powerful processing (Ed Felten comments, the paper and pointer from Adam). They show that the technique can not only extract pretty accurate text, but can do so after listening to only 10-15 minutes of typing without prior clues.

That's a pretty impressive achievement! Does this mean that next time a virus invades your PC, you also need to worry about whether it captures your microphone and starts listening to your password typing? No, it's still not that likely, as if the audio card can be grabbed your Windows PC is probably "owned" already and the keyboard will be read directly. Mind you, the secure Mac that you use to do your online banking next to it might be in trouble :-)

While we are on the subject, Adam also points at (Bruce who points at) the CIA's Tolkachev case, the story of an agent who passed details on Russian avionics until caught in 1985 (and executed a year later for high treason).

The tradecraft information in there is pretty interesting. Oddly, for all their technical capability the thing that worked best was old-fashioned systems. At least the way the story reads, microfilm cameras, personal crypto-communicators and efforts to forge library passes all failed to make the grade and simpler systems were used:

In November 1981, Tolkachev was passed a commercially purchased shortwave radio and two one-time pads, with accompanying instructions, as part of an "Interim-One-Way Link" (IOWL) base-to-agent alternate communication system. He was also passed a demodulator unit, which was to be connected to the short wave radio when a message was to be received.

Tolkachev was directed to tune into a certain short wave frequency at specific times and days with his demodulator unit connected to his radio to capture the message being sent. Each broadcast lasted 10 minutes, which included the transmission of any live message as well as dummy messages. The agent could later break out the message by scrolling it out on the screen of the demodulator unit. The first three digits of the message would indicate whether a live message was included for him, in which case he would scroll out the message, contained in five-digit groups, and decode the message using his one-time pad. Using this system, Tolkachev could receive over 400 five-digit groups in any one message.

Tolkachev tried to use this IOWL system, but he later informed his case officer that he was unable to securely monitor these broadcasts at the times indicated (evening hours) because he had no privacy in his apartment. He also said that he could not adhere to a different evening broadcast schedule by waiting until his wife and son went to bed, because he always went to bed before they did.

As a result, the broadcasts were changed to the morning hours of certain workdays, during which Tolkachev would come home from work using a suitable pretext. This system also ran afoul of bad luck and Soviet security. Tolkachev's institute initiated new security procedures that made it virtually impossible for him to leave the office during work hours without written permission. In December 1982, Tolkachev returned his IOWL equipment, broadcast schedule, instructions, and one-time pad to his case officer. The CIA was never able to use this system to set up an unscheduled meeting with him.

Sounds like a familiar story! The most important of Kerckhoffs' 6 laws is the last one, which says that a crypto-system must be usable. The article also describes another paired device that could exchange encrypted messages over distances of a few hundred metres, with similar results (albeit with some successful message deliveries).
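For readers who haven't met a numeric one-time pad, the five-digit-group decoding mentioned in the quoted passage can be sketched in a few lines of Python. The article does not say how the pad digits were combined with the message, so the digit-by-digit addition modulo 10 (no carries) used here is an assumption, chosen because it is the classic pencil-and-paper method. The function names and the sample digits are invented for the example.

```python
import secrets
from typing import List

GROUP = 5  # digits per group, as in the quoted passage


def make_pad(n_groups: int) -> List[str]:
    """Generate a fresh pad of random five-digit groups."""
    return ["".join(str(secrets.randbelow(10)) for _ in range(GROUP))
            for _ in range(n_groups)]


def _combine(groups: List[str], pad: List[str], sign: int) -> List[str]:
    if len(pad) < len(groups):
        raise ValueError("pad too short: never reuse pad material")
    out = []
    for group, key in zip(groups, pad):
        # Digit-wise arithmetic modulo 10, with no carry between digits.
        out.append("".join(str((int(g) + sign * int(k)) % 10)
                           for g, k in zip(group, key)))
    return out


def encrypt(plain_groups: List[str], pad: List[str]) -> List[str]:
    return _combine(plain_groups, pad, +1)


def decrypt(cipher_groups: List[str], pad: List[str]) -> List[str]:
    return _combine(cipher_groups, pad, -1)


pad = make_pad(2)
message = ["90210", "55555"]          # made-up code groups
sent = encrypt(message, pad)
print(sent, "->", decrypt(sent, pad))
```

The maths is trivial, which is rather the point of the story: the crypto was never the weak link, the agent's ability to sit down in private and use it was.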

September 10, 2005

Open Source Insurance, Dumb Things, Shuttle Reliability

(Perilocity reports that) Lloyd's and OSRM are to issue open source insurance, including cover against being attacked by commercial vendors over IP claims.

(Adam -> Bruce -> ) an article on the "Six Dumbest Ideas in Computer Security" by Marcus Ranum. I'm not sure who Marcus is but his name keeps cropping up - and his list is pretty good:

- Default Permit (open by default)

- Enumerating Badness (cover all those we know about)

- Penetrate and Patch

- Hacking is Cool

- Educating Users

- Action is Better Than Inaction

I even agree with them, although I have my qualms about these two "minor dumbs:"

- "Let's go production with it now and we can secure it later" - no, you won't. A better question to ask yourself is "If we don't have time to do it correctly now, will we have time to do it over once it's broken?" Sometimes, building a system that is in constant need of repair means you will spend years investing in turd polish because you were unwilling to spend days getting the job done right in the first place.

The reason this doesn't work is basic economics. You can't generate revenues until the business model is proven and working, and you can't secure things properly until you've got a) the revenues to do so and b) the proven business model to protect! The security field is littered with business models that secured properly and firstly, but very few of them were successful, and often that was sufficient reason for their failure.

Which is not to dispute the basic logic that most production systems defer security until later and later never comes ... but there is an economic incentives situation working here that more or less explains why - only valuable things are secured, and a system that is not in production is not valuable.

- "We can't stop the occasional problem" - yes, you can. Would you travel on commercial airliners if you thought that the aviation industry took this approach with your life? I didn't think so.

There are several errors here, starting with a badly formed premise leading to can/can't arguments. Secondly, we aren't in general risking our life, just our computer (and identity as of late...). Thirdly, it's a risk-based thing - there is no Axiomatic Right From On High that you have to have secure computing, nor be able to drive safely to work, nor fly.

Indeed, no less than Richard Feynman is quoted (to support #3), in a piece that talks about how to deal, and misdeal, with the occasional problem.

Richard Fenyman's [sic] "Personal Observations on the Reliability of the Space Shuttle" used to be required reading for the software engineers that I hired. It contains some profound thoughts on expectation of reliability and how it is achieved in complex systems. In a nutshell its meaning to programmers is: "Unless your system was supposed to be hackable then it shouldn't be hackable."

Feynman found that the engineering approach to Shuttle problems was (often or sometimes) to rewire the procedures. Instead of fixing them, the engineers would move the problems into the safety zone created conveniently by design tolerances. Insert here the normal management pressures including the temptation to call the reliability as 1 in 100,000 where 1 in 100 is more likely! (And even that seems too low to me.)

Predictably, Feynman suggests not doing that, and finishes with this quote:

"For a successful technology, reality must take precedence over public relations, for nature cannot be fooled."

A true engineer :-)

See earlier writings on failure in complex systems and also compare Feynman's comments on the software and hardware reliability with this article and earlier comments.

September 06, 2005

IP on IP

One class of finance applications that is interesting is that of developing new Intellectual Property (IP) over the net (a.k.a. IP). Following on from Ideas markets and Task markets, David points to ransomware, a concept where a piece of art or other IP is freed into the public domain once a certain sum is reached.

People seem ready for this idea. I've seen a lot of indications that there is readiness to try this from the open source (Horde) and arts communities. The tech is relatively solvable (I can say that because I built it way back when...) but the cultural issues and business concepts surrounding IP in a community setting are still holding back the push.

Meanwhile, the CIA has decided to open up a little and build something like what we do on the net:

Opening up the CIA

Porter Goss plans to launch a new wing of the CIA that will sort through non-secret data

By TIMOTHY J. BURGER Aug. 15, 2005

In what experts say is a welcome nod to common sense, the CIA, having spent billions over the years on undercover agents, phone taps and the like, plans to create a large wing in the spookhouse dedicated to sorting through various forms of data that are not secret - such as research articles, religious tracts, websites, even phone books - but yet could be vital to national security. Senior intelligence officials tell TIME that CIA Director Porter Goss plans to launch by Oct. 1 an "open source" unit that will greatly expand on the work of the respected but cash-strapped office that currently translates...

The reason this is interesting is their obscure reference to translation, which we can reverse engineer with a little intelligence: the way that the spooks get things translated is to farm out paragraphs to different people and then combine them. They do this so that nobody knows the complete picture and therefore the translators can't easily spy on them.

Now, this farming out of packets is something that we know how to do using FC over the net. In fact, we can do it well with the tech we have already built (authenticated, direct-cash-settled, pseudonymous, reliable, traceable or untraceable) which would support remote secret-but-managed translators so well it'd be scary. That they haven't figured it out as yet is a bit of a surprise. Hmm, no, apparently it's scary, says Eric Umansky.
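The splitting-and-recombining part of that is simple enough to sketch. The Python below is only an illustration of farming out paragraphs so that no single translator sees two adjacent ones. The names `farm_out` and `recombine` are invented, the round-robin assignment is one assumed policy among many, and everything that makes it real FC (authentication, payment, pseudonyms) is deliberately left out.

```python
from typing import Dict, List, Tuple

Job = Tuple[int, str]  # (position in the document, paragraph text)


def farm_out(paragraphs: List[str], translators: List[str]) -> Dict[str, List[Job]]:
    """Deal paragraphs out round-robin so no translator holds adjacent ones."""
    if len(translators) < 2:
        raise ValueError("need at least two translators to hide the full picture")
    jobs: Dict[str, List[Job]] = {name: [] for name in translators}
    for index, text in enumerate(paragraphs):
        jobs[translators[index % len(translators)]].append((index, text))
    return jobs


def recombine(results: Dict[str, List[Job]]) -> List[str]:
    """Put the returned pieces back into document order."""
    pieces = [job for work in results.values() for job in work]
    return [text for _, text in sorted(pieces)]


document = ["para 0", "para 1", "para 2", "para 3", "para 4"]
jobs = farm_out(document, ["alice", "bob", "carol"])  # hypothetical pseudonyms
print(jobs["alice"])          # only paragraphs 0 and 3
print(recombine(jobs))        # the original order, restored by the coordinator
```

Only the coordinator holding the index numbers ever sees the whole document, and that is the property the spooks are after.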

Unfortunately, they didn't open up enough to publish their article for free, and on one page at least Time were asking $$$ for the rest. More found here:

... foreign-language broadcasts and documents like declarations by extremist clerics. The budget, which could be in the ballpark of $100 million, is to be carefully monitored by John Negroponte, the Director of National Intelligence (DNI), who discussed the new division with Goss in a meeting late last month. "We will want this to be a separate, identifiable line in the CIA program so we know precisely what this center has in terms of investment, and we don't want money moved from it without [Negroponte's] approval," said a senior official in the DNI's office. Critics have charged in the past that despite the proven value of open-source information, the government has tended to give more prominence to reports gained through cloak-and-dagger efforts. One glaring example: the CIA failed in 1998 to predict a nuclear test in India, even though the country's Prime Minister had campaigned on a platform promising a robust atomic-weapons program.

"If it doesn't have the SECRET stamp on it, it really isn't treated very seriously," says Michael Scheuer, former chief of the CIA's Osama bin Laden unit. The idea of an open-source unit didn't gain traction until a White House commission recommended creating one last spring. Utilizing it will require "cultural and attitudinal changes," says the senior DNI official. Sure, watching TV and listening to the radio may not sound terribly sexy, but, says Scheuer, "there's no better way to find out what Osama bin Laden's going to do than to read what he says." --By Timothy J. Burger

So what's this got to do with Intellectual Property? Well, all the systems that will work to distribute IP over IP (and especially what is being discussed at the moment) also look uncannily like systems designed to pass intelligence around. It's no wonder - they are both combining small parts from many places and creating greater works from them. Content management is not the exclusive domain of the recording world.

SSL v2 Must Die - Notice of Extinction to be issued

A Notice of Extinction for prehistoric SSL v2 web servers is being typed up as we speak. This dinosaur should have been retired net-centuries ago, and it falls to Mozilla to clean up.

In your browser, turn off SSL v2 (a two-clawed footprint in protocol evolution). Go here and follow the instructions. You may discover some web sites that can't be connected to in HTTPS mode. Let everyone know where they are and to avoid them. (Add to bug 1 or bug 2.)

Maybe they'll receive a Notice of Imminent Extinction. When I last looked at SecuritySpace there were no more than 4445 of them, about 2%. But Gerv reports it is down to 2000 or so. (Measurement of websites is not an accurate science.)

Elsewhere, Eric Rescorla published some slides on a talk he'd given on "Evidence" (apologies, URL mislaid). Eric is the person who wrote the book on SSL and TLS (literally) and also served as the editor of the IETF committee. In this talk, he presented the case for "evidence-based security" which he refers to as looking at the evidence and acting on what it tells you. Very welcome to see this approach start to take root.

Another factoid - relevant to this post - he gave was that the half-life of an OpenSSL exploit is about 50 days (see chart half way down). That's the time it takes for half of the OpenSSL servers out there to be patched with a known exploit fix. Later on, he states that the half-life for Windows platforms with automated patching is 21 days for external machines and 62 days for internal machines (presumably inside some corporate net). This is good news: it means there isn't really any point in delaying the extinction of SSL v2. The sooner browsers ditch it the sooner the dinosaurs will be retired - we can actually make a big difference in 50 days or so.
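To see what those half-lives imply, here is a back-of-envelope Python sketch. It assumes patching follows a clean exponential decay, which is a simplification (real curves tend to flatten into a long tail of machines that never get patched), and the function name `unpatched_fraction` is made up for the example. Only the half-life figures of 50, 21 and 62 days come from the talk.

```python
def unpatched_fraction(days: float, half_life_days: float) -> float:
    """Fraction of machines still unpatched, assuming pure exponential decay."""
    return 0.5 ** (days / half_life_days)


half_lives = {
    "OpenSSL servers": 50,
    "Windows, external": 21,
    "Windows, internal": 62,
}

for population, half_life in half_lives.items():
    for days in (50, 100, 200):
        left = unpatched_fraction(days, half_life)
        print(f"{population}: {left:.1%} unpatched after {days} days")
```

On that assumption, half the OpenSSL population has moved after 50 days and three quarters after 100, which is the sense in which a browser-side deadline could make a big difference quickly.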

Why is this important? Why do we care that a small group of sites are still running SSL v2? Here's why - it feeds into phishing:

1. In order for browsers to talk to these sites, they still perform the SSL v2 Hello.

2. Which means they cannot talk the TLS hello.

3. Which means that servers like Apache cannot implement TLS features to operate multiple web sites securely through multiple certificates.

4. Which further means that the spread of TLS (a.k.a. SSL) is slowed down dramatically (only one protected site per IP number - schlock!).

5. Which finally means that anti-phishing efforts at the browser level haven't a leg to stand on when it comes to protecting 99% of the web.

Until *all* sites stop talking SSL v2, browsers will continue to talk SSL v2. Which means the anti-phishing features we have been building and promoting are somewhat held back because they don't so easily protect everything.

(There's more to it than that, but that's the general effect: one important factor in addressing phishing is more TLS. To get more TLS we have to get rid of SSL v2.)
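The TLS feature in question is the server name carried in the TLS hello, which lets one IP number serve many certificates. As a sketch of what "no SSL v2, TLS hello only" looks like from the client side, here is how it is expressed with Python's standard `ssl` module today. This is a modern API used purely for illustration, not what browsers of 2005 did, and the floor of TLS 1.2 is my own choice for the example.

```python
import ssl


def modern_client_context() -> ssl.SSLContext:
    """Client settings that never send an SSL v2 Hello."""
    # The default context verifies certificates and checks hostnames.
    ctx = ssl.create_default_context()
    # Assumed policy for this sketch: refuse anything older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = modern_client_context()
print("minimum version:", ctx.minimum_version.name)
print("hostname checking:", ctx.check_hostname)
print("server name indication available:", ssl.HAS_SNI)
```

When such a context wraps a connection with `ctx.wrap_socket(sock, server_hostname="example.com")` (a placeholder host), the name goes into the TLS hello, and the server can pick the matching certificate. An SSL v2 Hello has nowhere to put that name, which is the whole problem described in the list above.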

September 01, 2005

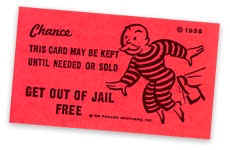

KPMG establishes the price of the get-out-of-jail card

The monopoly game of accounting goes on another round. A few rounds back, Arthur Andersen was knocked out, and now KPMG is losing its houses on the plush side of the board:

Accounting firm KPMG LLP on Monday agreed to pay $456 million and to cooperate with authorities investigating tax shelter deals. Rival Ernst & Young LLP remains under grand jury investigation for its role in selling shelters.

which works out as $300k per partner for the get-out-of-jail card. Given how much each partner makes per year, this looks like no more than a blip in their yearly income; the more important question is ... whither accounting reputation now?

Serious Financial Cryptographers have always known that accounting practices and audits are but a fig leaf of respectability. Arthur Andersen seemed to blow away that paltry covering for the first time, according to the public's viewpoint. Above we see that Ernst & Young are also under investigation, and there is every reason to believe that they were all doing it, whatever it was.

What "it" was is somewhat apropos - raking in huge fees for shady transactions. No matter your views on government, taxes and the equally shady concept of trading justice that resulted in a fine but no indictment, what is clear is that the accountants are not serving the public interest.

Serving the public interest by means of public audits is their being and meaning in life. It is the reason they are special, the reason they have privileges and the reason they can ask high fees. It's the reason that the regulator instructs public companies to get audited. It's the reason that any difficult hidden thing gets a secret report from an auditor, for a fat fee.

For this privilege they get to serve the public interest. It is a very easy test to apply and equally easy to see that this not terrifically high standard is not being met by the public auditors.

For this privilege the accountants have earnt huge fees for huge periods. We can fairly comfortably agree that they got out their rewards; no partner at a big N firm could reasonably claim that too much was asked of them for too little pay.

I have no hesitation therefore in stripping from them any specialness and calling for the end to KPMG. And the rest - society needs to move on and consider other ways in which we audit and confirm to our public that what we are doing is in the interests of our shareholders.

I know that ignoring these words is easy - and that "jobs are at stake." But those are poor excuses for supporting a rotten system. Why does a poor job (or worse - basically theft) by the auditors mean that their jobs must be protected?

Before the veins burst, here's today's ludicrous audit news:

CardSystems auditor completes compliance report

Thu Sep 1, 2005 11:31 AM ET

NEW YORK, Sept 1 (Reuters) - Payments processor CardSystems Solutions Inc., where a security breach exposed more than 40 million credit card accounts to fraud, on Thursday said its auditor had completed a report to payment networks and concluded it complies with industry data-security standards.

CardSystems, which handles payments for more than 119,000 merchants, in July hired AmbironTrustWave, a data security assessment firm, to review its compliance with payment card industry security standards. The report was submitted to MasterCard, Visa, American Express Co. (AXP.N) and Morgan Stanley's (MWD.N) Discover on Wednesday, as scheduled.

But they already had the t-shirt that said "I got my systems audited and only exposed 40 million accounts..." No mention of what happened to the predecessor auditor. Anyways, back to the monopoly game, I wonder who's going to pick up Mayfair?