May 29, 2006

Users do not need online banking

I drew a bit of flak on my post examining Opera on security. (Summary -- reading from the same prayer book as always.) One thing keeps popping up though and it needs addressing. This is the persistent notion that users need access to their online banks. This is bunk, and the reasons are quite strong.

It is what the Americans call a strawman. It may be true at some level that the users need access to their online banking. But it is not true that the browser manufacturers are totally responsible for that provision. They are not.

The users have alternates -- at many levels. All users of Firefox and Opera generally have another browser. All users of banks have an ability to walk to the branch. Most users have more than one bank, most American users have a dozen. All banks have an ability to ship a downloaded client, or a hardware token. All server operators have an ability to upgrade. There are so many alternates in this equation that it would take days or months to document them all (it's probably already available from Netcraft or securityspace or verisign for $2500, and if it wasn't going to put you to sleep, it would be free, here).

All of these questions of access can be solved, notwithstanding notable exceptions to the above generalisms. Why then do the browser manufacturers take it upon their heads to assume complete responsibility for this? Well, perhaps we can see the answer in this response by Hallvord:

>> (Do you think the commerciality of the equation might
>> explain the laggardness of browser manufacturers here?)
>
> Now that's complete rubbish. Users feeling secure is essential to
> our existence while we get none of the payments to ISPs for those
> non-shared IP addresses. Where is the logic in this accusation?

Although every one of the other actors above has alternatives, they all cost money. Their browser does not. The banks make money on fees, and the users *pay* for their banking access. They choose the cheapest access because they are smart. The banks choose online banking because it reduces branch staff and telephone support staff. So they are smart - they save money that way. Online server operators do not upgrade because upgrading a server is costly. Sysadmin time, user disruption, etc etc. So they only do it when their business pays them to do it. That's smart. (CAs don't bother to check Id because it saves them money - smart. Until someone can show them why they should, they won't, because they are smart, and so are their customers!)

So for every one of those (other) actors, need is a relative thing, which disappears absolutely if the price is not right. I need online banking, but if it's more than $10 a month, I don't need it.

Browsers on the other hand have no money in the equation (somewhat a generalism, but not an unreliable one). They apparently take on all the responsibility for user security for free, for reasons lost in the dim dark corridors of history. They have none of the cruel jungle feedback of the dollar to inform their security, they have instead the infinitely forgiving parental corporation that tells them what to do (Microsoft or Apple) or the feel-good social benefit of open source coolness (KDE, Mozilla) or the cozy position in the "user trust matrix" that comes with a handy dandy prayer book that tells you what to do if they happen to not have the others (Opera).

Browsers are therefore blinded to the alternates that all users have and use, and the users being the silly fools that they are aren't that bothered to inform browsers what they are up to. Because browser manufacturers do not see their sales go down and are therefore not part of the cost-saving cycle of general life, they have no feedback to rectify mistakes, and they fall victim to such stupidities as "browser users need their online banking, and we are responsible for that."

Browser manufacturers are just another victim in this game. Unfortunately, there are other victims - the users - and nobody much takes on their case, although everyone says they do. When I take on the case of the users, I necessarily cannot take on the case of the browser manufacturers - victims though they may be. I'm here to tell you that "we the users" think your reasons for not doing security are daft, and please don't invoke our names in it.

May 28, 2006

British Columbia Supreme Court rules that you should lie back and enjoy it

A little sunday morning governance nightmare. Over in Canada, a media entrepreneur sued his ex-law firm:

Mr. Cheikes says that in 1997 [his lawyer] Mr. Strother advised him he could not legally operate his tax-shelter business because of tax rule changes. Mr. Cheikes shut operations down that fall and asked Mr. Strother to find ways to make Monarch compliant so that the company could re-open. A year later, he found out that Mr. Strother had become a 50% partner in a movie tax shelter business called Sentinel Hill, and that [law firm] Davis & Co. were its attorneys. Mr. Cheikes says that from 1998 to 2002, Sentinel Hill made $140-million in total profits, that Mr. Strother made $32-million for himself and that Davis & Co. had been paid $9-million in fees.

Now, as written, this is a slam dunk, and in absence of a defence, we should be looking at what amounts to fraud here - breach of fiduciary trust, theft of trade secrets and business processes, etc etc.

A few words on where this all comes from. Why do we regularly question audits in the governance department of financial cryptography? Partly it is because the auditor's actual product is so disconnected from what people need. But the big underlying concern - the thing that makes people weak at the news - is the potential for abuse.

And this is much the same for your lawyer. They both get deep into your business. They are your totally trusted parties - TTPs in security lingo. This doesn't mean you trust them because you think they are trustworthy sorts, they speak with a silver tongue and you'd be happy to wave your daughter off with one of them.

No, quite the reverse: it means you have no choice but to trust them. The correct word is vulnerability, not trust. You are totally vulnerable to your lawyer and your auditor, more so than your spouse, and if they wish to rape your business, the only thing that will stop them is if you manage to get out of there with your assets firmly buttoned up. The odds are firmly against that - you are quite seriously relying almost completely on convention, reputation and the strength of the courts here, not on your ability to detect and protect the fraud as you are with almost all other attackers.

Which is why over time institutions have arisen to help you with your vulnerability. One of those institutions is the courts themselves, which is why this is such a shocker:

For example, the B.C. Supreme court agreed with Mr. Strother's argument that he had no obligation to correct his mistaken legal advice even though that advice had led to the closure of Monarch. The lower court also agreed with Davis & Co.'s contention that it should be able to represent two competitors in a business without being obliged to tell one what the other is doing.

What the hell are they smoking in B.C.? The Supreme Court is effectively instructing the vulnerable client to lie back and enjoy it. There is no obligation in words, and it's not because it would be silly to write down "thou shalt not rape your client," but simply because if we write it down, enterprising legal arbitrageurs will realise it's ok to rape as long as they do it using different words. It's for reasons like this that you have "reasonable man" tests - as when your Supreme Court judges are stoked up on the finest that B.C. can offer, we need something to bring them back to reality. It's for reasons like this we also have appeals courts.

In 2003, the B.C. Supreme Court heard the case but found no wrongdoing. Two years later, however, the B.C. Court of Appeal overturned that ruling, finding the lawyer and firm guilty. Mr. Strother was ordered to pay back the $32-million he had made and Davis & Co. was ordered to return $7-million of the $9-million in fees to Mr. Cheikes and his partners.

The high-stakes case was appealed again and the Supreme Court of Canada has granted leave to appeal, probably in October.

To be fair, this article was written from one side. I'd love to hear the case for the law firm! I'd also love to hear what people in BC think about that - is that a law firm you'd go to? What does a client of that law firm think when she reads about the case over her Sunday morning coffee?

May 26, 2006

How much is all my email worth?

I have a research question. How much is all my email worth? As a risk / threat / management question.

Of course, that's a difficult thing to price. Normally we would price a thing by checking the market for the thing. So what market deals with such things?

We could look at the various black markets but they are more focussed on specific things not massive data. Sorry, bad guys, not your day.

Alternatively, let's look at the US data brokers market. There, lots and lots of data is shared without necessarily concentrating on tiny pickings like credit theft identifiers. (Some of it you might know about, and you may even be rewarded for some of it. Much is just plain stolen out of sight. But that's not today's question.) So how much would one of those data brokers pay for *full* access to my mailbox?

Let's assume I'm a standard boring rich country middle class worker bee.

Another way to look at this is to look at Google. It makes most of its money in advertising, and it does this on the tiny hook of your search query. It is also experimenting with "catalogue your hard drive" products (as with Apple's Spotlight, and no doubt Microsoft and Yahoo are hyperventilating over this already). So it must have a view as to the value of *everything*.

So, what would it be worth to *sell* the entire monitored contents of my email, etc, for a year to Yahoo, Google, Microsoft, or Apple? Imagine a market where instead of credit card offers to my dog clogging up my mailbox, I get data sharing agreements from the big friendly net media conglomerates.

www.google.com/headspecials

Failing to nail your hammer? Your marketing seems like all thumbs?

Try Google's get-in-his-head program.

Today only, Iang's emails, buy one, get two free.

Does anyone know any data brokers? Does anyone have hooks into Google that can estimate this?

May 24, 2006

CFP - W. Economics of Securing the Information Infrastructure

October 23-24, 2006 Washington, DC

SECOND CALL FOR PAPERS

Our information infrastructure suffers from decades-old vulnerabilities, from the low-level algorithms that select communications routes to the application-level services on which we are becoming increasingly dependent. Are we investing enough to protect our infrastructure? How can we best overcome the inevitable bootstrapping problems that impede efforts to add security to this infrastructure? Who stands to benefit and who stands to lose as security features are integrated into these basic services? How can technology investment decisions best be presented to policymakers?

We invite infrastructure providers, developers, social scientists, computer scientists, legal scholars, security engineers, and especially policymakers to help address these and other related questions. Authors of accepted papers will have the opportunity to present their work to government and corporate policymakers. We encourage collaborative research from authors in multiple fields and multiple institutions.

Submissions Due: August 6, 2006 (11:59PM PST)

Opera talks softly about user security

Opera talks about security features in Opera 9. The good parts - they have totally rewritten their protocol engine, and:

3. We have disabled SSL v2 and the 40 and 56 bit encryption methods supported by SSL and TLS.

The somewhat silly part, they've added more warnings for use of 40 and 56 bit ciphers, but forgotten to warn about that popular zero-bit cipher called HTTP.

The slow part - it must have been two years since we first started talking about it to get to now: SSLv2 in Opera 9 disabled, and TLS Extensions enabled. (SNI itself is not mentioned. I forget where the other suppliers are on this question.)

Why so long?

2. TLS 1.1 and TLS Extensions are now enabled by default. When we tested these features in Opera 7.60 TP in September 2004, our users found 100+ sites that would not work with either or both of these features. So why enable these features now, have we lost our minds?

The reason isn't that the problematic sites have disappeared. Unfortunately, they haven't. The reason we are activating these features now is that we have worked around the problems.

It makes me giddy, just thinking about those 100 sites ... So Opera backed off, just like the others. The reason that this is taking so long is that as far as your average browser goes, security is a second or third priority. Convenience is the priority. Opera's page, above, has lots of references to how they want to be fully standards compliant etc etc, which is good, but if you ask them about security, they'll mumble on about irrelevancies like bits in algorithms and needing to share information with other browsers before doing anything.

SSL v2, originally developed by Netscape, is more than 11 years old. It was replaced by SSL v3 (also developed by Netscape) in 1996. SSL v2 is known to have at least one major weakness. And considering the age of the servers that only supports SSL v2, one can certainly wonder how secure the servers themselves are. Some may wonder why disabling an outdated protocol is news. *Shouldn't these "features" have been removed long ago?*

I like standards as much as the next guy, and I use a browser that is proud of user experience. I even suggest that you use it for its incidental security benefits - see the Top Tips on the front page!

But when it comes to protecting people's online bank accounts, something has to break. Is it really more important to connect to a hundred or a thousand old sites than it is to protect users from security attacks from a thousand phishers or a hundred thousand attacks?

The fact is, as we found out when we tried to disable these methods during the 7.60 TP and 8.0 Beta testing, that despite both the new versions of the protocol and the SSL v2 protocol's security problems, there were actually major sites using it as their single available SSL version as recently as a year ago! It is actually only a few years since a major US financial institution upgraded their servers from SSL v2 to TLS 1.0. This meant that it was not practical from a usability perspective to disable the protocol.

Come on guys, don't pussyfoot around with user security! If you want to get serious, name names. Pick up the big stick and whack those sites. If you're not serious, then here's what is going to happen: government will move in and pass laws to make you liable. Your choice - get serious or get sued.

The connection between all this dancing around with TLS and end-user security is a bit too hard to see in simple terms. It is all to do with SNI - server name indication. This is a feature only available in TLS. As explained above, to use TLS, SSLv2 must die.

Once we get SNI, each Apache server can *share* TLS certificates for multiple sites over one single IP number. Right now, sharing TLS sites is clumsy and only suitable for the diehards at CAcert (like this site is set up, the certs stuff causes problems). This makes TLS a high-priced option - only for "e-commerce" not for the net masses. Putting each site over a separate IP# is just a waste of good sysadmin time and other resources, and someone has to pay for that.
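For the plumbers, here is a minimal sketch of what SNI buys the server operator, written in Python as a toy model rather than as real Apache configuration. The hostnames, file paths and the `select_certificate` helper are all hypothetical, invented for illustration. The point is only that the server gets to see the requested name before it has to choose a certificate, so one IP number can serve many sites.

```python
from typing import Dict, Optional

# Hypothetical table: hostname -> path of the certificate to present.
CERTS: Dict[str, str] = {
    "shop.example.com": "/etc/certs/shop.pem",
    "blog.example.com": "/etc/certs/blog.pem",
}
DEFAULT_CERT = "/etc/certs/default.pem"


def select_certificate(server_name: Optional[str],
                       certs: Dict[str, str] = CERTS,
                       default: str = DEFAULT_CERT) -> str:
    """Pick the certificate for the name the client asked for.

    server_name is None when the client sent no SNI extension
    (for example an SSLv2 hello, which has no extensions). In that
    case the server can only fall back to one certificate per IP.
    """
    if server_name is None:
        return default
    # Hostnames are case-insensitive, so normalise before the lookup.
    return certs.get(server_name.lower(), default)
```

Without the name in the hello, every call degenerates to the `default` branch, which is exactly the one-certificate-per-IP situation described above.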

(Do you think the commerciality of the equation might explain the laggardness of browser manufacturers here?)

With SNI, using TLS then has a goodly chance of becoming virtual - like virtual HTTP sites sometimes called VHosts. Once we start moving more web servers into TLS _by default_, we start to protect more and more ... and we shift the ground of phishing defence over to certificates. Which can defend against phishing but only if used properly (c.f. toolbars).

Yes, I know, there are too many steps in that strategy to make for easy reading. I know, we already lost the battle of phishing, and it has moved into the Microsoft OS as the battleground. I know, TLS is bypassed as a security system. And, I know, SSL was really only for people who could pay for it anyway.

Still, it would be nice, wouldn't it? To have many more sites using crypto by default? Because they can? Imagine if websites were like Skype - always protected? Imagine how much easier it would be to write software - never needing to worry about whether you are on the right page or not.

And if that's not convincing, consider this: what's happening in "other countries" is coming to you. Don't be the one to hold back ubiquitous, opportunistic crypto. You might be reading about RIP and massive datamining in the press today about other countries; in a decade or so you'll be reading about their unintended victims in your country, and you will know the part you played.

In other crypto news:

Microsoft buys SSL VPN vendor Whale

http://www.computerworld.com/action/article.do?command=viewArticleBasic&articleId=9000608

Government to force handover of encryption keys

http://news.zdnet.co.uk/0,39020330,39269746,00.htm

UK law will criminalise IT pros, say experts

http://news.zdnet.co.uk/business/legal/0,39020651,39270045,00.htm

May 22, 2006

It is no longer acceptable to be complex

Great things going on over at FreeBSD. Last month was the surprising news that Java was now distro'd in binary form. Heavens, we might actually see Java move from "write once, run twice" to numbers requiring more than 2 bits, in our lifetimes. (I haven't tried it yet. I've got other things to do.)

More serious is the ongoing soap opera of security. I mean over all platforms, in general. FreeBSD still screams in my rankings (sorry, unpublished, unless someone blogs the secret link again, darnit) as #2, a nose behind OpenBSD for the top dog spot in the race for hard core security. Here's the story.

Someone cunning (Colin?) noticed that a real problem existed in the FreeBSD world - nobody bothers to update, and that includes critical security patches. That's right. We all sit on our haunches and re-install or put it off for 6-12 months at a time. Why?

Well, why's a tricky word, but I have to hand it to the FreeBSD community - if there is one place where we can find out, that's where it is. Colin Percival, security czar and general good sort, decided to punch out a survey and ask the users why? Or, Why not? We haven't seen the results of the survey, but something already happened:

Polite, professional, thoughtful debate.

No, really! It's unheard of on an Internet security forum to see such reasoned, considered discussion. At least, I've never seen it before, I'm still gobsmacked, and searching for my politeness book, long lost under the 30 volume set of Internet Flames for Champions, and Trollers Almanacs going back 6 generations.

A couple of (other) things came out. The big message was that the upgrade process was either too unknown, too complex, too dangerous, or just too scary. So there's a big project for FreeBSD sitting right there - as if they need another. Actually this project has been underway for some time, it's what Colin has been working on, so to say this is unrecognised is to short change the good work done so far.

But this one's important. Another thing that delicately poked its nose above the waterline was the contrast between the professional sysadmin and the busy other guy. A lot of people are using FreeBSD who are not professional sysadmins. These people haven't time to explore the arcana of the latest tool's options. These people are impressed by Apple's upgrade process - a window pops up and asks if it's a good time, please, pretty please? These people not only manage a FreeBSD platform or 10, but they also negotiate contracts, drive buses, organise logistics, program big apps for big iron, solve disputes with unions and run recruiting camps. A.k.a., business people. And in their lunchbreaks, they tweak the FreeBSD platforms. Standing up, mouth full.

In short, they are gifted part-timers. Or, like me, trained in another lifetime. And we haven't the time.

So it is no longer - I suggest - acceptable for the process of upgrades and installs to be seriously technical. Simplification is called for. The product is now in too many places, too many skill sets and too many critical applications to demand a tame, trained sysadmin full time, right time.

Old hands will say - that's the product. It's built for the expert. Security comes at a cost.

Well, sort of - in this case, FreeBSD is hoisted on its own petard. Security comes at a risk-management cost. FreeBSD happens to give the best compromise for the security minded practitioner. I know I can install my machine, not do a darn thing for 6 months, and still be secure. That's so valuable, I won't even bother to install Linux, let alone look up the spelling of whatever thing the Microsoft circus are pushing this month. I install FreeBSD because I get the best security bang for buck: No necessary work, and all the apps I can use.

Which brings us to another thing that popped out of the discussion - every one of the people who commented was using risk management. Seriously! Everyone was calculating their risk of compromise versus work put in. There is no way you would see that elsewhere - where the stark choice is either "you get what you're given, you lucky lucky microsoft victim" all the way across to the more colourful but unprintable "you will be **&#$&# secure if you dare *@$*@^# utter the *#&$*#& OpenBSD install disk near your (*&@*@! machine in vain."

Not so on FreeBSD. Everyone installs, and takes on their risks. Then politely turns around and suggests how it would be nice to improve the upgrade process, so we can ... upgrade more frequently than those big anniversaries.

Spring is here - that means Pressed Flowers

Dave Birch looks for an explosion of disruptive innovation in currency ideas:

Once digital cash goes into circulation, then the marginal cost of trading (and, for that matter, creating) entirely new currencies (commodity currencies, community currencies, synthetic currencies and the like) will fall substantially. I see that as the second generation -- not digitising existing cash but creating new kinds of cash -- and the potentially disruptive innovation.

Here's one expression. It's Spring time, so switch to monochrom's blog for Johannes' impromptu interview in the field of currency reserves. There is an English and a German page of what they call their "Hippyesque Post-Hippie Approach To Changing the World."

I've written before about the evolution of the pressed flowers money so here's no more than a quick summary. The Viennese arts community took Sylvia Berndt's digital issuance of pressed flowers (physical and digital reserves) and attempted to create their own over the last year. In pressing the flowers, they combined their arts use of laminates to create the tokens, which in time burst into life as a fascinating experiment on their own.

A cultural group in Amstetten is repeating the seasonal cycle. This weekend, we collected bunches of wild flowers from the local nature walk, and then repaired to a private club. In there, we arranged the flowers on massive hard boards and pressed them. Their plan is to reconvene in Autumn to laminate - giving them a winter's supply of favour currency.

Normally, I'm well ahead of discussions in this field - but once people like artists start to issue, I fall behind - I can't predict or understand easily what they are doing. In other experiments, we've found the laminated pressed flowers can work as business cards and identity tokens. The unforgeable pressed flower is fascinating - not cheap in time, but monetarily inexpensive, and it compares in tantalising form to other tamper-resistant high tech devices such as RFIDs or smart cards. For small quantities, it adds something special.

It all reinforces one direction for us as a society that values our shared efforts - let the money go free! The large issue that we have now is that issuance of value is still a mysterious process, and more experiments are required. The small problem I have is that I'm out of flowers.

May 20, 2006

Indistinguishable from random...

(If you are not a cryptoplumber, the following words will be indistinguishable from random... that might be a good thing!)

When I and Zooko created the SDP1 layout (for "Secure Datagram Protocol #1") one of the requirements wasn't to avoid traffic analysis. So much so that we explicitly left all that out.

But, the times, they are a-changing. SDP1 is now in use in one app full-time, and 3 other apps are being coded up. So I have more experience in how to drive this process, and not a little idea about how to inform a re-design.

And the war news is bleak, we are getting beaten up in Eurasia. Our boys over there sure could use some help.

So how to avoid traffic analysis? A fundamental way is to be indistinguishable from random, as that reveals no information. Let's revisit this and see how far we can get.

One way is to make all packets around the same size, and same frequency. That's somewhat easy. Firstly, we expand out the (internal) Pad to include more mildly random data so that short packets are boosted to the average length. There's little we can do about long packets, except break them up, which really challenges the assumptions of datagrams and packet-independence.
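A minimal sketch of the padding idea, in Python. This is not the actual SDP1 Pad format: the 2-byte length prefix, the `TARGET_LEN` value and the function names are all assumptions made for illustration. The padding is applied to the plaintext before encryption, so the random fill ends up inside the ciphertext.

```python
import os
import struct

TARGET_LEN = 256  # assumed "average" packet length in bytes


def pad_plaintext(payload: bytes, target_len: int = TARGET_LEN) -> bytes:
    """Prefix the payload with its length, then fill with random bytes.

    Short packets are boosted to target_len. Long packets are left
    alone here, as breaking them up is a separate problem.
    The 2-byte prefix limits payloads to 65535 bytes in this sketch.
    """
    body = struct.pack(">H", len(payload)) + payload
    if len(body) >= target_len:
        return body
    return body + os.urandom(target_len - len(body))


def unpad_plaintext(padded: bytes) -> bytes:
    """Recover the payload by reading the length prefix and dropping the fill."""
    (length,) = struct.unpack(">H", padded[:2])
    return padded[2:2 + length]
```

With this, a two-byte "hi" and a 200-byte message both leave the machine as 256 bytes of plaintext going into the cipher, which is the property we are after.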

Also, we can statistically generate some chit chat to keep the packets ticking away ... although I'd personally hold short of the dramatically costly demands of the Freedom design, which asked you to devote 64 or 256k permanent on-traffic to its cause. (A laughable demand, until you investigate what Skype is doing, now, successfully, on your computer this very minute.)

But a harder issue is the outer packet layer as it goes over the wire. It has structure, so someone can track it and learn information from it. Can we get rid of the structure?

The open network layout consists of three parts - a token, a ciphertext and a MAC.

| Token | . . . enciphered . . . text . . . | SHA1-HMAC |

Each of these is an array, which itself consists of a leading length field followed by that many bytes.

| Length Field | . . . many . . . bytes . . . length . . . long . . . |
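To make the layout concrete, here is a rough Python sketch of three length-prefixed arrays in a row. The width and byte order of the length field are not stated in this post, so the 2-byte big-endian prefix below is purely an assumption, as are the function names.

```python
import struct
from typing import Tuple


def encode_array(data: bytes) -> bytes:
    """Leading length field (assumed 2 bytes, big-endian) then the bytes."""
    return struct.pack(">H", len(data)) + data


def decode_array(buf: bytes, offset: int = 0) -> Tuple[bytes, int]:
    """Read one array at offset; return its bytes and the next offset."""
    (length,) = struct.unpack_from(">H", buf, offset)
    start = offset + 2
    end = start + length
    if end > len(buf):
        raise ValueError("array runs past end of packet")
    return buf[start:end], end


def encode_packet(token: bytes, ciphertext: bytes, mac: bytes) -> bytes:
    """Token, ciphertext and MAC, each as its own array."""
    return b"".join(encode_array(part) for part in (token, ciphertext, mac))


def decode_packet(buf: bytes) -> Tuple[bytes, bytes, bytes]:
    """Split a packet back into its three arrays."""
    token, off = decode_array(buf)
    ciphertext, off = decode_array(buf, off)
    mac, off = decode_array(buf, off)
    if off != len(buf):
        raise ValueError("trailing bytes after MAC")
    return token, ciphertext, mac
```

Note how much structure an observer gets for free: three length fields at predictable offsets, plus a MAC of fixed size at the tail.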

(Also, in all extant systems, there is a leading byte 0x5D that says "this is an SDP1, and not something else." That is, the application provides a little wrapping because there are cases where non-crypto traffic has to pass.)

| 5D | Token | . . . enciphered . . . text . . . | SHA1-HMAC |

Those arrays were considered necessary back then - but today I'm not so sure. Here's the logic.

Firstly the token creates an identifier for a cryptographic context. The token indexes into the keys, so it can be decrypted. The reason that this may not be so necessary is that there is generally another token already available in the network layer - in particular the UDP port number. At least one application I have coded up found itself having to use a different port number (create a new socket) for every logical channel, not because of the crypto needs, but because of how NAT works to track the sender and receiver.

(This same logic applies to the 0x5D in use.)

Secondly, skip to the MAC. This is in the outer layer - primarily because there is a paper (M. Bellare and C. Namprempre, Authenticated Encryption: Relations among notions and analysis of the generic composition paradigm Asiacrypt 2000 LNCS 1976) that advises this. That is, SDP1 uses Encrypt-then-MAC mode.

But it turns out that this might have been an overly conservative choice. Earlier fears that MAC-then-Encrypt mode was insecure may have been overdone. That is, if due care is taken, then putting the MAC inside the cryptographic envelope could be strong. And thus eliminate the MAC as a hook to hang some traffic analysis on.

So let's assume that for now. We ditch the token, and we do MAC-then-encrypt. Which leaves us with the ciphertext. Now, because the datagram transport layer - again, UDP typically - will preserve the length of the content data, we do not need the array constructions that tell us how long the data is.

Now we have a clean encrypted text with no outer layer information. One furfie might have been that we would have to pass across the IV as is normally done in crypto protocols. But not in SDP1, as this is covered in the overall "context" - that session management that manages the keys structure also manages the IVs for each packet. By design, there is no IV passing needed.

Hey presto, we now have a clean encrypted datagram which is indistinguishable from random data.
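Here is a toy Python sketch of that stripped-down datagram, under loud assumptions. It is not SDP1 code. The keystream built from SHA-256 is only a stand-in for a real cipher (the Python standard library ships none) and should not be used in anger. The `packet_no` parameter stands in for whatever per-packet IV the session context supplies, and the sketch simply assumes both ends already agree on it. The function names are made up.

```python
import hashlib
import hmac

MAC_LEN = 20  # HMAC-SHA1 output size in bytes


def keystream(key: bytes, packet_no: int, length: int) -> bytes:
    """Toy keystream: SHA-256 over key, packet number and block counter."""
    out = bytearray()
    block = 0
    while len(out) < length:
        out += hashlib.sha256(
            key + packet_no.to_bytes(8, "big") + block.to_bytes(4, "big")
        ).digest()
        block += 1
    return bytes(out[:length])


def _tag(mac_key: bytes, packet_no: int, plaintext: bytes) -> bytes:
    """HMAC-SHA1 over the packet number and the plaintext."""
    return hmac.new(mac_key, packet_no.to_bytes(8, "big") + plaintext,
                    hashlib.sha1).digest()


def seal(enc_key: bytes, mac_key: bytes, packet_no: int,
         plaintext: bytes) -> bytes:
    """MAC-then-encrypt: the MAC goes inside the envelope.

    The result carries no token, no length fields and no visible MAC.
    """
    body = plaintext + _tag(mac_key, packet_no, plaintext)
    ks = keystream(enc_key, packet_no, len(body))
    return bytes(a ^ b for a, b in zip(body, ks))


def unseal(enc_key: bytes, mac_key: bytes, packet_no: int,
           datagram: bytes) -> bytes:
    """Decrypt, then check the MAC found at the tail of the plaintext."""
    if len(datagram) < MAC_LEN:
        raise ValueError("datagram too short")
    ks = keystream(enc_key, packet_no, len(datagram))
    body = bytes(a ^ b for a, b in zip(datagram, ks))
    plaintext, tag = body[:-MAC_LEN], body[-MAC_LEN:]
    if not hmac.compare_digest(tag, _tag(mac_key, packet_no, plaintext)):
        raise ValueError("bad MAC")
    return plaintext
```

The datagram length tells the receiver where the data ends, and the fixed `MAC_LEN` tells it where the MAC starts, so nothing but ciphertext goes over the wire. Whether that ciphertext really looks random depends entirely on the real cipher, and whether MAC-then-encrypt is safe depends on the "due care" mentioned above.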

Am I right? At the time, Zooko and I agreed it couldn't be done - but now I'm thinking we were overly cautious about the needs of encrypt-then-MAC, and the needs to identify each packet coming in.

(Hat tip to Todd for pushing me to put these thoughts down.)

May 19, 2006

When they cross the line...

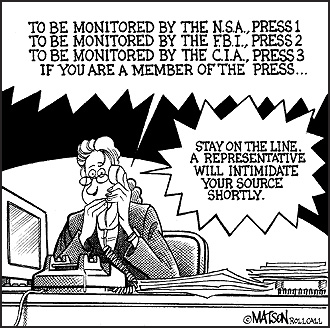

One way to tell when they cross the line is to watch the comics and jokes circuit. Here's another one:

Original source is RollCall.com, so tap their phones, not mine.

Seriously though, the reason this scandal has "legs" is because the Democrats' hands are clean. In previous scandals, it seems that the dirt was evenly spread across the political dipole. This time, the Dems have one they can get their teeth into and not look silly.

May 14, 2006

Markets in Imperfect Information - Lemons, Limes and Silver Bullets

Twan points to a nice Slate/FT article on the market for lemons:

In 1966 an assistant economics professor, George Akerlof, tried to explain why this is so in a working paper called "The Market for 'Lemons.'" His basic insight was simple: If somebody who has plenty of experience driving a particular car is keen to sell it to you, why should you be so keen to buy it? Akerlof showed that insight could have dramatic consequences. Buyers' perfectly sensible fears of being ripped off could, in principle, wipe out the entire used-car market: There would be no price that a rational seller would offer that was low enough to make the sale. The deeper the discount, the more the buyer would be sure that the car was a terrible lemon.

If you are unfamiliar with Akerlof's market for lemons, you should read that article in full, and then come back.

This whole area of lemons is sometimes called markets in asymmetric information - as the seller of the car has the information that you the buyer don't. Of course, asymmetries can go both ways, and sometimes you have the information whereas the other guy, the seller, does not. What's up with that?

Well, it means that you won't be able to get a good deal, either. This is the market in insurance, as described in the article, and also the market in taxation. These areas were covered by Mirrlees in 1970, and Rothschild & Stiglitz in 1976. For sake of differentiation, as sometimes these details matter, I call this the market for limes.

But there is one final space. What happens when neither party knows the good they are buying?

Our gut reaction might be that these markets can't exist, but Michael Spence says they do. His example was the market for education, specifically degrees. In his 1973 paper entitled "Job Market Signalling" he described how the market for education and jobs was stable in the presence of signals that had no bearing on what the nominal goal was. That is, if the market believed a degree in arts was necessary for a job, then that's what they looked for. Likewise, and he covers this, if the market believed that being male was needed for a job, then that belief was also stable - something that cuts right to the core of our beliefs, because such a belief is indeed generally irrelevant but stable, whether we like it or not.

This one I term the Market for Silver Bullets, a term of art in the computing field for a product that is believed to solve everything. I came to this conclusion after researching the market for security, and discovering that security is a good in Spence's space, not in Akerlof's nor Rothschild and Stiglitz's spaces. That is, security is not in the market for lemons nor limes - it's in the tasteless spot in the bottom right hand.

Yup, because it is economics, we must have a two by two diagram:

| The Market for Goods, as described by Information and by Party | Buyer Knows | Buyer Lacks |
|---|---|---|
| Seller Knows | Efficient Goods | Lemons (used cars) |
| Seller Lacks | Limes (Tax, Insurance) | Silver Bullets (Security) |

Michael Spence coined and explored the sense of signals as being proxies for the information that parties were seeking. In his model, a degree was a signal, that may or may not reveal something of use. But it's a signal because we all agree that we want it.

Unfortunately, many people - both economists and people outside the field - have conflated all these markets and thus been led down the garden path in their search for fruit. Spence's market in silver bullets is not the same thing as Akerlof's market in lemons. The former has signals, the latter does not. The latter has institutions, the former does not. To get the full picture here we need to actually do some hard work like read the original source papers mentioned above (Akerlof and Spence aren't so bad, but Rothschild & Stiglitz were tougher. I've not yet tried Mirrlees, and I got bogged down in Vickrey. All of these require a trip to the library, as they are well-pre-net papers.)

In particular, and I expand on this in a working draft paper, the bitter-sweet truth is that the market for security is a market for silver bullets. This has profound implications for security research. But for those, you'll have to read the paper :)

May 12, 2006

Money costs: a dollar, a penny, a system, an experience

John Kyle found some US coin and note costs. For the dollar:

Rep. Jack Metcalf then began questioning Allison about these issues in more detail. Allison explained that when the United States Mint produces a dollar coin, it spends 8 cents on production costs and issues the coin into circulation at face value (100 cents), depositing the coin in the Federal Reserve for 100 cents. The 92 cents difference is seignorage, essentially profit. In the case of a dollar bill, on the other hand, the cost of producing the bill is 4 cents, and the Federal Reserve issues the bill into circulation at face value, investing the 96 cents difference in U.S. Government bonds. The interest the Federal Reserve receives goes to Federal Reserve expenses (about $2 billion), retained earnings (a few hundred million), dividends to member banks (another few hundred million); the rest goes back to the Treasury. Metcalf noted, "It seems like an arcane system that could have been invented only by somebody who was mentally deranged."

And for the penny:

The Mint estimates it will cost 1.23 cents per penny and 5.73 cents per nickel this fiscal year, which ends Sept. 30. The cost of producing a penny has risen 27% in the last year, while nickel manufacturing costs have risen 19%. ... But consumers should not hoard coins or melt down the change in their kids' piggy banks, says Michael Helmar, an economist and metals analyst at Moody's Economy.com. He says the process of melting the coins, separating out the metals, then selling would be costly and time-consuming. "If they were made out of gold, sure," he says. But "there are just too many other costs."
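To put the two sets of quoted figures side by side, here is a minimal sketch of the per-unit arithmetic. The numbers are the ones quoted above; the names `UNITS` and `margin_cents` are made up for illustration. Note that only the coin's margin is seigniorage in the sense Allison describes. For the bill, the difference is what the Federal Reserve invests in bonds.

```python
from decimal import Decimal

# (face value, unit production cost), both in US cents, as quoted above.
UNITS = {
    "dollar coin": (Decimal("100"), Decimal("8")),
    "dollar bill": (Decimal("100"), Decimal("4")),
    "nickel": (Decimal("5"), Decimal("5.73")),
    "penny": (Decimal("1"), Decimal("1.23")),
}


def margin_cents(face, cost):
    """Face value minus production cost, in cents; negative means a loss per unit."""
    return face - cost


for name, (face, cost) in UNITS.items():
    print(f"{name:12s} {margin_cents(face, cost):>6} cents per unit")
```

On these figures the dollar coin and bill clear 92 and 96 cents each, while the nickel and penny lose 0.73 and 0.23 cents each.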

OK! It's nice to know it is ok to hoard and smelt the gold coins. For those interested in the cost of payment systems, here's a list of the things that the business plan needs to consider. All standard fodder for the FCer. Over at Oyster, they seemed not to have paid attention to the basic recipe:

Back in 2005, Transport for London (TfL) announced it had shortlisted seven potential suppliers to transform Oyster from a ticketing system into a means of paying for goods such as coffee and newspapers. Trials were scheduled to start before the end of the year but didn't materialise. And, at the end of April, TfL announced that none of the shortlisted suppliers had been able to meet their criteria and the rollout had been put on hold for the time being. ... So what went wrong? It seems that the technology behind the scheme was not at fault. Dave Birch, director of consultancy Consult Hyperion and organiser of the Digital Money Forum, told silicon.com: "What's become clear is that it's more complicated to sort out commercial arrangements than to sort out the technical arrangements [with e-money]." It appears that issues with the payment processing side of the project - division of revenues and payment processing costs, for example - were the main reason the e-money scheme was hobbled before it left the starting gates. The question of who would pay for the cost of deploying the necessary infrastructure was a sticking point. For example, without financial support from the banks, retailers were unlikely to agree to cover the equipment costs themselves.

That would be numbers 1, 2, 4, 5, 6, in Win Derman's list. I often characterise the question this way: it costs order of a million to build a software payment system, and order of 100 million to build a hardware token payment system. The difference is the tokens, and who you get to pay for them...

Which leaves us wondering why these lessons aren't learnt? Win Derman says "In looking back over the last 30 years, there's no question the industry has witnessed (and still is witnessing) tremendous change. But despite the disruption, the same fundamentals still apply. There are 13 questions I've learned to ask about any new payment technology to evaluate its potential for success in this space."

Maybe experience is priceless?

1. Are you solving a problem that needs changes at the point of transaction? Then build a 5-7 year implementation schedule because of terminal replacement cycle.

2. Are you solving a problem that has limited geographic applicability (for example, the 1980s chip card in France because of the poor telephone network versus the magnetic stripe in the zero floor limit environment of the United States)? Either you will be tied up in politics or limited in scope to a subset of the card world.

3. Timing is everything. We worked for 30 years to grow the Visa credit card business into a trillion dollar business. The Visa debit card grew to that level in less than 10 years because the network infrastructure already existed, the name Visa was already well known, and the political battle between the credit card and debit card groups within the banks was over.

4. If you are selling a proprietary product or service and hope to extract a royalty on every transaction, you will be trying to push a huge rock up a large hill in an environment where even competitors need to share technology because banks and merchants want standardized services - not proprietary implementations.

5. If your product or service needs approval from more than one industry group (such as airlines and banks), plan on a very long and complicated negotiation to achieve consensus.

6. If you expect to change consumer behavior to implement your product or service, remember issuers will be very reluctant to risk making significant changes that could damage a $4-5 trillion business.

7. If your product or service is aimed at fraud reduction, remember that eliminating 100% of fraud - which is highly unlikely - only changes the issuers' bottom line by a fraction of 1%. That doesn't leave much room if implementation or operational costs are high.

8. Technology by itself without a surrounding set of business rules, prices, and procedures that make economic sense will not fly.

9. Any product or service that does not show significant results within 12 to 18 months will take a long time to get anyone's attention.

10. After you develop a timeline to get your product or service sold, double it.

11. Who is your champion in the target organization? (We tried to have the credit card departments where Visa had its contacts introduce the Visa debit card to the banks. We made very limited headway for almost 30 years until credit card people became senior managers in the banks. Then implementation went very quickly.)

12. If your plan is to introduce your product or service in competition with the existing card products, remember that you either must spend a great deal of money to get a piece of the business (like Discover did) or you will be vulnerable to the big guys overwhelming you during your buildup phase as they easily could have done with PayPal - if they had focused on that market niche earlier.

13. If your target market is the bankcard business, the good news is that it is a $4 trillion dollar business and growing. The bad news is that it is a $4 trillion dollar business and growing. There is a potential for a big payoff but it is hard to turn the business in a new direction - kind of like trying to turn the Exxon Valdez in Prudhoe Bay.

May 10, 2006

JIBC April 2006 - "Security Revisionism"

The April edition of J. Internet Banking and Commerce is out and includes an essay on "Security Revisionism" that addresses outstanding questions from an economics pov:

Security isn't working, and some of us are turning to economics to address why this is so. Agency theory casts light on cases such as Choicepoint and Lopez. An economics approach also sheds light on what security really is, and social scientists may be able to help us build it. Institutional economics suggests that the very lack of information may lead to results that only appear to speak of security.

Other than my opinions (and others), here's the full list:

General and Review Articles

- India: Data Protection in Consumer E-Banking (By: Ankur Gupta, National Law Institute University)

- USA: A Security Concern in MS-Windows: Stealing User Information From Internet Browsers Using Faked Windows (By: Lior Shamir, Michigan Tech University)

- India: The Indian Information Technology Act and Spamming (By: Rahul Goel, Advocate)

- UK: Security Revisionism (By: Ian Grigg, Financial Cryptographer)

Research Papers

- France: Technological and organizational preconditions to Internet Banking implementation: Case of a Tunisian bank (By: Achraf AYADI, Researcher/Institut National des Télécommunications d'Evry)

- Nigeria: Regulating Internet Banking In Nigeria: Some Success Prescriptions -- Part 2 (By: Abel Ebeh Ezeoha, Ebonyi State University)

- India: Effective method of security measures in Virtual banking (By: S.Arumuga perumal, S.T.Hindu College)

- India: Alternate Architecture for Domain Name System to foil Distributed Denial of Service Attack (By: P.Yogesh and A.Kannan, Anna University)

- USA: Challenges and opportunities of silent commerce - applying Radio Frequency Identification technology (By: Teuta Cata, Northern Kentucky University)

- USA: Internet Support Companies: The Impact of Marketing Orientation (By: Jose Gonzalez, Nova Southeastern University, and Larry Chiagouris, Pace University)

- USA: A Taxonomy of Metrics for Hosted Databases (By: Jordan Shropshire, Mississippi State University)

May 07, 2006

Reliable Connections Are Not

Someone blogged a draft essay of mine on the limits of reliability in connections. As it is now wafting through the blog world, I need to take remedial action and publish it. It's more or less there, but I reserve the right to tune it some :)

When is a reliable connection not a reliable connection? The answer is - when you really truly need it to be reliable. Connections provided by standard software are reliable up to a point. If you don't care, they are reliable enough. If you do care, they aren't as reliable as you want.

In testing the reliability of the connection, we all refer to TCP/IP's contract and how it uses the theory of networking to guarantee the delivery. As we shall see, this is a fallacy of extension and for reliable applications - let's call that reliability engineering - the guarantee is not adequate.

And, it specifically is not adequate for financial cryptography. For this reason, most reliable systems are written to use datagrams, in one sense or another. Capabilities systems are generally done this way, and all cash systems are done this way, eventually. Oddly enough, HTTP was designed with datagrams, and one of the great design flaws in later implementations was the persistent mashing of the clean request-response paradigm of datagrams over connections.

To summarise the case for connections being reliable and guaranteed, recall that TCP has resends and checksums. It has a window that monitors the packet reception, orders them all and delivers a stream guaranteed to be ordered correctly, with no repeats, and what you got is what was sent.

Sounds pretty good. Unfortunately, we cannot rely on it. Let's document the reasons why the promise falls short. Whether this affects you in practice depends, as we started out, on whether you really truly need reliability.
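As a rough illustration of the datagram style mentioned above, here is a minimal sketch of request-response with resends and server-side de-duplication. This is not the design from the essay. The names (`LossyChannel`, `Server`, `send_reliably`) and the fixed drop pattern are assumptions made for the example, and the "network" is simulated in-process so that the behaviour is repeatable.

```python
import itertools


class LossyChannel:
    """Simulated one-way link that drops messages on a fixed, repeating pattern."""

    def __init__(self, drop_pattern):
        self._drops = itertools.cycle(drop_pattern)

    def deliver(self, message):
        # Returns None when the message is "lost" in transit.
        return None if next(self._drops) else message


class Server:
    """Applies each request at most once, keyed by a client-chosen request id."""

    def __init__(self):
        self.balance = 0
        self._replies = {}  # request id -> reply already issued

    def handle(self, request):
        req_id, amount = request
        if req_id not in self._replies:
            self.balance += amount
            self._replies[req_id] = ("ok", req_id, self.balance)
        # A resend of a request already seen gets the original reply back.
        return self._replies[req_id]


def send_reliably(server, to_server, to_client, request, max_tries=10):
    """Resend the same request until a reply comes back, or give up."""
    for attempt in range(1, max_tries + 1):
        arrived = to_server.deliver(request)
        if arrived is None:
            continue  # request lost on the way out
        reply = to_client.deliver(server.handle(arrived))
        if reply is not None:
            return reply, attempt
    raise TimeoutError(f"no reply after {max_tries} tries")


server = Server()
to_server = LossyChannel([True, False])  # every other request is dropped
to_client = LossyChannel([True, False])  # every other reply is dropped
reply, attempts = send_reliably(server, to_server, to_client, ("req-1", 50))
print(reply, attempts, server.balance)
```

Here the request reaches the server twice, because the first reply is lost, yet the balance changes only once. The client knows the outcome because it holds a reply, not because a connection told it that bytes were delivered.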

May 06, 2006

Petrol firm suspends chip-and-pin

Lynn points to BBC on: Petrol giant Shell has suspended chip-and-pin payments in 600 UK petrol stations after more than £1m was siphoned out of customers' accounts. Eight people, including one from Guildford, Surrey and another from Portsmouth, Hants, have been arrested in connection with the fraud inquiry.

The Association of Payment Clearing Services (Apacs) said the fraud related to just one petrol chain. Shell said it hoped to restore the chip-and-pin service before Monday.

"These Pin pads are supposed to be tamper resistant, they are supposed to shut down, so that has obviously failed," said Apacs spokeswoman Sandra Quinn. Shell has nearly 1,000 outlets in the UK, 400 of which are run by franchisees who will continue to use chip-and-pin.

A Shell spokeswoman said: "We have temporarily suspended chip and pin availability in our UK company-owned service stations. This is a precautionary measure to protect the security of our customers' transactions. You can still pay for your fuel, goods or services with your card by swipe and signature. We will reintroduce chip and pin as soon as it is possible, following consultation with the terminal manufacturer, card companies and the relevant authorities."

BP is also looking into card fraud at petrol stations in Worcestershire but it is not known if this is connected to chip-and-pin.

And immediately followed by more details in this article: Customers across the country have had their credit and debit card details copied by fraudsters, and then money withdrawn from their accounts. More than £1 million has been siphoned off by the fraudsters, and an investigation by the Metropolitan Police's Cheque and Plastic Crime Unit is under way.

The association's spokeswoman Sandra Quinn said: "They have used an old style skimming device. They are skimming the card, copying the magnetic details - there is no new fraud here. They have managed to tamper with the pin pads. These pads are supposed to be tamper resistant, they are supposed to shut down, so that has obviously failed."

Ms Quinn said the fraud related to one petrol chain: "This is a specific issue for Shell and their supplier to sort out. We are confident that this is not a systemic issue."

Such issues have been discussed before.

May 05, 2006

Security Soap Opera - (Central) banks don't (want to) know, MS prefers Brand X, airlines selling your identity, first transaction trojan

Journalist Roger Grimes did some research on trojans and came up with this:

Even more disturbing is that most banks and regulatory officials don't understand the new threat, and when presented with it, hesitate to offer anything but the same old advice. Every bank and regulatory official contacted for this article said they have already recommended banks implement a two-factor or multifactor log-on authentication screen. In general, they expressed frustration at the amount of effort it has taken to get banks to follow that advice. And all complained about the trouble these schemes are causing legitimate customers.

When told how SSL-evading Trojans can bypass any authentication mechanism, most offered up additional ineffective authentication as a solution. When convinced by additional discussion that the problem could be solved only by fixing transactional authorization, most shrugged their shoulders and said they would remain under pressure to continue implementing authentication-only solutions.

They were also hesitant to broach the subject with senior management. It had taken so long to get banks to agree to two-factor authentication, they said, it would be almost impossible to change recommendations midstream. That puts the banking industry on a collision course with escalating attacks.

On the nail. (Sorry, Dave!)

Microsoft has apparently expressed a preference of smart cards over two-factor tokens:

More interesting is Microsoft's long-term view of two-factor authentication. In contrast to companies such as E*Trade, AOL, and VeriSign which have either announced support for or are already supporting one-time password + security token combinations for their customers, Microsoft sees things moving in a different direction, according to a spokesperson. Most customers told Microsoft they do not view one-time passwords as strategic and are looking long term to smart cards as their preferred strong authentication mechanism.

In any soap opera, there appear advert breaks where the housewife is offered the choice of bland brand A versus bland brand B. "Most housewives we surveyed chose Brand X soap powder." Maybe Microsoft's heart is in the right place, though:

Last week, Microsoft pledged to bring about 100 legal actions against phishers in Europe, the Middle East and Africa (EMEA) over the next few months.

That's smart. Given their risk exposure, they'd better have something good to bring to the negotiating table, especially given their extensive experience in prosecuting evil software copiers and less extensive success in stopping spam. To take a leaf out of Chandler's book, with fraud you are either fighting them or you are supporting them. (hmmm, spoke too soon.)

Adam finds an article on how Adam Laurie and Steve Boggan "hacked" the airline ticket tracking systems to extract the full identity of a flyer. Skipping the details on the hack itself (did buying the ticket establish their credentials?) the piece is more relevant for its revelations of just how much data is being put together for erstwhile tracking purposes.

We logged on to the BA website, bought a ticket in Broer's name and then, using the frequent flyer number on his boarding pass stub, without typing in a password, were given full access to all his personal details - including his passport number, the date it expired, his nationality (he is Dutch, living in the UK) and his date of birth. The system even allowed us to change the information. Using this information and surfing publicly available databases, we were able - within 15 minutes - to find out where Broer lived, who lived there with him, where he worked, which universities he had attended and even how much his house was worth when he bought it two years ago.

The article talks about how the Europeans handed over the data to the Americans in apparent breach of their own privacy rules. Things like what meals you ordered, what sort of hotel room. Today you're a terrorist for ordering the ethnic meal, and tomorrow you run the same risk if you swap hotels and your hotel chain doesn't approve. Think that's extreme? Look how the information creep has started:

"They want to extend the advance passenger information system [APIS] to include data on where passengers are going and where they are staying because of concerns over plagues," he says. "For example, if bird flu breaks out, they want to know where all the foreign travellers are."

That's nothing more than an excuse by the system operators to extract more information. Of course, your hotel will be then required to provide up to date information as to where you moved next.

A data point - perhaps the first transaction trojan. FTR:

Transaction-based SSL-evading Trojans are the most dangerous and sophisticated. They wait until the user has successfully authenticated at the bank's Web site, eliminating the need to bypass or capture authentication information. The Trojan then manipulates the underlying transaction, so that what the user thinks is happening is different from what's actually transpiring on the site's servers. The Win32.Grams E-gold Trojan, spawned in November 2004, is a prime example of transaction-based type. When the user successfully authenticates, the Trojan opens a hidden browser window, reads the user's account balance, and creates another hidden window that initiates a secret transfer. The user's account balance, minus a small amount (to bypass any automatic warnings), is then sent to a predefined payee.

Many SSL-evading Trojans are "one-offs," meaning that they are encrypted or packaged so that each Trojan is unique -- defeating signature-style detection by anti-virus software.

Ultimately, SSL-evading Trojans can be defeated only when users stop running untrusted code -- or better still, when banks deploy back-end defensive mechanisms that move beyond mere authentication protection.

Sorry about the "blame the user" trick at the end. When will they ever learn?
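The difference between authenticating a log-on and authorising a transaction can be sketched in a few lines. This is an illustration only, not a mechanism described in the article. It assumes a key shared between the bank and some device the trojan cannot reach, and the function names, key, payee names and nonce are all hypothetical.

```python
import hashlib
import hmac
import json


def transaction_tag(key: bytes, payee: str, amount_cents: int, nonce: str) -> str:
    """MAC over the transaction details themselves, not over the log-on session."""
    # JSON-encode the fields as a list so that field boundaries are unambiguous.
    message = json.dumps([payee, amount_cents, nonce]).encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def bank_accepts(key: bytes, payee: str, amount_cents: int, nonce: str, tag: str) -> bool:
    """Bank side: recompute the tag over what it actually received and compare."""
    expected = transaction_tag(key, payee, amount_cents, nonce)
    return hmac.compare_digest(expected, tag)


# Hypothetical values: the key lives on a separate device and at the bank.
key = b"shared-secret-held-by-device-and-bank"
nonce = "txn-0001"

# The user approves "pay landlord 500.00" on the separate device.
tag = transaction_tag(key, "landlord", 50000, nonce)

print(bank_accepts(key, "landlord", 50000, nonce, tag))  # the transfer as approved
print(bank_accepts(key, "attacker", 50000, nonce, tag))  # payee swapped in the browser
```

The first check passes and the second fails, because the tag binds the payee and amount. A log-on credential alone, however strong, says nothing about which transfer the user meant to make.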

May 01, 2006

Fido reads your mind

Fido is a maths puzzle (needs Flash, hopefully doesn't infect your machine) that seems counter-intuitive... Any mathematicians in the house? My sister wants to know...

In terms of presence, the site itself seems to be a web presence company that works with music media. Giving away fun things like that seems to work well - I wouldn't have looked further if they had pumped their brand excessively.