March 29, 2010

Pushing the CA into taking responsibility for the MITM

This ArsTechnica article explores what happens when a CA-supplied certificate is used to mount an MITM on an SSL connection that protects online banking or similar. In the secure-lite model that emerged after the real-estate wars of the mid-1990s, consumers were told to click on their tiny padlock to check the cert:

Now, a careful observer might be able to detect this. Amazon's certificate, for example, should be issued by VeriSign. If it suddenly changed to be signed by Etisalat (the UAE's national phone company), this could be noticed by someone clicking the padlock to view the detailed certificate information. But few people do this in practice, and even fewer people know who should be issuing the certificates for a given organization.

Right, so where does this go? Well, people don't notice because they can't. Put the CA on the chrome and people will notice. What then?

A switch in CA is a very significant event. Jane Public might not be able to do anything about it, but if a customer of Verisign's was MITM'd by a cert from Etisalat, this is something that affects Verisign. We might reasonably expect Verisign to be interested in that. As it affects the chrome, and as customers might get annoyed, we might even expect Verisign to treat this as an attack on their good reputation.

And that's why putting the brand of the CA onto the chrome is so important: it's the only real way to bring pressure to bear on a CA to get it to lift its game. Security, reputation, sales. These things are all on the line when there is a handle to grasp by the public.

When the public has no handle on what is going on, the deal falls back into the shadows. No security there; in the shadows we find audit, contracts, outsourcing. Got a problem? Shrug. It doesn't affect our sales.

So, what happens when a CA MITM's its own customer?

Even this is limited; if VeriSign issued the original certificate as well as the compelled certificate, no one would be any the wiser. The researchers have devised a Firefox plug-in that should be released shortly that will attempt to detect particularly unusual situations (such as a US-based company with a China-issued certificate), but this is far from sufficient.

Arguably, this is not an MITM, because the CA is the authority (not the subscriber) ... but, exotic legal arguments aside, we clearly don't want it. When it goes on, what we do need is software like whitelisting, like Conspiracy and like the other ideas floating around to catch it.

And, we need the CA-on-the-chrome idea so that the responsibility aspect is established. CAs shouldn't be able to MITM other CAs. If we can establish that, with teeth, then the CA-against-itself case is far easier to deal with.

March 24, 2010

Why the browsers must change their old SSL security (?) model

In the paper Certified Lies: Detecting and Defeating Government Interception Attacks Against SSL, by Christopher Soghoian and Sid Stamm, there is a reasonably good layout of the problem that browsers face in delivering their "one-model-suits-all" security model. It is more or less what we've understood all these years, in that by accepting an entire root list of 100s of CAs, there is no barrier to any one of them going a little rogue.

Of course, it is easy to raise the hypothetical of the rogue CA, and even to show compelling evidence of business models (they cover much the same claims with a CA that also works in the lawful intercept business that was covered here in FC many years ago). Beyond theoretical or probable evidence, it seems the authors have stumbled on some evidence that it is happening:

The company’s CEO, Victor Oppelman confirmed, in a conversation with the author at the company’s booth, the claims made in their marketing materials: That government customers have compelled CAs into issuing certificates for use in surveillance operations. While Mr Oppelman would not reveal which governments have purchased the 5-series device, he did confirm that it has been sold both domestically and to foreign customers.

(my emphasis.) This has been a lurking problem underlying all CAs since the beginning. The flip side of the trusted-third-party concept ("TTP") is the centralised-vulnerability-party or "CVP". That is, you may have been told you "trust" your TTP, but in reality, you are totally vulnerable to it. E.g., from the famous Blackberry "official spyware" case:

Nevertheless, hundreds of millions of people around the world, most of whom have never heard of Etisalat, unknowingly depend upon a company that has intentionally delivered spyware to its own paying customers, to protect their own communications security.

Which becomes worse when the browsers insist, not without good reason, that the root list is hidden from the consumer. The problem that occurs here is that the compelled CA problem multiplies to the square of the number of roots: if a CA in (say) Ecuador is compelled to deliver a rogue cert, then that can be used against a CA in Korea, and indeed all the other CAs. A brief examination of the ways in which CAs work, and browsers interact with CAs, leads one to the unfortunate conclusion that nobody in the CAs, and nobody in the browsers, can do a darn thing about it.

So it then falls to a question of statistics: at what point do we believe that there are so many CAs in there, that the chance of getting away with a little interception is too enticing? Square law says that the chances are, say, 100 CAs squared, or 10,000 times the chance of any one intercept. As we've reached that number of CAs, this indicates that resistance to the temptation to intercept holds for all except 0.01% of circumstances. OK, pretty scratchy maths, but it does indicate that the risk is a small but not infinitesimal number. A risk exists, in words, and in numbers.
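The scratchy maths above can be written out as a few lines of arithmetic. This is only a sketch of the post's own back-of-envelope figures; the function name `impersonation_pairs` and the refinement of excluding a CA paired with itself are illustrative assumptions, not something the paper or the post states.

```python
def impersonation_pairs(num_cas: int) -> int:
    """Ordered (rogue CA, victim CA) pairs, assuming any root can issue
    a cert for any other root's customer. Excluding self-pairs is an
    illustrative refinement, not part of the post's rough figure."""
    return num_cas * (num_cas - 1)


n = 100                        # the post's round number of roots
square = n * n                 # the "square law" figure used in the post
print(square)                  # 10000
print(impersonation_pairs(n))  # 9900
print(f"{1 / square:.2%}")     # 0.01%
```

Either way of counting lands on the same order of magnitude, which is all the argument needs.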

One CA can hide amongst the crowd, but there is a little bit of a fix to open up that crowd. This fix is to simply show the user the CA brand, to put faces on the crowd. Think of the above, and while it doesn't solve the underlying weakness of the CVP, it does mean that the mathematics of squared vulnerability collapses. Once a user sees their CA has changed, or has a chance of seeing it, hiding amongst the crowd of CAs is no longer as easy.

Why then do browsers resist this fix? There is one good reason, which is that consumers really don't care and don't want to care. In more particular terms, they do not want to be bothered by security models, and the security displays in the past have never worked out. Gerv puts it this way in comments:

Security UI comes at a cost - a cost in complexity of UI and of message, and in potential user confusion. We should only present users with UI which enables them to make meaningful decisions based on information they have.

Consumers love Skype, which gives them everything they need without asking them anything. That should be reasonable enough motive to follow those lessons, but the context is different. Skype is in the chat & voice market, and the security model it has chosen is well-excessive to needs there. Browsing on the other hand is in the credit-card shopping and Internet online banking market, and the security model imposed by the mid 1990s evolution of uncontrollable forces has now broken before the onslaught of phishing & friends.

In other words, for browsing, the writing is on the wall. Why then don't they move? In a perceptive footnote, the authors also ponder this conundrum:

3. The browser vendors wield considerable theoretical power over each CA. Any CA no longer trusted by the major browsers will have an impossible time attracting or retaining clients, as visitors to those clients’ websites will be greeted by a scary browser warning each time they attempt to establish a secure connection. Nevertheless, the browser vendors appear loathe to actually drop CAs that engage in inappropriate behavior — a rather lengthy list of bad CA practices that have not resulted in the CAs being dropped by one browser vendor can be seen in [6].

I have observed this for a long time now, predicting phishing until it became the flood of fraud. The answer is, to my mind, a complicated one which I can only paraphrase.

For Mozilla, the reason is simple lack of security capability at the *architectural* and *governance* levels. Indeed, it should be noticed that this lack of capability is their policy, as they deliberately and explicitly outsource big security questions to others (known as the "standards groups" such as IETF's RFC committees). As they have little of the capability, they aren't in a good position to use the power, no matter whether they would want to or not. So, it only needs a mildly argumentative approach on the behalf of the others, and Mozilla is restrained from its apparent power.

What then of Microsoft? Well, they certainly have the capability, but they have other fish to fry. They aren't fussed about the power because it doesn't bring them anything of use. As a corporation, they are strictly interested in shareholders' profits (by law and by custom), and as nobody can show them a bottom line improvement from CA & cert business, no interest is generated. And without that interest, it is practically impossible to get the many groups within Microsoft to move.

Unlike Mozilla, my view of Microsoft is much more "external", based on many observations that have never been confirmed internally. However it seems to fit; all of their security work has been directed to market interests. Hence for example their work in identity & authentication (.net, infocard, etc) was all directed at creating the platform for capturing the future market.

What is odd is that all CAs agree that they want their logo on their browser real estate. Big and small. So one would think that there was a unified approach to this, and it would eventually win the day; the browser wins for advancing security, the CAs win because their brand investments now make sense. The consumer wins for both reasons. Indeed, early recommendations from the CABForum, a closed group of CAs and browsers, had these fixes in there.

But these ideas keep running up against resistance, and none of the resistance makes any sense. And that is probably the best way to think of it: the browsers don't have a logical model for where to go for security, so anything leaps the bar when the level is set to zero.

Which all leads to a new group of people trying to solve the problem. The authors present their model as this:

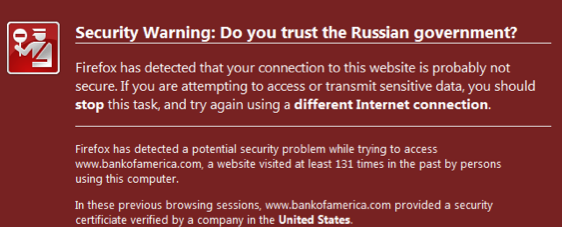

The Firefox browser already retains history data for all visited websites. We have simply modified the browser to cause it to retain slightly more information. Thus, for each new SSL protected website that the user visits, a Certlock enabled browser also caches the following additional certificate information:

A hash of the certificate.

The country of the issuing CA.

The name of the CA.

The country of the website.

The name of the website.

The entire chain of trust up to the root CA.

When a user re-visits a SSL protected website, Certlock first calculates the hash of the site’s certificate and compares it to the stored hash from previous visits. If it hasn’t changed, the page is loaded without warning. If the certificate has changed, the CAs that issued the old and new certificates are compared. If the CAs are the same, or from the same country, the page is loaded without any warning. If, on the other hand, the CAs’ countries differ, then the user will see a warning (See Figure 3).
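The decision rule quoted above is small enough to sketch in code. What follows is a rough illustration in Python, not the authors' Firefox implementation: the names `CertRecord`, `fingerprint` and `check_site` are hypothetical, SHA-256 is an assumed choice (the quote only says "a hash"), the cached chain of trust is left out for brevity, and updating the cache after a silently accepted change is also an assumption.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class CertRecord:
    """Subset of the per-site data the quote says is cached (hypothetical type)."""
    cert_hash: str
    ca_name: str
    ca_country: str


def fingerprint(cert_der: bytes) -> str:
    # Assumption: SHA-256 over the raw certificate bytes.
    return hashlib.sha256(cert_der).hexdigest()


def check_site(cache: Dict[str, CertRecord], host: str, cert_der: bytes,
               ca_name: str, ca_country: str) -> str:
    """Return 'first-visit', 'ok' or 'warn', following the quoted rules."""
    seen = CertRecord(fingerprint(cert_der), ca_name, ca_country)
    previous = cache.get(host)
    if previous is None:
        cache[host] = seen          # nothing to compare against yet
        return "first-visit"
    if previous.cert_hash == seen.cert_hash:
        return "ok"                 # same certificate as last time
    if previous.ca_name == seen.ca_name or previous.ca_country == seen.ca_country:
        cache[host] = seen          # assumption: remember the accepted cert
        return "ok"                 # same CA, or a CA from the same country
    return "warn"                   # issuing CA's country has changed


cache: Dict[str, CertRecord] = {}
print(check_site(cache, "shop.example", b"cert-A", "VeriSign", "US"))  # first-visit
print(check_site(cache, "shop.example", b"cert-C", "Etisalat", "AE"))  # warn
```

Note what the sketch makes plain: a new certificate from a different CA in the same country passes silently, so the check is keyed on the country rather than on the CA itself.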

This isn't new. The authors credit recent work, but no further back than a year or two. Which I find sad because the important work done by TrustBar and Petnames is pretty much forgotten.

But it is encouraging that the security models are battling it out, because it gets people thinking, and challenging their assumptions. Only code that is actually produced, and market share that is actually won, is likely to improve security for users. So while we can criticise the country approach (it assumes a sort of magical touch of law within the countries concerned that is already assumed not to exist, by dint of us being here in the first place), the country "proxy" is much better than nothing, and it gets us closer to the real information: the CA.

From a market for security pov, it is an interesting period. The first attempts around 2004-2006 in this area failed. This time, the resurgence seems to have a little more steam, and possibly now is a better time. In 2004-2006 the threat was seen as more or less theoretical by the hoi polloi. Now however we've got governments interested, consumers sick of it, and the entire military-industrial complex obsessed with it (both in participating and fighting). So perhaps the newcomers can ride this wave of FUD in, where previous attempts drowned far from the shore.

March 15, 2010

Ernst & Young staring down the barrel that shot Arthur Andersen

The report on Lehman Brothers' collapse is out, and it apparently includes a smoking gun against the auditor. Prison Planet alleges that "The firm’s auditor, Ernst & Young, one of the four biggest auditing firms in the world, failed in its oversight role:"

In May 2008, a Lehman Senior Vice President, Matthew Lee, wrote a letter to management alleging accounting improprieties; in the course of investigating the allegations, Ernst & Young was advised by Lee on June 12, 2008 that Lehman used $50 billion of Repo 105 transactions to temporarily move assets off balance sheet and quarter end.

The next day -- on June 13, 2008 -- Ernst & Young met with the Lehman Board Audit Committee but did not advise it about Lee’s assertions, despite an express direction from the Committee to advise on all allegations raised by Lee.

Ernst & Young took virtually no action to investigate the Repo 105 allegations. Ernst & Young took no steps to question or challenge the non-disclosure by Lehman of its use of $50 billion of temporary, off-balance sheet transactions.

Colorable claims exist that Ernst & Young did not meet professional standards, both in investigating Lee’s allegations and in connection with its audit and review of Lehman’s financial statements.

Now, I haven't read it in depth. But, on first blush, that looks directly analogous to the conditions that wiped out Arthur Andersen. (Agreement on Business Insider.)

The Audit industry will now feel the old Chinese curse: to live in interesting times...