February 10, 2010

EV's green cert is breached (of course) (SNAFU)

Reading up on something or other (Ivan Ristić), I stumbled on this EV breach by Adrian Dimcev:

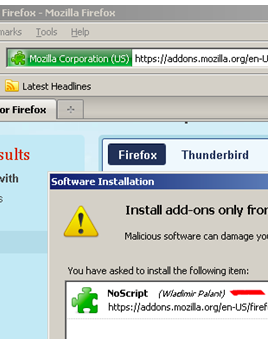

Say you go to https://addons.mozilla.org and download a popular extension. Maybe NoScript. The download location appears to be over HTTPS. ... (lots of HTTP/HTTPS blah blah) ... Today I had some time, so I've decided to play a little with this. ...Well, Wladimir Palant is now the author of NoScript, and of course, I'm downloading it over HTTPS.

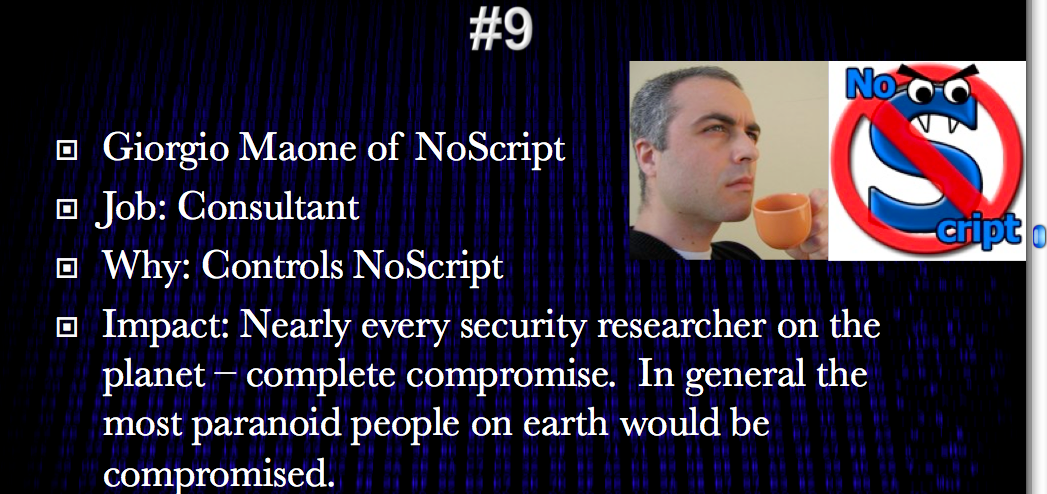

Boom! Mozilla's website uses an EV ("extended validation") certificate to present to the user that this is a high security site, or something. However, it provided the downloads over HTTP, and this was easily manipulable. Hence, NoScript (which I use) was hit by an MITM, and this might explain why Robert Hansen said that the real author of NoScript, Giorgio Maone, was the 9th most dangerous person on the planet.

What's going on here? Well, several things. The promise that the web site makes when it displays the green EV certificate is just that: a promise. The essence of the EV promise is that it is somehow of a stronger quality than say raw unencrypted HTTP.

EV checks identity to the Nth degree, which is why it is called "extended validation." Checking the identity is useful, but no more than is matched by a balance in overall security, so the difference between ordinary certs and EV is mostly irrelevant. In simple security model terms, that's not where the threats are (or ever were), so they are winding up the wrong knob. In economics terms, EV raises the cost barrier, which is classical price discrimination, and this results in a colourful signal, not a metric or measure. The marketing aligns the signal of green to security, but the attack easily splits security from promise. Worse, the more they wind the knob, the more they drift off topic, as per silver bullets hypothesis.

If you want to put it in financial or payments context, EV should be like PCI, not like KYC.

But it isn't, it's the gold-card of KYC. So, how easy is it to breach the site itself and render the promise a joke? Well, this is the annoying part. Security practices in the HTTP world today are so woeful that even if you know a lot, and even if you try hard to make it secure, the current practices are terrifically hard to secure. Basically, complexity is the enemy of security, and complexity is too high in the world of websites & browsers.

So CABForum, the promoters of EV, fell into the trap of tightening the identity knob up so much, to increase the promise of security ... but didn't look at all at the real security equation on which it is dependent. So, it is a strong brand over a weak product, it's green paint over wood rot. Worse for them, they won't ever rectify this, because the solutions are out of their reach: they cannot weaken the identity promise without causing the bureaucrats to attack them, and they cannot improve the security of the foundation without destroying the business model.

Which brings us to the real security question. What's the easiest fix? It is:

there is only one mode, and it is secure.

Now, hypothetically, we could see a possibility of saving EV if the CABForum contracts were adjusted such that they enforce this dictum. In practical measures, it would be a restriction that an EV-protected website would only communicate over EV, there would be no downgrade possible. Not to Blue HTTPS, not to white HTTP. This would include all downloads, all javascript, all mashups, all off-site blah blah.
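The "no downgrade" restriction is at least partly checkable by machine. Here is a minimal sketch in Python, assuming the page's HTML is already in hand: it lists every URL in a `src`, `href` or `action` attribute that resolves to plain `http`. The names `ResourceCollector` and `insecure_references` and the example.org hosts are hypothetical, invented for illustration. The check looks only at the URL scheme; telling EV apart from ordinary HTTPS would mean inspecting each server's certificate, which is not attempted here.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class ResourceCollector(HTMLParser):
    """Collect the URLs a browser would fetch or follow from a page."""

    URL_ATTRS = {"src", "href", "action"}

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.urls = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in self.URL_ATTRS and value:
                # Resolve relative references against the page's own URL.
                self.urls.append(urljoin(self.base_url, value))


def insecure_references(base_url, html):
    """Return every referenced URL that drops to plain http."""
    collector = ResourceCollector(base_url)
    collector.feed(html)
    return [u for u in collector.urls if urlparse(u).scheme == "http"]


# Hypothetical page: served over HTTPS, but the download link is not.
page = """
<a href="http://releases.example.org/addon.xpi">Download</a>
<script src="/static/app.js"></script>
<img src="https://cdn.example.org/logo.png">
"""
found = insecure_references("https://addons.example.org/", page)
# found == ["http://releases.example.org/addon.xpi"]
```

A site that held to "one mode, and it is secure" would get an empty list back for every page it serves.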

And that would be the first problem with securing the promise: EV is sold as a simple identity advertisement, it does not enter into the security business at all. So this would make a radical change in the sales process, and it's not clear it would survive the transition.

Secondly, CABForum is a cartel of people who are in the sales business. It's not security-wise but security-talk. So as a practical matter, the institution is not staffed with the people who are going to pick up this ball and run with it. It has a history of missing the target and claiming victory (c.f., phishing), so what would change its path now?

That's not to say that EV is all bad, but here is an example of how CABForum succeeded and failed, as illustrative of their difficulties:

EV achieved one thing, in that it put the brand name of the CA on the agenda. HTTPS security at the identity level is worthless unless the brand of the CA is shown. I haven't seen a Microsoft browser in a while, but the mock-ups showed the brand of the CA, so this gives the consumer a chance to avoid the obvious switcheroo from known brand to strange foreign thing, and recent breaches of various low-end commercial CAs indicate there isn't much consistency across the brands. (Or fake injection attacks, if one is worried about MITB.)

So it is essential to any sense of security that the identity of who made the security statement be known to consumers; and CABForum tried to make this happen. Here's one well-known (branded) CA's display of the complete security statement.

But Mozilla was a hold-out. (It is a mystery why Mozilla fights against the complete security statement. I have a feeling it is more of the same problem that infects CABForum, Microsoft and the Internet in general; monoculture. Mozilla is staffed by developers who apparently think that brands are TV-adverts or road-side billboards of no value to their users, and Mozilla's mission is to protect their flock against another mass-media rape of consciousness & humanity by the evil globalised corporations ... rather than appreciating the brand as handles to security statements that mesh into an overall security model.)

Which puts the example to the test: although CABForum was capable of winding the identity knob up to 11, and beyond, they were not capable of adjusting the guidelines to change the security model, and force the brand of the CA to be shown on the browser, in some sense. In these terms, what has now happened is that Mozilla is embarrassed, but zero fallback comes onto the CA. It was the CA that should have solved the problem in the first place, because the CA is in the sell-security business, Mozilla is not. So the CA should have adjusted contracts to control the environment in which the green could be displayed. Oops.

Where do we go from here? Well, we'll probably see a lot of embarrassing attacks on EV ... the brand of EV will wobble, but still sell (mostly because consumers don't believe it anyway, because they know all security marketing is mostly talk [or, do they?], and corporates will try any advertising strategy, because most of them are unprovable anyway). But, gradually the embarrassments will add up to sufficient pressure to push the CABForum to entering the security business. (e.g., PCI not KYC.)

The big question is, how long will this take, and will it actually do any good? If it takes 5-10 years, which I would predict, it will likely be overtaken by events, just as happened with the first round of security world versus phishing. It seems that we are in that old military story where our unfortunate user-soldiers are being shot up on the beaches while the last-war generals are still arguing as to what paint to put on the rusty ships. SNAFU, "situation normal, all futzed up," or in normal language, same as it ever was.

Posted by iang at February 10, 2010 12:23 PM

It's been an interesting few days for releasing "protocol errors"...

Which just goes to show what I've been saying for a while

Threat #1 = side channels

Threat #2 = Protocol errors

Threat #3 = Systems that use 2 and open 1.

Ho hum, I'd seriously expect to see a raft more of 1&2 and thus 3 over the coming months.

However the start of the solution involves a bonfire for 2 and an axe for 3, and a lot of learning for 1.

Then when the decks are cleared put in place a framework that allows bad components to be swapped out very quickly so only the bonfire is needed in future.

Posted by: Clive Robinson at February 12, 2010 03:40 AM

Just for those who don't know about the other "protocol failures" I indirectly mentioned above.

Here are a handful of protocol failures in financial and authentication systems (some presented at "Financial Cryptography 10" a few days ago).

They are by no means all the protocol failures of late...

These have been found by the folks over at Cambridge Labs (UK). You need to remember that in comparison to the criminals these guys are few and not well resourced. So...,

I would expect there to be other protocol failures that are currently "zero day"...

First :- Chip-n-Pin Busted

The biggie as far as our wallets go in Europe (and yours in the US unless you make a major major noise about it)...

The Chip-n-Pin protocol has a huge hole in it wide enough to get every skimmer/phisher world wide through standing side by side...

If that was just the only problem that would be bad enough, however,

Worse, it falsely records information that the banks then rely on in court to say it was the card holder not their system that was at fault...

http://www.lightbluetouchpaper.org/2010/02/11/chip-and-pin-is-broken/

Second :- HMQV CA PK validation failure

Feng Hao spotted a protocol error in HMQV (this is supposedly the best protocol of its type...),

http://www.lightbluetouchpaper.org/2010/02/09/new-attacks-on-hmqv/

As he says,

"The HMQV protocol explicitly specifies that the Certificate Authority (CA) does not need to validate the public key except checking it is not zero. (This is one reason why HMQV claims to be more efficient than MQV)"

Although HMQV's author claims it's unimportant, it leaves an interesting hole (indeterminate state) that may well be used for, say, a side channel in future implementations.

And this is more than likely to happen due to the usual "feature creep" abuse of protocols by system designers, who can be less security savvy (mind you that applies to all of us ;)

Third :- 3D Secure (VbV) Busted

Ross Anderson & Steve Murdoch took a serious look at 3D Secure (which underpins Verified by Visa or VbV).

Ross says,

"Steven Murdoch and I analyse 3D Secure. From the engineering point of view, it does just about everything wrong, and it's becoming a fat target for phishing."

So effectively protocol failures (too numerous to mention) from the user level downwards...

http://www.lightbluetouchpaper.org/2010/01/26/how-online-card-security-fails/

Hey time to sing "happy days are here again... ...cheer again... ...Happy days are here again."

Or more aptly the ancient Chinese Curse appears to have come to pass and "we are living in interesting times"...

Posted by: Clive Robinson at February 12, 2010 06:36 AM

For those thinking of designing their own protocols or "cherry picking" bits from other protocols my advice is don't do it (consider yourself warned ;)

If you still think you can do it securely on your own then there are a series of informal rules you might like to consider, (whilst you commit your error in judgment 8)

With regards to system level security protocols the "zero'th" rule is normally taken as a given. Which is a bit of a protocol failure in and of itself 8)

So,

0 : The given rule of security protocols 'they should be "state based" to allow clear and unambiguous analysis'.

Yes not just the protocol but the software should be a state machine. It helps you think clearly and if you do it as a matrix helps you realise where you have undefined states etc.
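To make the "do it as a matrix" point concrete, here is a minimal sketch in Python. The states, events and transitions are hypothetical, invented purely for illustration; the point is only that once the protocol is written down as a (state, event) table, the undefined cells can be listed mechanically rather than discovered in the field.

```python
from itertools import product

# Hypothetical toy protocol, for illustration only.
STATES = ["IDLE", "HELLO_SENT", "ESTABLISHED", "CLOSED"]
EVENTS = ["send_hello", "recv_hello_ack", "recv_data", "error"]

# The transition "matrix": one entry per defined (state, event) cell.
TRANSITIONS = {
    ("IDLE", "send_hello"): "HELLO_SENT",
    ("HELLO_SENT", "recv_hello_ack"): "ESTABLISHED",
    ("ESTABLISHED", "recv_data"): "ESTABLISHED",
    ("IDLE", "error"): "CLOSED",
    ("HELLO_SENT", "error"): "CLOSED",
    ("ESTABLISHED", "error"): "CLOSED",
}


def undefined_cells(states, events, transitions):
    """List every (state, event) cell of the matrix with no defined outcome."""
    return [(s, e) for s, e in product(states, events) if (s, e) not in transitions]


def step(state, event):
    """Advance the machine, refusing anything the table does not define."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"undefined transition: {event!r} in state {state!r}")


gaps = undefined_cells(STATES, EVENTS, TRANSITIONS)
# 16 cells in the matrix, 6 defined, so 10 gaps to decide about,
# for example ("IDLE", "recv_data").
```

Each gap then has to be settled on purpose, either as a legal transition or as an explicit failure, before the design is called finished.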

Oh and the well known 0.5 rules of KISS (Keep It Simple Stupid) or 7P's (Piss Poor Planning Produces Piss Poor Protocols) or 3C's (Clear, Concise, Calculated)...

Therefore with that out of the way, as far as security related protocols and systems and their "states" the following rules should be considered,

1 : The first rule of security is 'no undefined or ambiguous states'.

2 : The second rule is 'clearly defined segregation and transition from one state to another'.

3 : The third rule is 'States should not be aware of, let alone dependent on, "carried/payload data", only protocol'.

4 : The fourth is 'protocol signaling is always out of band to "carried/payload data" (that is the source and sink of the "carried/payload data" cannot see or change the security protocol signaling and data)'.

5 : The fifth is 'protocol and "carried/payload data" should both be authenticated, separately of each other, and further each datagram/message should be authenticated across them both'.

There are other rules to do with dealing with redundancy within and future extension of protocols (put simply DON'T ALLOW IT) but those five go a long way to solving a lot of the problems that open up side channels.
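One way to read the fifth rule, sketched in Python with the standard `hmac` module. This is an interpretation under stated assumptions, not a vetted design: the three-key layout, the choice of HMAC-SHA256, and the names `seal` and `verify` are all assumptions made for illustration.

```python
import hashlib
import hmac


def _tag(key: bytes, data: bytes) -> bytes:
    return hmac.new(key, data, hashlib.sha256).digest()


def seal(keys, header: bytes, payload: bytes):
    """Return (header_tag, payload_tag, message_tag).

    keys is a tuple (header_key, payload_key, message_key); using a
    separate key per tag is an assumption of this sketch.
    """
    header_key, payload_key, message_key = keys
    # Length-prefix both parts so the header/payload boundary is unambiguous.
    framed = (len(header).to_bytes(4, "big") + header
              + len(payload).to_bytes(4, "big") + payload)
    return (_tag(header_key, header),
            _tag(payload_key, payload),
            _tag(message_key, framed))


def verify(keys, header: bytes, payload: bytes, tags) -> bool:
    """Check all three tags; any single failure rejects the message."""
    expected = seal(keys, header, payload)
    # Evaluate every comparison before combining, using constant-time compares.
    results = [hmac.compare_digest(e, t) for e, t in zip(expected, tags)]
    return len(tags) == 3 and all(results)


keys = (b"h" * 32, b"p" * 32, b"m" * 32)   # placeholder keys, illustration only
tags1 = seal(keys, b"DATA seq=1", b"hello")
tags2 = seal(keys, b"DATA seq=2", b"world")

# Splice: a genuine header from message 1 with a genuine payload from message 2.
spliced = (tags1[0], tags2[1], tags1[2])
spliced_ok = verify(keys, b"DATA seq=1", b"world", spliced)
```

In the splice, the header tag and the payload tag each verify on their own, and it is only the tag across both that catches it, which is the reason for authenticating three ways rather than two.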

As a note on redundancy it is always best to avoid it. That is, the full range of values in a byte or other value container should be valid as data. This means you have to use "Pascal" not "C" strings etc and no padding to fit etc.
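A minimal sketch of the "Pascal" style in Python, assuming a two-byte big-endian length prefix (the prefix width and the function names are assumptions for illustration). Because the length travels in front, no byte value has to be reserved as a terminator, so a zero byte is ordinary data.

```python
import struct


def encode_field(data: bytes) -> bytes:
    """Length-prefixed ("Pascal" style) encoding: 2-byte length, then the data."""
    if len(data) > 0xFFFF:
        raise ValueError("field too long for a 2-byte length prefix")
    return struct.pack(">H", len(data)) + data


def decode_field(buf: bytes, offset: int = 0):
    """Return (data, next_offset), failing hard on any truncation."""
    if len(buf) - offset < 2:
        raise ValueError("truncated length prefix")
    (length,) = struct.unpack_from(">H", buf, offset)
    start = offset + 2
    end = start + length
    if end > len(buf):
        raise ValueError("truncated field")
    return buf[start:end], end


# A zero byte in the middle survives the round trip; a C string would end at it.
encoded = encode_field(b"a\x00b")
decoded, next_offset = decode_field(encoded)
```

Note that the decoder rejects a short buffer outright rather than returning what it has, in keeping with the "hard fail" advice further down.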

Why the fuss about "side channels"? They are all proto "covert channels" waiting to be exploited at some point in the future.

The main problem with side/covert channels is that their information bandwidth needs only to be an almost infinitesimally small fraction of the main channel to cause problems.

Think of it this way: each bit leaked is your security at least halved (to brute force) or worse with more directed or "trade off" attacks (oh one bit is always leaked you cannot avoid it or do much about it).
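The halving is just arithmetic on the size of the remaining key space. A small sketch in Python, assuming a uniformly random key and plain exhaustive search (the function name is hypothetical, and the smarter "trade off" attacks mentioned above would do better than this bound):

```python
def remaining_keyspace(key_bits: int, leaked_bits: int) -> int:
    """Number of candidate keys left to try after leaked_bits are known."""
    if not 0 <= leaked_bits <= key_bits:
        raise ValueError("leaked_bits must be between 0 and key_bits")
    return 2 ** (key_bits - leaked_bits)


full = remaining_keyspace(128, 0)
after_one = remaining_keyspace(128, 1)     # exactly half of full
after_forty = remaining_keyspace(128, 40)  # 2**88 candidates left
```

So a channel that leaks a single bit per session needs only forty sessions to take a 128-bit key down to 88 bits of work.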

Oh and always always remember the EmSec rules apply equally as well to data in a protocol "information channel" as well as a "physical communications channel",

1, Clock the Inputs,

2, Clock the Outputs,

3, Limit and fill Bandwidth,

4, Hard fail on error.

The first three help prevent "time based covert channels" not just within the system but through a system as well (have a look at Matt Blaze's "Key bugs" for more on how to implement such a time based "forward" covert channel).

The first prevents "forward channels", the second "backward channels". The consequence of this clocking is that you have to "pipeline" everything and use the same "master clock".
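What "clocking" amounts to can be sketched in a few lines of Python. Times are integer milliseconds and the function name is hypothetical, both assumptions of this sketch: whatever moment a result actually becomes ready, it is only released on the next tick of the master clock.

```python
def next_tick_ms(ready_ms: int, tick_ms: int) -> int:
    """First master-clock tick at or after ready_ms (integer milliseconds)."""
    if tick_ms <= 0 or ready_ms < 0:
        raise ValueError("tick_ms must be positive and ready_ms non-negative")
    # Integer ceiling division, avoiding floating point rounding.
    return -(-ready_ms // tick_ms) * tick_ms


# Two results that became ready 48 ms apart leave at the same instant.
release_a = next_tick_ms(101, 50)
release_b = next_tick_ms(149, 50)
```

Both values come out as 150, so an observer on the output side learns nothing from the 48 ms difference in how long the work took. Timing differences larger than one tick still show up as a whole number of ticks, which is one reason the tick has to be chosen against the slowest path through the pipeline.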

If this cannot be done you need to assess which risk is worse: data leaking out ("forward channel") or illicit data being sent in ("reverse channel").

That is, look at it as potentially your key value being "leaked" onto the network (forward) or your key value being "forced" into a known value from the network (reverse).

Hmm difficult call how about that master clock again 8)

The third is about redundancy within a channel.

Any redundancy in a channel can be used to implement a "redundancy covert channel". Therefore you need to first hard limit the bandwidth of the channel to a known and constant value. Then fill the channel to capacity with sent data so there is no redundancy.

You usually see this on military comms networks and the reason given if you ask is "it prevents traffic analysis" which whilst true conveniently hides the truth about "redundancy covert channels"...

The fourth rule is important: when anything goes wrong, "hard fail" and start again at some later point in time you select.

This limits channels from "induced error covert channels". When hard failing it is best to "stall the channel" for a random period of time, at least double the time that elapsed between the channel being opened to send a message and the error occurring.

Whilst this cannot stop an "error covert channel" being formed it makes it of very low bandwidth and easily seen (flag up all errors).
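The stall rule can be sketched in Python as follows. The upper bound on the random extra delay (`max_extra_ms`) is an assumption of this sketch, since the advice above only fixes the minimum, and the function name is hypothetical.

```python
import secrets


def stall_duration_ms(elapsed_ms: int, max_extra_ms: int = 1000) -> int:
    """How long to stall the channel after a hard fail.

    elapsed_ms is the time from opening the channel to the error.
    The stall is never shorter than double that, plus a random extra.
    """
    if elapsed_ms < 0 or max_extra_ms < 0:
        raise ValueError("durations must be non-negative")
    minimum = 2 * elapsed_ms
    # secrets rather than random: the extra should not be predictable.
    return minimum + secrets.randbelow(max_extra_ms + 1)


# An error 300 ms into a message costs the sender at least 600 ms of silence.
stall = stall_duration_ms(300)
```

Since every induced error now costs at least three times the time it took to provoke, the best an attacker can get out of the error channel is a small fraction of a bit per message time, and each attempt is logged.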

There are other rules but these should limit most of the publicly known covert channel types.

Posted by: Clive Robinson at February 12, 2010 08:09 AM

"(It is a mystery why Mozilla fights against the complete security statement. I have a feeling it is more of the same problem that infects CABForum, Microsoft and the Internet in general; monoculture. Mozilla is staffed by developers who apparently think that brands are TV-adverts or road-side billboards of no value to their users, and Mozilla's mission is to protect their flock against another mass-media rape of consciousness & humanity by the evil globalised corporations ... rather than appreciating the brand as handles to security statements that mesh into an overall security model.)"

We keep telling you why we don't do this, but you keep forgetting because the answer doesn't fit with your meta-narrative.

The CA market is not large enough for CAs to have marketing budgets large enough for them to impress their brand upon a sufficient percentage of consciousnesses in order to have the social effects you describe.

What do you advise someone to do if they see a brand they don't recognise on a new e-commerce site they are visiting?

1) Don't shop there. Result: the CA market reduces to one or two players who have enough marketing clout to get recognised.

2) Do shop there. Result: brands are irrelevant.

Gerv

Posted by: Gerv at February 23, 2010 12:00 PM

Gerv,

thanks for your comment, but I have to say: your comment eloquently makes my point. Think of it this way. Imagine the marketing manager with decades of experience in brand management, product design, marketing strategy, etc, coming to you and saying "I don't know why you're having all this trouble with phishing, just download OpenID and install it!"

Where do you begin to explain? I know it is likely offensive to say so, but this is approximately where Mozilla is with brands. There are people in the marketing world who get university degrees and MBAs and masters and decades of experience before they know enough to say "this is a brand problem, here is a brand solution."

Meanwhile, you are telling CAs and consumers that you are going to be managing their brands for them, because you're worried that shoppers will be confused, and/or you might over-concentrate the industry.

In practice, brand is used to reduce confusion, and consumers love brands for that very reason, so you're on the back foot to start with. To make that point work, you'd have to undermine centuries worth of factual development of marketing!

Also, Mozilla doesn't manage the market, the market manages the market. The notion that Mozilla should "protect consumers from concentration by reducing brands" or "save CAs because they don't have the budget" was quaint in the past, but since the fall of the Berlin Wall, centralised market planning is off the agenda. It didn't work for communism, it makes people poorer wherever they try it, and it won't do any good for users of Mozilla.

Posted by: Iang at February 23, 2010 12:52 PM

But, to answer your direct question.

Firstly, TLS isn't only used for shopping, and if you want to set the policy&practices based on that, then we can make some other changes as well: charge CAs for admission, coz they charge shops for admission, and shops charge consumers for admission. We don't do that, and neither should we tell consumers how to do their consumption.

Secondly, you, I, Mozilla rarely tell people to shop anywhere; we normally as individuals might say "that's a well-known brand" ... or "oh, amazon is safe for shoppers." The consumer decides whether to shop, so your answers are a false dichotomy.

Thirdly, the advice on seeing a new brand of CA is simply this:

*inform yourself*, and make a risk judgement. If they can't do that, then they're not safe enough to shop.

Fourthly, it is always a risk and always a judgement. Every purchase whether at the same brand or not is always a new experience. Consumers typically show they are far better in the aggregate at taking judgement calls with money than developers are with security tools, if not they'd starve; they are certainly more reliable at buying stuff than we are at debugging code :-)

Fifthly, don't forget google and the Internet: informing oneself about the quality of a local shop or CA just from the brand has never been easier.

Sixthly, people still have friends, even if the net tells them that facebook is the place for society. Everyone I know in real life has a computer person they ask for advice.

Posted by: Iang at February 23, 2010 01:00 PM

Ian: so you are suggesting that if a user visits www.thegap.com and sees a CA they don't recognise, the official advice would be to get their browser to tell them the name of the CA, and for them to go off and do research into that CA's trustworthiness before they come back and buy the latest hipsters?

We are not proposing centralized brand management, or "protect[ing] consumers from concentration by reducing brands" (please don't use quotation marks if it's not a quotation - I never said anything of the kind). It's your proposal which would reduce the brands, because only the largest CAs would have any hope of getting well known enough for people to recognize their brand.

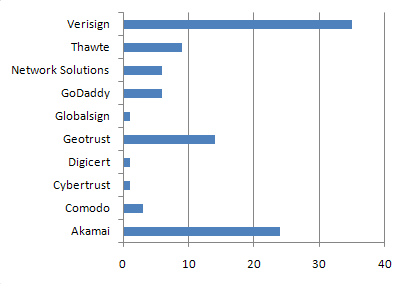

Security UI comes at a cost - a cost in complexity of UI and of message, and in potential user confusion. We should only present users with UI which enables them to make meaningful decisions based on information they have. 97% of the world population have never heard of Verisign, let alone Entrust or Comodo - and almost all of those who have, have no strong opinions either way about their trustworthiness. And that isn't going to change any time soon.

Gerv

Posted by: Gerv at February 25, 2010 10:23 AM