February 27, 2008

Attack on Brit retail payments -- some takeaways

Some of the people at U.Cambridge have successfully and easily attacked the card readers and cards used in retail transactions in Britain. This is good work. You should skim it for the documented attacks on the British payments systems, if only because the summary is well written.

My takeaways are these:

- the attack is a listening/recording attack in between the card readers and cards

- the communications between reader and card are not secured (they say "not encrypted"), so easy to tap,

- the attack hides inside a compromised reader (which is slightly but not significantly tamper-resistant)

- the cards themselves have "weak modes" to make the cards usable overseas,

- the card readers are available for sale on eBay!

- the certification or independent security review is done in secret.

Many others will write about the failure to use some security protocol, etc. Also note the failure of modes. So I shall add a postscript on the last point, secrecy of architecture. (I have written before about this problem, but I recall not where.)

Placing the security of the system under a wrap of secrecy allows a "secure-by-design" myth to emerge. Marketing people and managers cannot resist the allure of secret designs, and the internal security team has little interest in telling the truth (their job is easier without informed scrutiny!). As the designs and processes are secret, there is no independent check on the spread of this myth of total security.

At some point the organisation (a bank or a banking sector) internalises the myth of total security and starts to lean heavily on the false belief. Other very-needed parts of the security arrangement are slowly stripped away because "the devices are secure so other parts are not needed."

The reality is that the system is not "secure" but is "economically difficult to attack". Defence in depth was employed to raise and balance the total security equation, which means other parts of the business become part of the security. By way of example, the card readers are often required to be tamper-resistant, and to be "controlled items" which means they cannot be purchased openly. These things will be written into the security architecture.

But because nobody in the business outside the security team can see the security architecture -- it's secret! -- they do not know this. So we see the cost cutting and business changes indicated above. Because the business believes the system to be totally secure -- the myth! -- they don't bother to tell the security team that the cards have dual modes, or that the readers are now made of plastic and sold on eBay. The security team doesn't need to know this because they built a secure system.

In this way, the security-model-wrapped-in-secrecy backfires and destroys the security. For want of a better term, I sometimes call the rise of this myth within banks organisational cognitive dissonance.

February 17, 2008

Say it ain't so? MITM protection on SSH shows its paces...

For a decade now, SSH has successfully employed a simple opportunistic protection model that solved the shared-key problem. The premise is quite simple: use the information that the user probably knows. It does this by caching keys on first sight, and watching for unexpected changes. This was originally intended to address the theoretical weakness of public key cryptography called MITM or man-in-the-middle.
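The caching idea can be sketched in a few lines of Python. This is a minimal illustration, not OpenSSH's code: the `KnownHosts` class, the `Verdict` names and the in-memory dictionary are hypothetical stand-ins for the real `known_hosts` file, and the fingerprint uses the colon-separated MD5 format that SSH clients displayed at the time.

```python
import hashlib
from enum import Enum


class Verdict(Enum):
    UNKNOWN_HOST = "never seen this host: leap of faith needed"
    MATCH = "key matches the cached one"
    DIFFERENT_KEY_TYPE = "host known, but only with a different key type"
    KEY_CHANGED = "cached key of this type has CHANGED"


def fingerprint(key_blob: bytes) -> str:
    """MD5 fingerprint of a key blob, as colon-separated hex pairs."""
    digest = hashlib.md5(key_blob).hexdigest()
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))


class KnownHosts:
    """Hypothetical in-memory cache: host -> {key_type: fingerprint}."""

    def __init__(self):
        self._cache = {}

    def check(self, host: str, key_type: str, key_blob: bytes) -> Verdict:
        known = self._cache.get(host)
        if not known:
            return Verdict.UNKNOWN_HOST          # first sight: nothing to compare
        if key_type not in known:
            return Verdict.DIFFERENT_KEY_TYPE    # host known, new kind of key
        if known[key_type] == fingerprint(key_blob):
            return Verdict.MATCH
        return Verdict.KEY_CHANGED               # the unexpected change we watch for

    def accept(self, host: str, key_type: str, key_blob: bytes) -> None:
        """Record the key; this is the user answering 'yes' at the prompt."""
        self._cache.setdefault(host, {})[key_type] = fingerprint(key_blob)
```

The whole security model lives in `check`: only the first contact needs faith, and every later surprise is surfaced to the user rather than silently accepted.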

Critics of the SSH model, a.k.a. apologists for the PKI model of the Trusted Third Party (certificate authority), have always pointed out that this simply leaves SSH open to a first-time MITM. That is, when some key changes or you first go to a server, the key is "unknown" and therefore has to be accepted with a "leap of faith."

The SSH defenders claim that we know much more about the other machine, so we know when the key is supposed to change. Therefore, it isn't so much a leap of faith as educated risk-taking. To which the critics respond that we all suffer from click-thru syndrome and we never read those messages, anyway.

Etc etc, you can see that this argument goes round and round, and will never be settled until we get some data. So far, the data is almost universally against the TTP model (recall phishing, which the high priests of the PKI have not addressed to any serious extent that I've ever seen). About a year or two back, attackers turned their attention to SSH, and so far it has withstood that attention with no major or widespread successes for them. So much so that we hear very little about it, in contrast to phishing, which is now a four-year flood of grief.

After which preamble, I can now report that I have a data point on an attack on SSH! As this is fairly rare, I'm going to report it in full, in case it helps. Here goes:

Yesterday, I ssh'd to a machine, and it said:

    zhukov$ ssh some.where.example.net
    WARNING: RSA key found for host in .ssh/known_hosts:18
    RSA key fingerprint 05:a4:c2:cf:32:cc:e8:4d:86:27:b7:01:9a:9c:02:0f.
    The authenticity of host can't be established
    but keys of different type are already known for this host.
    DSA key fingerprint is 61:43:9e:1f:ae:24:41:99:b5:0c:3f:e2:43:cd:bc:83.
    Are you sure you want to continue connecting (yes/no)?

OK, so I am supposed to know what was going on with that machine, and it was being rebuilt, but I really did not expect SSH to be affected. The ganglia twitch! I asked the sysadm, and he said no, it wasn't him. Hmmm... mighty suspicious.

I accepted the key and carried on. Does this prove that click-through syndrome is really an irresistible temptation and the Achilles heel of SSH, and that even the experienced user will fall for it? Not quite. Firstly, as sysadms we don't really have a choice; we have to get in there, compromise or no compromise, and see. Secondly, it is OK to accept a compromise as long as we know it: we assess the risks and take them. I deliberately chose to go ahead in this case, so it is fair to say that I was warned, and the SSH security model did all that was asked of it.

Key accepted (yes), and onwards! It immediately came back and said:

iang@somewhere's password:

Now the ganglia are doing a ninja turtle act and I'm feeling very strange indeed. The thought of being the victim of an actual, real, live MITM is doubly delicious, as that is supposed to be as unlikely as dying from a shark bite. SSH is not supposed to fall back to passwords; it is supposed to use the keys that were set up earlier. At this point, for some emotional reason I can't further divine, I decided to treat this as a compromise and asked my mate to change my password. He did that, and then I logged in.

Then we checked. Lo and behold, SSH had been reinstalled completely, and a little investigation revealed what the warped daemon was up to: password harvesting. The password it harvested from me was a fresh one, whereas my sysadm mates had their real passwords compromised:

    $ cat /dev/saux
    foo@...208 (aendermich) [Fri Feb 15 2008 14:56:05 +0100]
    iang@...152 (changeme!) [Fri Feb 15 2008 15:01:11 +0100]
    nuss@...208 (43Er5z7) [Fri Feb 15 2008 16:10:34 +0100]
    iang@...113 (kash@zza75) [Fri Feb 15 2008 16:23:15 +0100]
    iang@...113 (kash@zza75) [Fri Feb 15 2008 16:35:59 +0100]
    $

The attacker had replaced the SSH daemon with one that insisted that the users type in their passwords. Luckily, we caught it with only one or two compromises.

In sum, the SSH security model did its job. This time! The fallback to server-key re-acceptance triggered sufficient suspicion, and the fallback to passwords gave confirmation.

From a single data point it's not easy to extrapolate, but we can point in the direction it is heading:

- the model works better than its absence would, for this environment and this threat.

- This was a node threat (the machine was apparently hacked via dodgy PHP and last week's Linux kernel root exploit).

- the SSH model was originally intended to counter an MITM threat, not a node threat.

- because SSH prefers keys to passwords (machines being more reliable than humans) my password was protected by the default usage,

- then, as a side-effect, or by easy extension, the SSH model also protects against a security-mode switch.

- it would have worked for a real MITM, but only just, as there would only have been the one warning.

- But frankly, I don't care. The compromise of the node was far more serious,

- and we know that MITM is the least cost-effective breach of all. There is a high chance of visibility and it is very expensive to run.

- If we can seduce even a small proportion of breach attacks across to MITM work then we have done a valuable thing indeed.

In terms of our principles, we can then underscore the following:

- We are still a long way away from seeing any good data on intercept over-the-wire MITMs. Remember: the threat is on the node. The wire is (relatively) secure.

- In this current context, SSH's feature to accept passwords, and fallback from key-auth to password-auth, is a weakness. If the password mode had been disabled, then an entire area of attack possibilities would have been evaded. Remember: There is only one mode, and it is secure.

- The use of the information known to me saved me in this case. This is a good example of how to use the principle of Divide and Conquer. I call this process "bootstrapping relationships into key exchanges" and it is widely used outside the formal security industry.
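The "only one mode" principle can be sketched as a client-side policy that refuses to drop from key authentication to passwords. This is a hypothetical Python illustration, not an SSH implementation: the function and exception names are invented, and `can_continue` loosely mirrors the list of methods an SSH server reports as still able to succeed after a failed attempt. In the OpenSSH client, `ssh_config` settings such as `PasswordAuthentication no` and `PreferredAuthentications publickey` are the usual way to get a similar effect; check the manual for your version.

```python
class PasswordFallbackRefused(Exception):
    """Raised when the only way forward would be to type a password."""


def next_auth_step(key_accepted, can_continue, allow_password_fallback=False):
    """Decide what to do after offering our public key (hypothetical policy).

    key_accepted: did the server accept our key?
    can_continue: method names the server says can still succeed,
                  e.g. {"password", "keyboard-interactive"}.
    """
    if key_accepted:
        return "authenticated with publickey"
    password_like = {"password", "keyboard-interactive"} & set(can_continue)
    if password_like:
        if allow_password_fallback:
            return "prompt for password"
        # One mode only: a server that suddenly wants a password is suspect,
        # which is exactly what a password-harvesting sshd would look like.
        raise PasswordFallbackRefused(
            f"key refused; server wants {sorted(password_like)}: possible trojaned sshd"
        )
    raise PermissionError("no usable authentication method left")
```

With the default `allow_password_fallback=False`, the replaced daemon in this story would have received an error instead of a typed password.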

All in all, SSH did a good job. Which still leaves us with the rather traumatic job of cleaning up a machine with 3-4 years of crappy PHP applications ... but that's another story.

For those wondering what to do about today's breach, it seems so far:

- turn all PHP to secure settings. Throw out all old PHP apps that can't cope.

- find an update for your Linux kernel quickly

- watch out for SSH replacements and password harvesting

- prefer SSH keys over passwords. The compromises can be more easily cleaned up by re-generating and re-setting the keys, they don't leapfrog so easily, and they aren't so susceptible to what is sometimes called "social engineering" attacks.

February 15, 2008

What is Apple doing with the iPhone?

Bruce Schneier has a good article from the technical side of "lock-in" in this month's Crypto-gram. If you wish to understand the forces on technology suppliers like Apple, it is a good read. It finishes with:

As for Apple and the iPhone, I don't know what they're going to do. On the one hand, there's this analyst report that claims there are over a million unlocked iPhones, costing Apple between $300 million and $400 million in revenue. On the other hand, Apple is planning to release a software development kit this month, reversing its earlier restriction and allowing third-party vendors to write iPhone applications. Apple will attempt to keep control through a secret application key that will be required by all "official" third-party applications, but of course it's already been leaked.

And the security arms race goes on...

What Apple are doing is neither full lock-in nor full open. That's the confusion. Why?

The answer comes from marketing, and more specifically, from the product life cycle or product roll-out economics. Let's assume that there is no competition for the iPhone (as this makes it easier to model).

In the rollout of an innovation, there is a huge problem with market understanding. The product can't sell because nobody understands what it is about. So there is a critical need for what are called "early adopters", the relatively clever, relatively rich people who buy any toy for the fun of it, and for the "first on the block" effect. These are around 1-3% of the market, depending.

Then, these early adopters will, if the product is any good, sell it to the rest of the people around them. Your sales force is your early adopters. So this means it is critical to please the early adopters, because without them, the product won't sell.

Who are the early adopters for any new and expensive phone? Phone hackers are one good answer. (Business geeks are another.) People who hack phones won't achieve much themselves, but they tend to be quite influential, as their sex appeal to the media is high and the public takes their knowledge as wisdom. Their enthusiasm sells phones.

The challenge for Apple is revealed. They have to attract the hackers, but not so much as to lose control. The mass market wants lock-in because they want a simple solid product with few choices. The early adopters want open, the reverse. Apple therefore walks the line between the two.

So far, successfully. Of course, this whole model gets much more complicated when there are competitors, which is why watching the Google phone and the GNU designs is interesting ... there you will see the Apple model being challenged.

But for now, it is fairly clear that this is the strategy that Apple is following. And, in the future, Apple will simply wind the lock up a bit. Not fast, just fast enough to keep the mass market locked in, and the early adopter enthusiasm keen.

It's good stuff; hats off to Apple, this is what strategic marketing is all about. The only thing better than watching a great strategy unroll is creating one :)

February 14, 2008

FC2008 -- report by Dani Nagy

This was my first time [writes Dani Nagy] at the annual Financial Cryptography and Data Security Conference, even though I have extensively used results published at this conference in my research. In short, it was very interesting from both a technical and a social point of view (as in learning new results and meeting interesting people from the field). And it was a lot of fun, too.

Pairing-based cryptography seems to be all the rage in the fundamental crypto research department. Secure Function Evaluation seems to be slowly inching from pure theory into the realm of applicable techniques. But don't hold your breath yet.

In between theory and practice was Moti Yung's very entertaining invited talk about Kleptography -- using cryptographic techniques for offensive, malicious purposes rather than for defenses, typically against other cryptographic systems. As an example, he gave a public-private RSA key generation algorithm which is indistinguishable from an honest, random one in a black-box manner; even if it is reverse engineered, the keys generated with it can be factored only with the effort of factoring a key half that long. The attacker who pushes this key generation algorithm on unsuspecting victims, however, will be able to factor their keys with very little effort.
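For readers who have not met the idea, here is a deliberately crude Python toy showing how a key generator can leak keys to its designer. It is NOT the algorithm from the talk: that one hides its leak so that keys look honest and survive reverse engineering, whereas this sketch uses the simplest possible covert channel, setting the victim's public exponent to the prime `p` encrypted under the attacker's RSA key. Anyone inspecting the huge, random-looking exponent would be suspicious, since real keys usually use a small exponent such as 65537. All function names are hypothetical, key sizes are tiny so it runs quickly, nothing here is secure, and `pow(x, -1, m)` needs Python 3.8 or later.

```python
import math
import random

SMALL_PRIMES = (2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37)


def is_probable_prime(n, rng, rounds=20):
    """Miller-Rabin probabilistic primality test."""
    if n < 2:
        return False
    for sp in SMALL_PRIMES:
        if n % sp == 0:
            return n == sp
    d, s = n - 1, 0
    while d % 2 == 0:
        d //= 2
        s += 1
    for _ in range(rounds):
        x = pow(rng.randrange(2, n - 1), d, n)
        if x in (1, n - 1):
            continue
        for _ in range(s - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False
    return True


def random_prime(bits, rng):
    while True:
        candidate = rng.getrandbits(bits) | (1 << (bits - 1)) | 1
        if is_probable_prime(candidate, rng):
            return candidate


def rsa_keygen(bits, rng, e=65537):
    """Honest textbook RSA: returns ((e, n), d)."""
    while True:
        p, q = random_prime(bits // 2, rng), random_prime(bits // 2, rng)
        phi = (p - 1) * (q - 1)
        if p != q and math.gcd(e, phi) == 1:
            return (e, p * q), pow(e, -1, phi)


def backdoored_keygen(attacker_pub, bits, rng):
    """Toy leak: the victim's public exponent is p encrypted under the attacker's key."""
    big_e, big_n = attacker_pub
    while True:
        p, q = random_prime(bits // 2, rng), random_prime(bits // 2, rng)
        phi = (p - 1) * (q - 1)
        e = pow(p, big_e, big_n)  # the covert channel (requires p < big_n)
        if p != q and math.gcd(e, phi) == 1:
            return (e, p * q), pow(e, -1, phi)


def attacker_factor(victim_pub, attacker_priv, attacker_pub):
    """Only the attacker can decrypt p out of the victim's public exponent."""
    e, n = victim_pub
    p = pow(e, attacker_priv, attacker_pub[1])
    if n % p != 0:
        raise ValueError("key was not produced by the backdoored generator")
    return p, n // p
```

The victim's key works perfectly as an RSA key, which is the point: nothing in normal use reveals that the attacker can factor it.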

By sheer accident, I found myself on the panel about e-cash. The topic was the gap between real-life electronic cash and academic research. One rule was not to speak about one's own work. The participants were selected from different parts of the world and different walks of life. For me, the biggest news was that credit cards are not common at all in Japan. For most of the people there, WebMoney (which was what I talked about) was a complete novelty; I, in turn, found it a bit surprising that WebMoney is almost entirely unknown among FC people. On the other hand, the reason is obvious: most of WebMoney's publications, including scientific ones, are available only in Russian.

The rump session was a lot of fun, too. At the last minute, I decided to present the core of my other paper, the one that was rejected. There were many different talks, with quite a bit of humor.

The other panel, about usability issues, was also interesting, but my personal conclusion was that there's still a very long way to go until Skype-like usability becomes the norm rather than an odd exception. The completely wrong threat models of the 1990s, with all-powerful adversaries, men in the middle and completely trustworthy third parties, are still too deeply entrenched in many people's thinking.

For future conferences, the goal is to attract more people with finance, business and law backgrounds, in addition to cryptography and CS, which still dominate almost exclusively, despite the fact that there is a growing realization that it is not necessarily the crypto part that makes or breaks FC solutions.

At the general meeting of IFCA, there were the usual voting-on-voting discussions and people not willing to take any responsibility for anything, but I sort of expected it. The important news is that the next island is Barbados and the one after that is, hopefully, Tenerife (this is what most voting members seem to prefer, including myself). The financial objective of having the cost of two conferences in the bank has not been achieved yet, but IFCA is getting there. The nightmare scenario is that a hurricane destroys the island AFTER EVERYTHING HAS BEEN PAID, and all registered participants still need to be refunded.

The conference hotel (Beach Resort El Cozumeleño) was excellent (except for one of the evening shows, which was horrible), the Internet access was reasonably good, the food was good, the sea and the weather were warm, so the overall impression is very positive. The various organized activities were fun, too, such as diving and snorkeling.

For those of us who left some time before and/or after the conference to explore, the Yucatan peninsula also offered numerous opportunities. But that was not strictly part of the conference.

Daniel A. Nagy

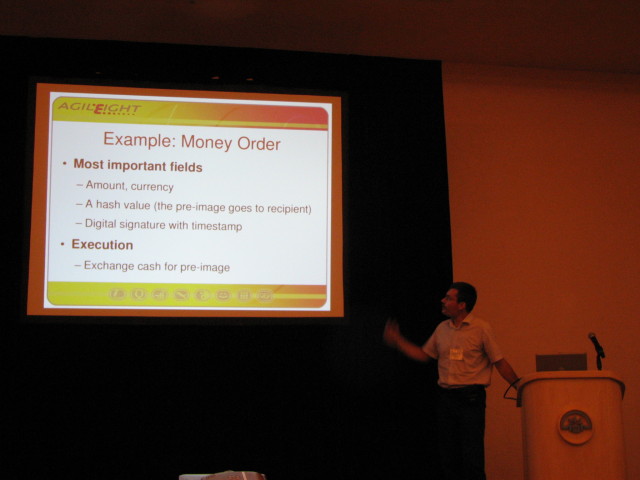

AgilEight, Security Architect

February 13, 2008

H2.1 Protocols Divide Naturally Into Two Parts

Peter Gutmann made the following comment:

Hmm, given this X-to-key-Y pattern (your DTLS-for-SRTP example, as well as OpenVPN using ESP with TLS keying), I wonder if it's worth unbundling the key exchange from the transport? At the moment there's (at least):

    TLS-keying --+-- TLS transport
                 |
                 +-- DTLS transport
                 |
                 +-- IPsec (ESP) transport
                 |
                 +-- SRTP transport
                 |
                 +-- Heck, SSH transport if you really want

Is the TLS handshake the universal impedance-matcher of secure-session mechanisms?

Which reminds me to bring out another hypothesis in secure protocol design, being #2: Divide and conquer. The first part is close to the above remark:

#2.1 Protocols Divide Naturally Into Two Parts

Good protocols divide into two parts, the first of which says to the second,

trust this key completely!

Frequently we see this separation between a Key Exchange phase and Wire Encryption phase within a protocol. Mingling these phases seems to result in excessive confusion of goals, whereas clean separation improves simplicity and stability.

Note that this principle is recursive. That is, your protocol might separate around the key, being part 1 for key exchange, and part 2 for encryption. The first part might then separate into two more components, one based on public keys and the other on secret keys, which we can call 1.a and 1.b. And, some protocols separate further, for example primary (or root) public keys and local public keys, and, session negotiation secret keys and payload encryption keys.
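The recursive split can be made concrete with a toy Python sketch. Part 1.a is a public-key step, an unauthenticated Diffie-Hellman exchange over the modulus 2^127 - 1, chosen only because it is prime and easy to write down (it is not a secure group); part 1.b is a secret-key step that derives the session key; part 2 is the wire layer, which receives nothing but that key and trusts it completely. To stay within the standard library, part 2 only authenticates records with HMAC-SHA256 and sequence numbers rather than encrypting them. All names are hypothetical.

```python
import hashlib
import hmac
import secrets

# Toy parameters for illustration only: NOT a secure Diffie-Hellman group.
P = 2**127 - 1  # a Mersenne prime
G = 3


# Part 1.a: public-key step. Output: a shared secret.
def dh_keypair():
    priv = secrets.randbelow(P - 2) + 1
    return priv, pow(G, priv, P)


def dh_shared(priv, peer_pub):
    return pow(peer_pub, priv, P).to_bytes(16, "big")


# Part 1.b: secret-key step. Output: the one key handed across the border.
def derive_session_key(shared_secret, transcript):
    return hmac.new(shared_secret, b"session key" + transcript, hashlib.sha256).digest()


# Part 2: wire protection. It knows nothing about how the key was made;
# it simply trusts the key completely.
class WireChannel:
    def __init__(self, key):
        self._key = key
        self._send_seq = 0
        self._recv_seq = 0

    def seal(self, payload):
        seq = self._send_seq.to_bytes(8, "big")
        self._send_seq += 1
        tag = hmac.new(self._key, seq + payload, hashlib.sha256).digest()
        return seq + payload + tag

    def unseal(self, record):
        seq, payload, tag = record[:8], record[8:-32], record[-32:]
        if int.from_bytes(seq, "big") != self._recv_seq:
            raise ValueError("unexpected sequence number")
        expected = hmac.new(self._key, seq + payload, hashlib.sha256).digest()
        if not hmac.compare_digest(tag, expected):
            raise ValueError("bad MAC")
        self._recv_seq += 1
        return payload
```

The only thing crossing the border between the parts is a 32-byte key, so either side could be replaced without the other noticing; whether it *should* be is the subject of the warning that follows.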

As long as the borders are clean and simple, this game is good because it allows us to conquer complexity. But when the borders are breached, we are adding complexity which adds insecurity.

A Warning. One apparent advantage of this hypothesis is that one can swap in a different Key Exchange, or upgrade the protection protocol, or indeed repair one or other part without doing undue damage. But bear in mind #1: just because these natural borders appear doesn't mean you should slice, dice, cook and book like software people do.

Divide and conquer is #2 because of its natural tendency to turn one big problem into two smaller problems. More later...

February 02, 2008

SocGen - the FC solution, the core failure, and some short term hacks...

Everyone is talking about Société Générale and how they managed to mislay EUR 4.7bn. The current public line is that a rogue trader threw it all away on the market, but some of the more canny people in the business don't buy it.

One superficial question is how to avoid this dilemma.

That's a question for financial cryptographers, I say. If we imagine a hard payment system is used for the various derivative trades, we would have to model the trades as two or more back-to-back payments. As they are positions that have to be made then unwound, or cancelled off against each other, this means that each trader is an issuer of subsidiary instruments that are combined into a package that simulates the intent of the trade (theoretical market specialists will recall the zero-coupon bond concept as the basic building block).

So, Monsieur Kerviel would have to issue his part in the trades, and match them to the issued instruments of his counterparty (whose name we would dearly love to know!). The two issued instruments can be made dependent on each other, an implementation detail we can gloss over today.

Which brings us to the first part: fraudulent trades to cover other trades would not be possible with proper FC because it is not possible to forge the counterparty's position under triple-entry systems (that being the special magic of triple-entry).
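A minimal sketch of why a trader cannot fake the other side of a trade when each leg is a receipt issued and signed by its own issuance server. Everything here is hypothetical: the `Receipt` fields, the shared `trade_id` that links the two legs, and the issuer names. HMAC stands in for the public-key signatures a real triple-entry system would use, which presumes (as in the text) that the back office runs the issuance servers and so holds the verification keys.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Receipt:
    issuer: str
    payer: str
    payee: str
    amount: int
    trade_id: str
    sig: bytes = b""

    def body(self) -> bytes:
        """Everything the issuer signs (i.e. all fields except the signature)."""
        return json.dumps([self.issuer, self.payer, self.payee,
                           self.amount, self.trade_id]).encode()


class IssuanceServer:
    """Hypothetical issuer; HMAC stands in for a public-key signature."""

    def __init__(self, name: str, key: bytes):
        self.name = name
        self._key = key

    def _mac(self, receipt: Receipt) -> bytes:
        return hmac.new(self._key, receipt.body(), hashlib.sha256).digest()

    def issue(self, payer, payee, amount, trade_id) -> Receipt:
        unsigned = Receipt(self.name, payer, payee, amount, trade_id)
        return replace(unsigned, sig=self._mac(unsigned))

    def verify(self, receipt: Receipt) -> bool:
        return receipt.issuer == self.name and hmac.compare_digest(
            receipt.sig, self._mac(receipt))


def trade_is_valid(leg_a, leg_b, issuers):
    """Back-office check: each leg signed by its own issuer, and the legs mirror each other."""
    ia, ib = issuers.get(leg_a.issuer), issuers.get(leg_b.issuer)
    return (ia is not None and ib is not None
            and leg_a.issuer != leg_b.issuer
            and ia.verify(leg_a) and ib.verify(leg_b)
            and leg_a.trade_id == leg_b.trade_id
            and leg_a.payer == leg_b.payee and leg_a.payee == leg_b.payer)
```

A trader who fabricates the counterparty's leg, even by copying every field correctly, cannot produce a signature that verifies under the counterparty's issuer, so the fake trade fails the check.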

Higher layer issues are harder, because they are less core rights issues and more human constructs, so they aren't as yet as amenable to cryptographic techniques, but we can use higher layer governance tricks. For example, the size of the position, the alarms and limits, and the creation of accounts (secret or bogus customers). The backoffice people can see into the systems because it is they who manage the issuance servers (ok, that's a presumption). Given the ability to tie down every transaction, we are simply left with the difficult job of correctly analysing every deviation. But, it is at least easier because a whole class of errors is removed.

Which brings us to the underlying FC question: why not? It was apparent through history, and there are now enough cases to form a pattern, that the reason for the failure of FC was fundamentally that the banks did not want it. If anything, they'd rather you dropped dead on the spot than suggest something that might improve their lives.

Which leads us to the very troubling question of why banks hate to do it properly. There are many answers, all speculation, and as far as I know, nobody has done research into why banks do not employ the stuff they should if they responded to events as other markets do. Here are some speculative suggestions:

- banks love complexity

- more money is made in complexity because the customer pays more, and the margins are higher for higher payments

- complexity works as a barrier to entry

- complexity hides funny business, which works as well for naughty banks, tricky managers, and rogue traders. It creates jobs, makes staffs look bigger. Indeed it works well for everyone, except outsiders.

- compliance helps increase complexity, which helps everything else, so compliance is fine as long as all have to suffer the same fate.

- banks have a tendency to adopt one compatible solution across the board, and cartels are slow to change

- nobody is rewarded for taking a management risk (only a trading risk)

- banks are not entrepreneurial or experimental

- HR processes are steam-age, so there aren't the people to do it even if they wanted to.

Every one of those reasons is a completely standard malaise which strikes companies in every industry, not just banking. The difference is competition; in every other industry, the competition would eat up the poorer players, but in banking, the poorer players are kept alive. So the #1 fundamental reason why rogue traders will continue to eat up banks, one by one, is the lack of competitive pressure to do any better.

And of course, all these issues feed into each other. Given all that, it is hard to see how FC will ever make a difference from inside; the only way is from outside, to the extent that challengers find an end-run around the rules for non-competition in banking.

What then would we propose to the bank to solve the SocGen dilemma as a short term hack? There are two possibilities that might be explored.

- Insurance for rogue traders. Employ an external insurer and underwriter to provide a 10bn policy on such events. Then, let the insurer dictate systems & controls. As more knowledge of how to stop the event comes in, the premiums will drop to reward those who have the better protection.

This works because it is an independent and financially motivated check. It also helps to start the inevitable shift of moving parts of regulation from the current broken 20th century structure over to a free market governance mechanism. That is, it is aligned with the eventual future economic structure.

- Separate board charged with governance of risky (banking) assets. As the current board structure of banking is that the directors cannot and will not see into the real positions, due to all the above and more, it seems that as time goes on, more and more systematic and systemic conditions will build up. Managing these is more than a full time job, and more than an ordinary board can do.

So outsource the whole lot of risk governance to specialists in a separate board-level structure. This structure should have visibility of all accounts, all SPEs, all positions, and should also be the main conduit to the regulator. It has to be equal to the business board, because it has to have the power to make it happen.

The existing board maintains the business side: HR, markets, products, etc. This would neatly divide the "special" area of banking from the "general" area of business. Then, when things go wrong, it is much easier to identify who to sack, which improves the feedback to the point where it can be useful. It also puts into clearer focus the specialness of banks, and their packaged franchises, regulatory costs and other things.

Why or how these work is beyond the scope of a blog. Indeed, whether they work is a difficult experiment to run, and given the Competition finding above, it might be that we do all this, and still fail. But I'd still suggest them, as both those ideas can be rolled out in a year, and the current central banking structure has at least another decade to run, and probably two, before the penny drops, and people realise that the regulation is the problem, not the solution.

(PS: Jim invented the second one!)

Middle banking in an English muddle

The British bankers are still trying to convince the skeptical public that cash is overpriced, and the "subsidy" should be let go. Although the central banks have lined up behind their banks, and various credible reports have been duly spec'ed, paid for, and rushed out to an audience bereft of other sources, the masses are reacting to something else the banks got a little wrong: Identity. And Payments. And Guarantees. Indeed, everything it seems except the noble pound note.

Maybe it's a spoof, but I can't tell. Read for yourself:

It's a middle England commuter town where the chief topic of conversation is usually the weather or train delays. But now the Hertfordshire town of Letchworth is coping with an explosion of identity theft, the victim of gangs of fraudsters who target one community, siphon as much money as possible out of bank accounts then move, locust-like, to neighbouring areas.

The impact on individuals who have seen their bank accounts cleaned out is devastating. And now evidence is emerging of how whole communities are losing faith in bank cards and chip-and-pin technology - and are turning back to cash-only transactions.

When Guardian Money spoke to consumers on the streets of Letchworth, we found large numbers of people boycotting outdoor cash machines, and, in some cases, abandoning the use of bank cards in stores.

Shoppers at the Shell petrol station told us they will never use their bank cards to pay for fuel again, after witnessing the chaos caused to friends who have had bank accounts plundered by fraudsters. Outdoor ATMs are strangely quiet, while inside banks there are queues of customers taking out cash.

Letchworth has a population of 33,000, but virtually everyone we spoke to in the town centre this week said they had either been the victim of bank card fraud - or they knew of someone who has had money illegally taken from their bank account. Usually the illegal withdrawals take place in Australia.

Those tricky Australians! Thank heavens we have someone to blame. Now, we all know that the Guardian is not exactly the most credible of sources, but I haven't seen its integrity challenged on anything it has reported about the payments blight.

So what's the truth? Are the Aussies turning your plastic cards into ashes? Is middle England being hit for six? Is this the end of the banks' bodyline assault on cash?

(Dave talks some more on this. Also, see Light Blue Touchpaper which probably isn't a spoof!)