January 26, 2020

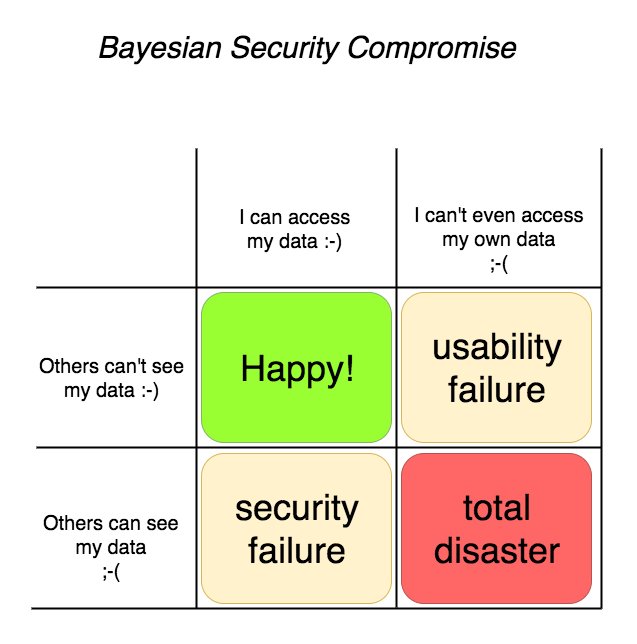

Bayesian Security Compromise

Watching an argument (I started) (on the Internet) about Apple dropping iCloud encryption (allegedly) reminded me of how hard it is to get security & privacy right.

What had actually happened was that Apple had made it possible for you to back up securely to iCloud and leave a key there, so if you got locked out, Apple could help you get your access back. Usability to the fore!

But some were surprised at this - I know I was. So how do you get it your way? The details are complicated and take several pages, and clearly most people will not follow the instructions, or get the setup they want, without help.

Keeping access to your own data is *important*! Keeping others out of your data is also important. And these two are in collision, tension, across the yellow diagonal above.

Strangely, it has a Bayesian feel to it - there is a strong link, like the one between the "false positives" and the "false negatives", such that if you dial one down, you actually dial the other up! Bayesian statistics tells you where on the compromise you are, and whether you've blown out the model by being too tight on one particular axis.
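To make the dial concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the idea of a single recovery "score", the made-up score lists, and the names `error_rates` and `sweep`. It is not how Apple or anyone else decides recovery. It only shows that, with one threshold, letting fewer attackers in means locking more owners out.

```python
# Hypothetical evidence scores (0-100) presented at account recovery.
# All numbers are invented for illustration only.
OWNER_SCORES = [35, 50, 55, 60, 70, 80, 85, 90, 95, 99]
ATTACKER_SCORES = [5, 10, 15, 20, 30, 40, 45, 55, 65, 75]


def error_rates(threshold):
    """Return (false_accept_rate, false_reject_rate) for one threshold.

    false accept: an attacker scores at or above the threshold and gets in.
    false reject: the real owner scores below it and is locked out.
    """
    false_accepts = sum(1 for s in ATTACKER_SCORES if s >= threshold)
    false_rejects = sum(1 for s in OWNER_SCORES if s < threshold)
    return (false_accepts / len(ATTACKER_SCORES),
            false_rejects / len(OWNER_SCORES))


def sweep(thresholds):
    """Evaluate both error rates at each threshold."""
    return [(t, *error_rates(t)) for t in thresholds]


if __name__ == "__main__":
    for t, far, frr in sweep(range(0, 101, 20)):
        print(f"threshold={t:3d}  attackers let in={far:.0%}  "
              f"owners locked out={frr:.0%}")
```

Sweeping the threshold upward moves you along the diagonal: the attacker rate only ever falls and the owner lock-out rate only ever rises, and no setting gets both to zero with these overlapping scores.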

When you get to a scale where your user base includes people of all persuasions and security & privacy preferences, it might be that you're stuck in that compromise - you can save most of the people most of the time, so an awful lot depends on what most of your users are like.

(Note the discord with "there is only one mode, and it is secure".)

Posted by iang at January 26, 2020 04:40 AM